AI content generators are being used by the masses: everyone from students to playwrights to content marketers is turning to these tools to help them churn out content faster. So it should come as no surprise that AI content detectors are also being adopted to check how much of a given piece of content was written by AI.

And it’s not just teachers checking students’ essays or editors checking writers’ articles. It works the other way around, too: writers are running their own work through detectors to prove it’s human.

For example, I spent hours running my own writing samples through these detectors, chasing a “human” score that would prove I actually wrote them. It wasn’t until hour ten that I realized I was focused on the wrong thing.

Here, I’ll share why relying on AI content detectors is a bad idea—plus, what to focus on instead to ensure your text sounds like it was written by you, a real human.


How do AI content detectors work?

AI content detectors use many of the same principles and technologies as AI writing generators. They scrutinize sentence structure, vocabulary choices, and syntax patterns to distinguish between human- and machine-generated text. For example, if a writing sample lacks variation in sentence structure, length, or complexity, the AI content detector might flag it as AI-generated (the assumption being that human-generated content is usually more dynamic).
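To make the idea of “variation” concrete, here’s a toy Python sketch of one such signal: how much sentence length varies across a passage. It isn’t how ZeroGPT, Copyleaks, or TraceGPT work internally (real detectors are typically far more sophisticated); the function names and the 0.3 threshold are my own hypothetical choices, purely for illustration.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split text into sentences on ., !, or ? and count the words in each."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (stdev / mean).

    Higher values mean sentence lengths vary more. Returns 0.0 when there
    are fewer than two sentences, since variation is undefined.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.stdev(lengths) / statistics.mean(lengths)


def looks_uniform(text: str, threshold: float = 0.3) -> bool:
    """Toy heuristic: flag text whose sentence lengths barely vary.

    The 0.3 threshold is an arbitrary assumption, not a real detector's value.
    """
    if len(sentence_lengths(text)) < 2:
        return False  # too short to judge either way
    return length_variation(text) < threshold


if __name__ == "__main__":
    flat = "The tool is useful. The tool is fast. The tool is cheap."
    varied = ("I tried it. Honestly, after ten hours of pasting samples into "
              "three different detectors, I stopped caring about the score. Why?")
    print(looks_uniform(flat))    # True
    print(looks_uniform(varied))  # False
```

A rule this crude also shows why detectors misfire: a human who writes evenly paced sentences gets flagged, and an AI prompted to vary its rhythm slips through.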

How reliable are AI content detectors?

Despite the benefits AI content detectors offer, my experience has taught me that these tools still have a long way to go before we can rely on them to give us an accurate human-vs-AI score.

I shared the specifics of my experience in a LinkedIn post that went viral. Here’s the gist of it: a potential client asked me to complete a writing test and run my piece through an AI content detector. Since my final draft is always human-written, I didn’t think I had anything to worry about. But then I found myself spending 45 minutes trying to get a 100% human-written score to pass the test. It was extremely frustrating.

This is what prompted me to conduct a series of experiments to test how reliable (or unreliable) these AI content detectors really are.

AI content detectors: An experiment in reliability

For my experiment, I tested four pieces of content:

- One poorly written, AI-generated piece
- One poorly written, human-generated piece
- One well-written, AI-generated piece
- One well-written, human-generated piece

I ran them all through three popular AI content detectors: ZeroGPT, Copyleaks, and TraceGPT.

Test 1: Poorly written AI content

Full disclosure: I love experimenting with AI prompts. I’ve played around with AI writing generators enough to know how to write an effective prompt and, conversely, how to write an ineffective one.

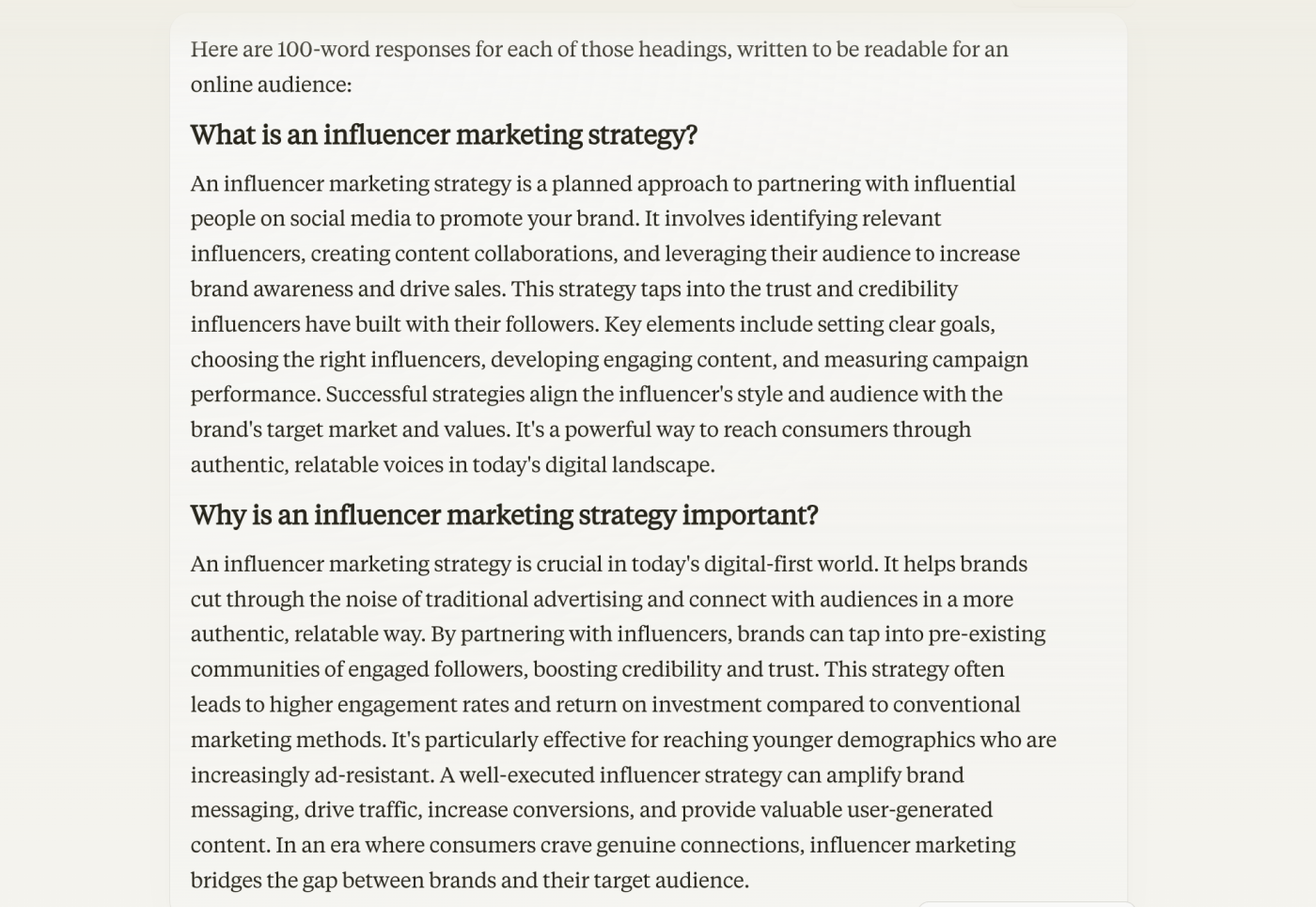

To generate my first writing sample via Claude, I put my best ineffective prompt-writing skills to use. My prompt was simple:

Answer two questions (“What is an influencer marketing strategy?” and “Why is an influencer marketing strategy important?”) in 100 words and make the answers readable for an online audience.

Here’s what it gave me:

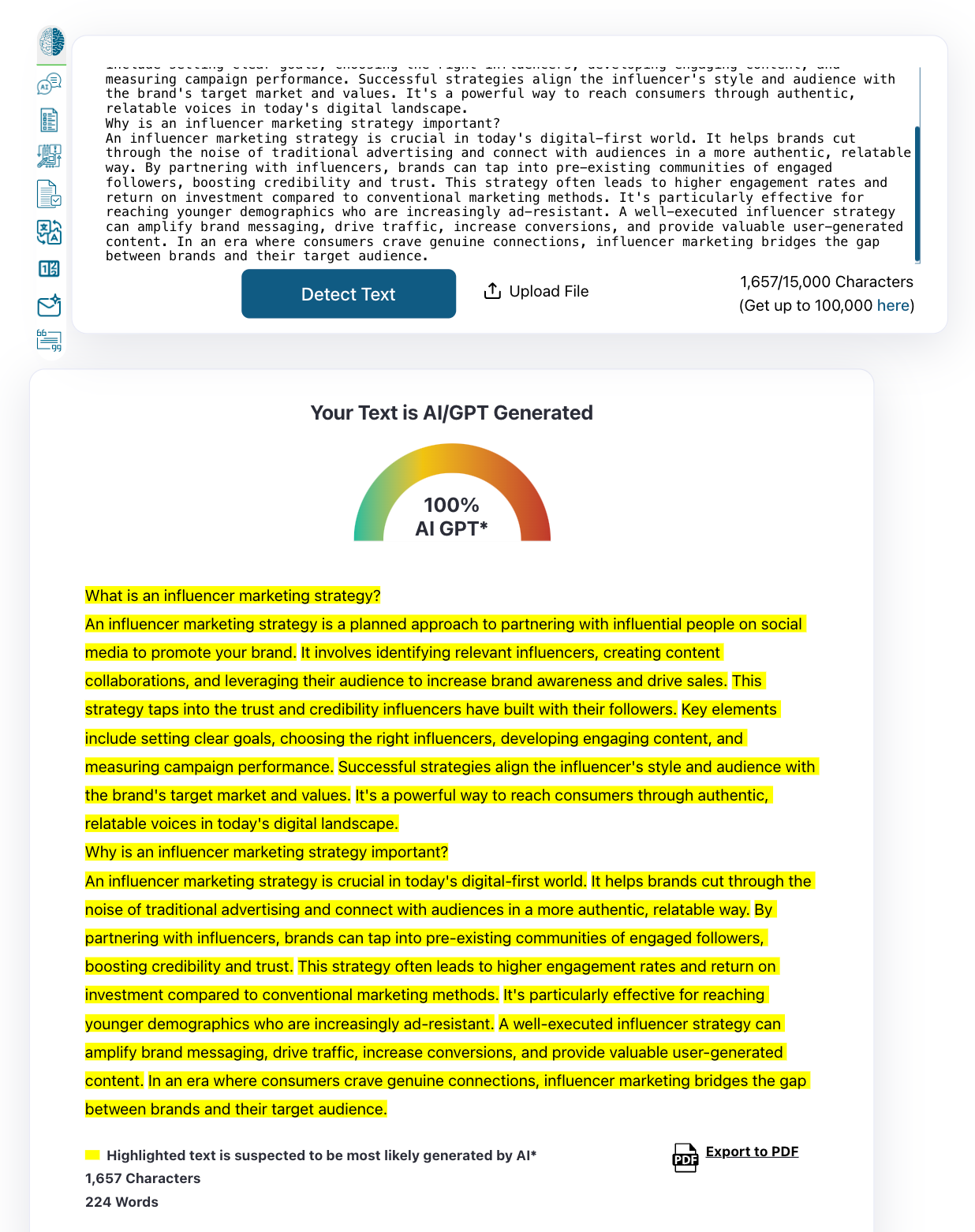

When I ran Claude’s output through ZeroGPT, it detected that the text was 100% generated by AI.

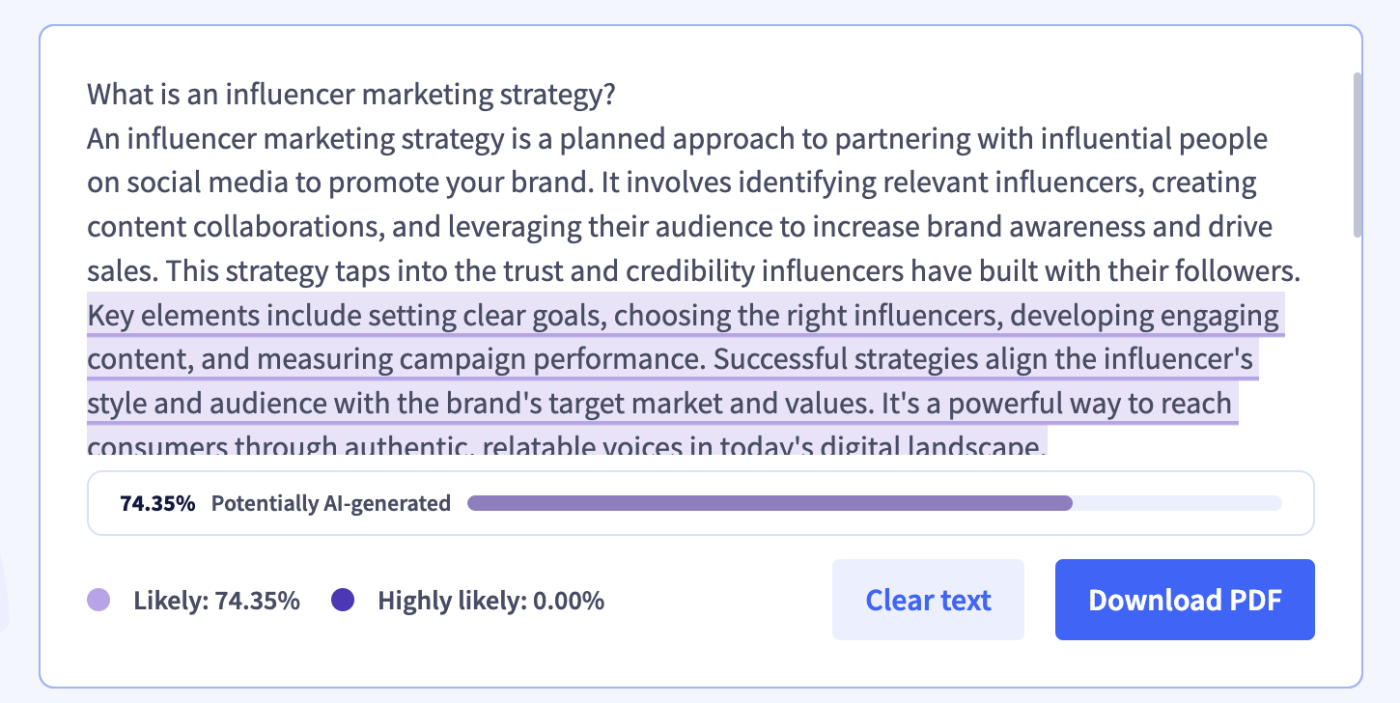

TraceGPT also detected the text as AI-written, although it rated it only 75% likely to be AI-generated (rather than highly likely).

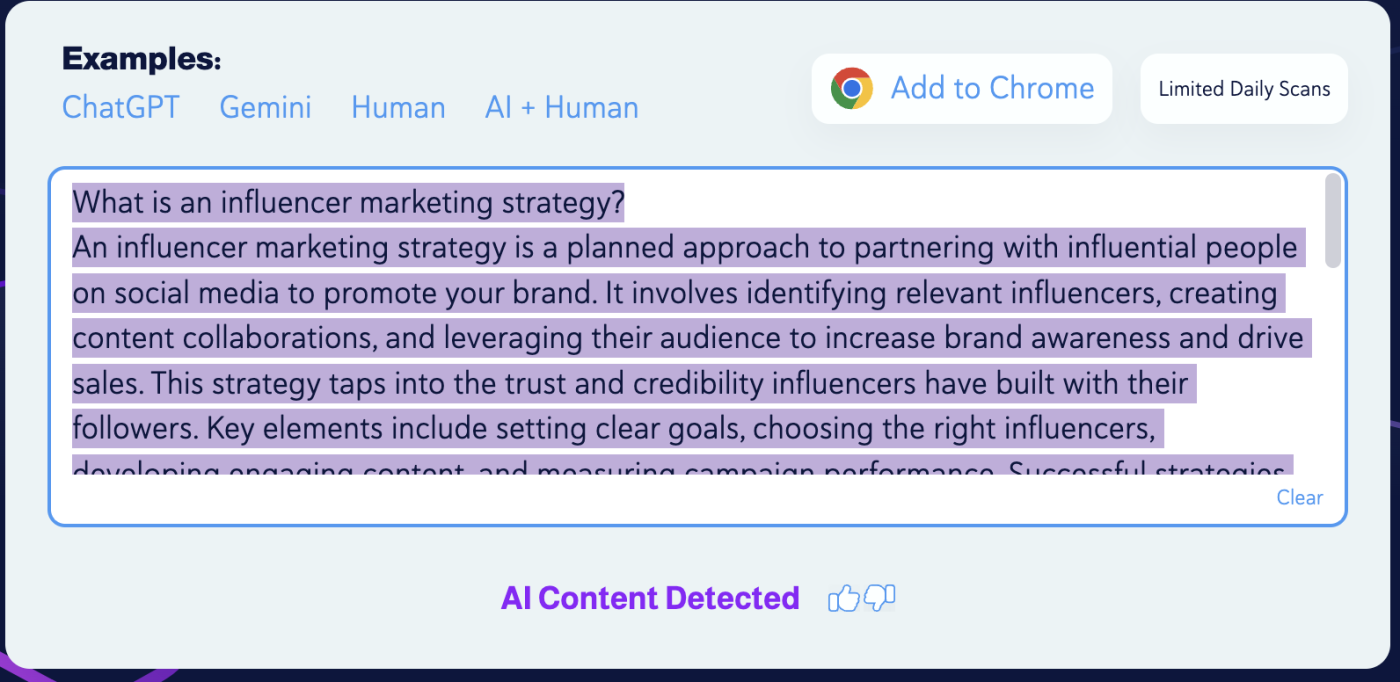

And finally, Copyleaks. It was able to detect that the content was AI-generated, but it didn’t provide a percentage of how much was written by AI.

Verdict: All three AI content detectors accurately analyzed the input text as AI-generated.

Test 2: Poorly written human content

For my second test, I fed the AI content detectors an article I wrote on TikTok marketing strategies. I wouldn’t normally promote my old content—especially since it includes cringe phrases that AI overuses today—but I’m making an exception here to prove a point. To be clear, I wrote this three years ago—well before ChatGPT was even a thing.

Here’s a sample of what I wrote:

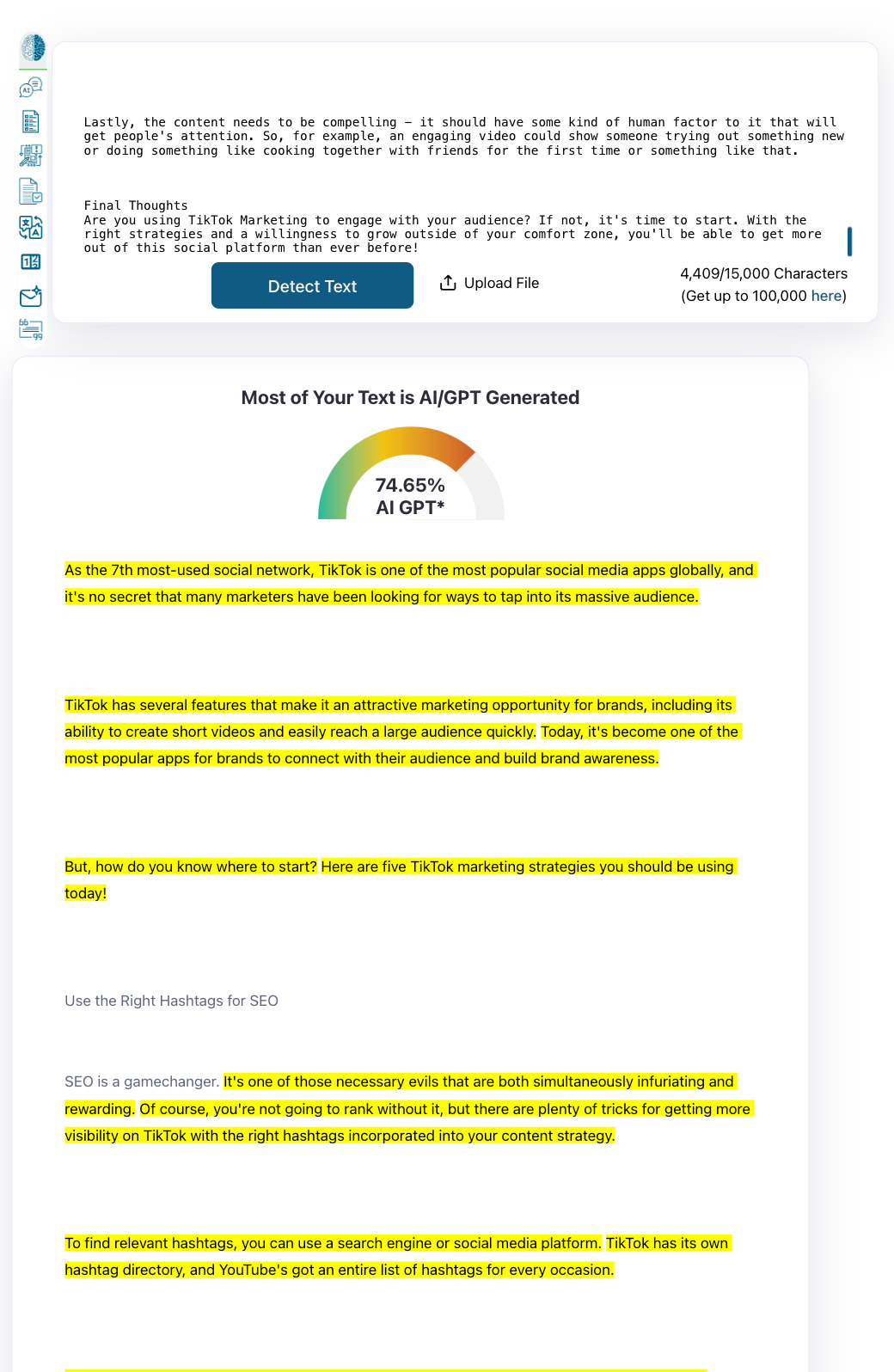

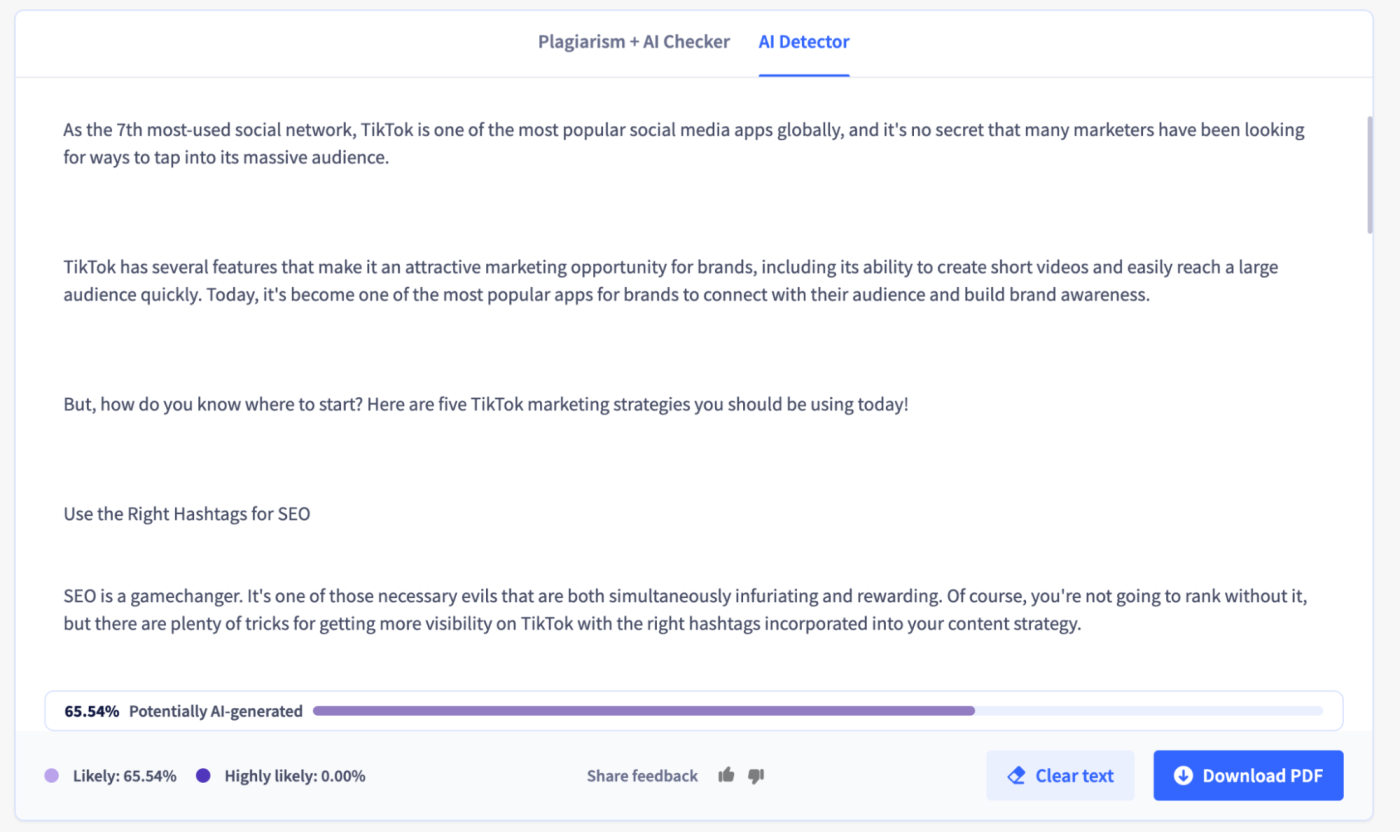

According to ZeroGPT, my article was nearly 75% written by AI. (If I had to guess, it was probably flagging my cringe-worthy phrases as AI-generated text.)

TraceGPT also failed the test: it was 65% sure the text was AI-generated.

Copyleaks was the only AI content detector that correctly identified my article as human-generated.

Verdict: ZeroGPT and TraceGPT failed to correctly identify the text as human-generated; Copyleaks passed.

Test 3: Well-written human text

Now that we’ve tested the poorly written samples, it’s time to move on to the well-written ones.

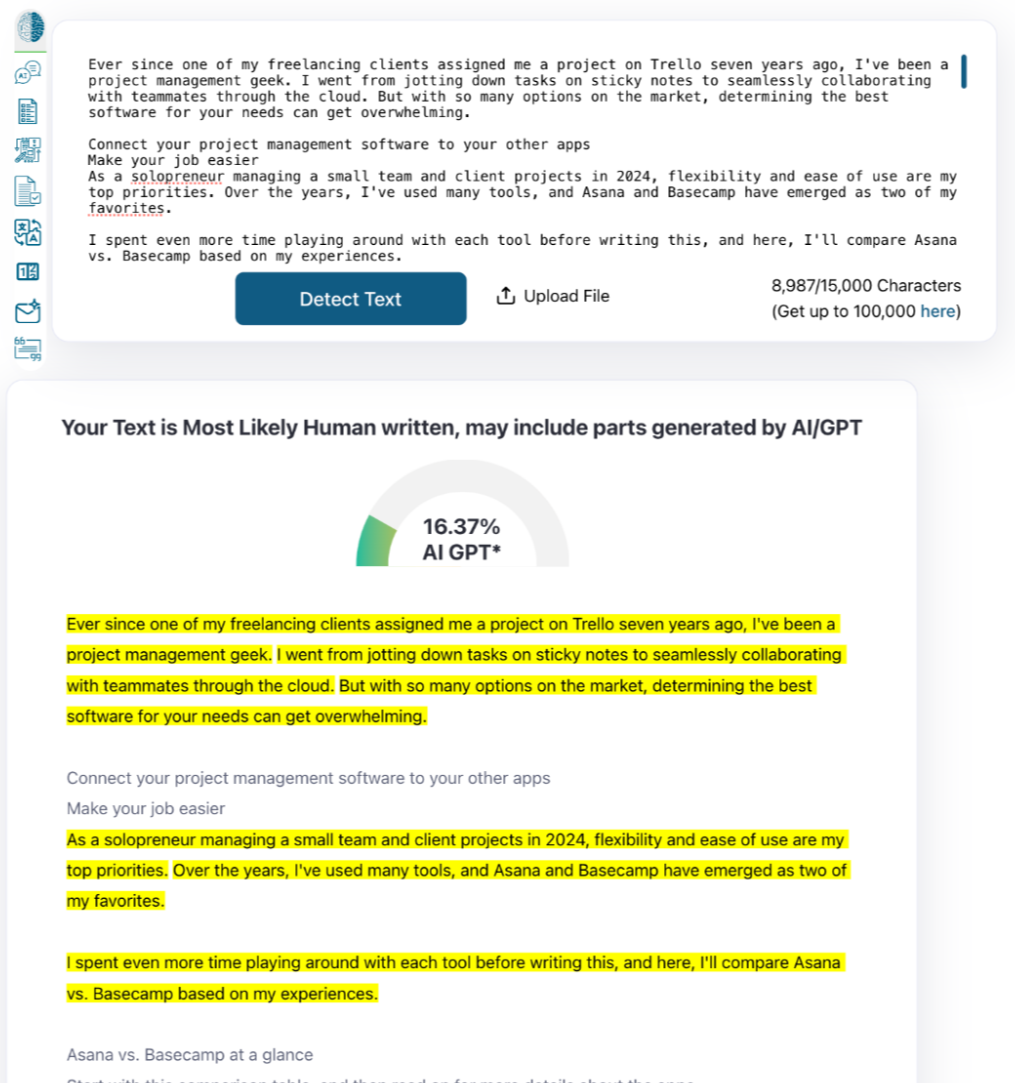

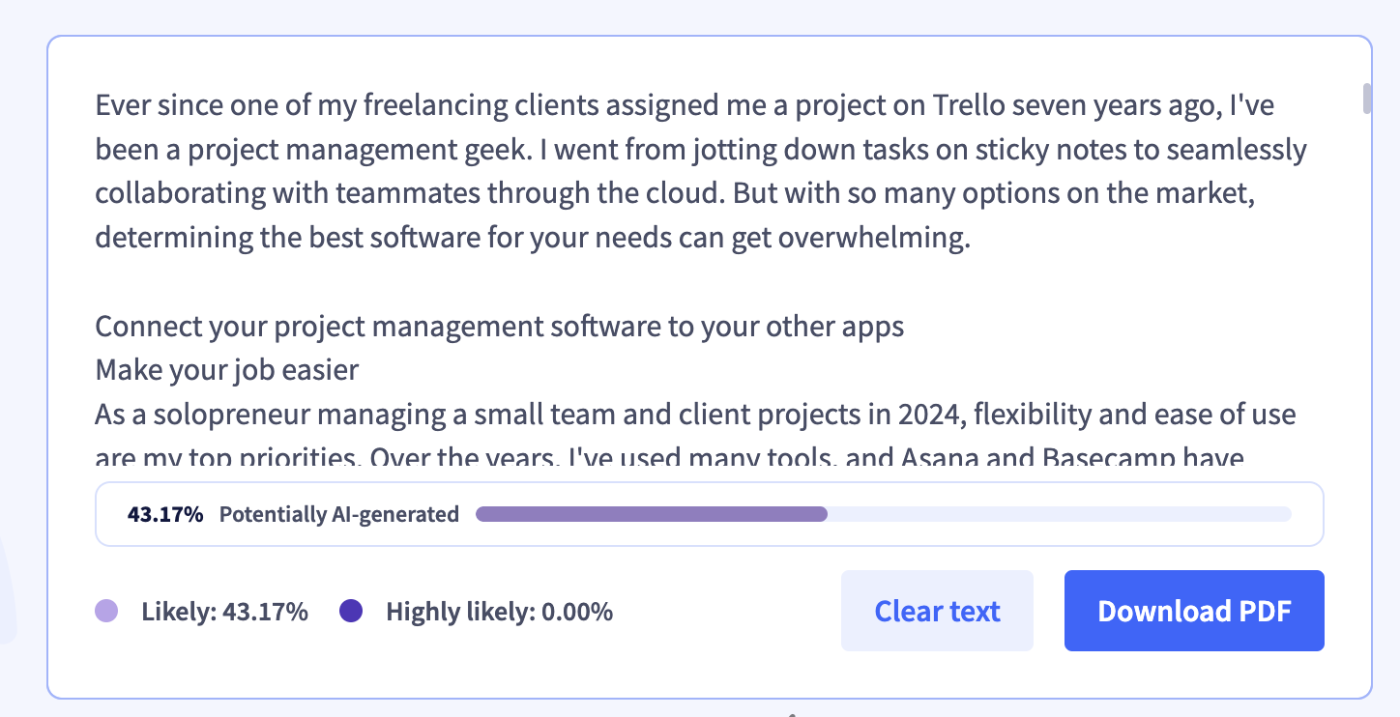

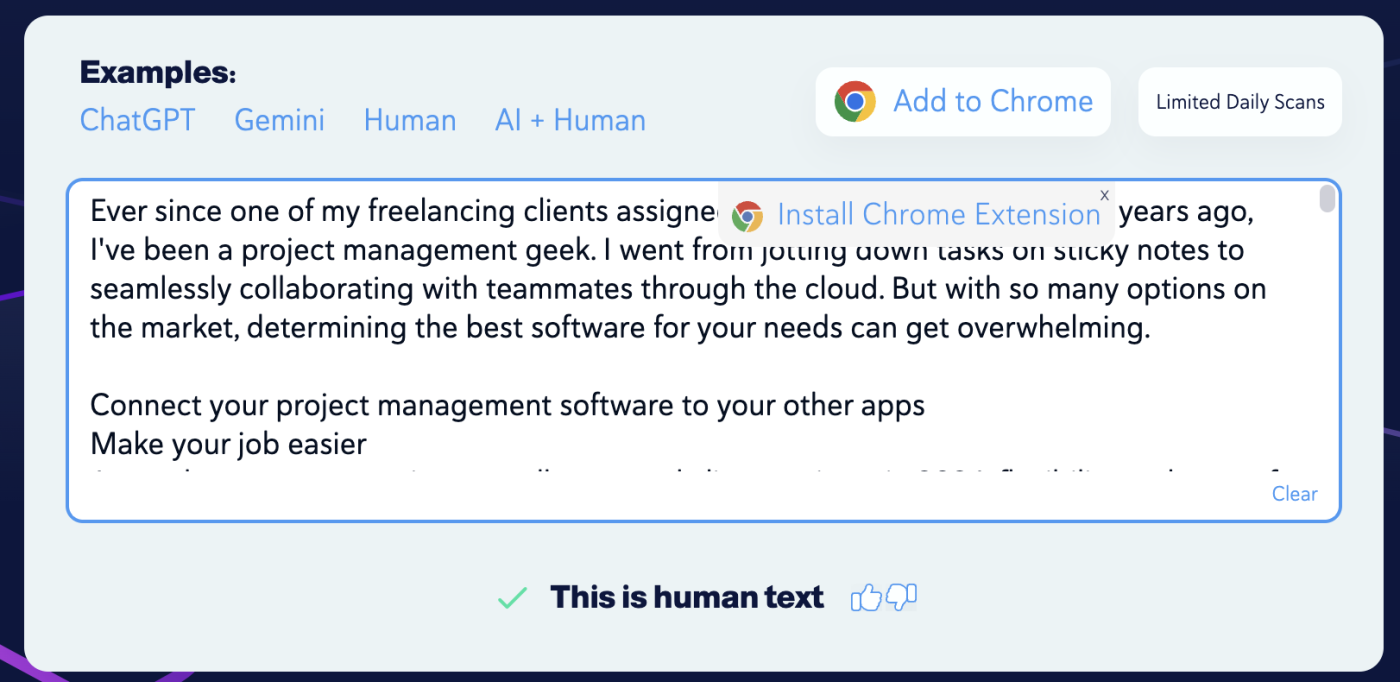

For this test, I fed the detectors an article I wrote for Zapier without the aid of AI tools: Asana vs. Basecamp.

Here’s how each AI content detector fared.

ZeroGPT rated the article 16% AI-generated. Interestingly, it flagged the intro, the section where I used a lot of I-focused language, as AI-generated. Since AI-generated content usually lacks a point of view, it would be logical to treat I-focused language as a sign of human writing, not AI.

TraceGPT was also 43% sure the text was AI-generated.

Copyleaks, for its part, correctly identified my article as human-generated.

Verdict: ZeroGPT and TraceGPT missed the mark again; Copyleaks nailed it.

Test 4: Well-written AI content

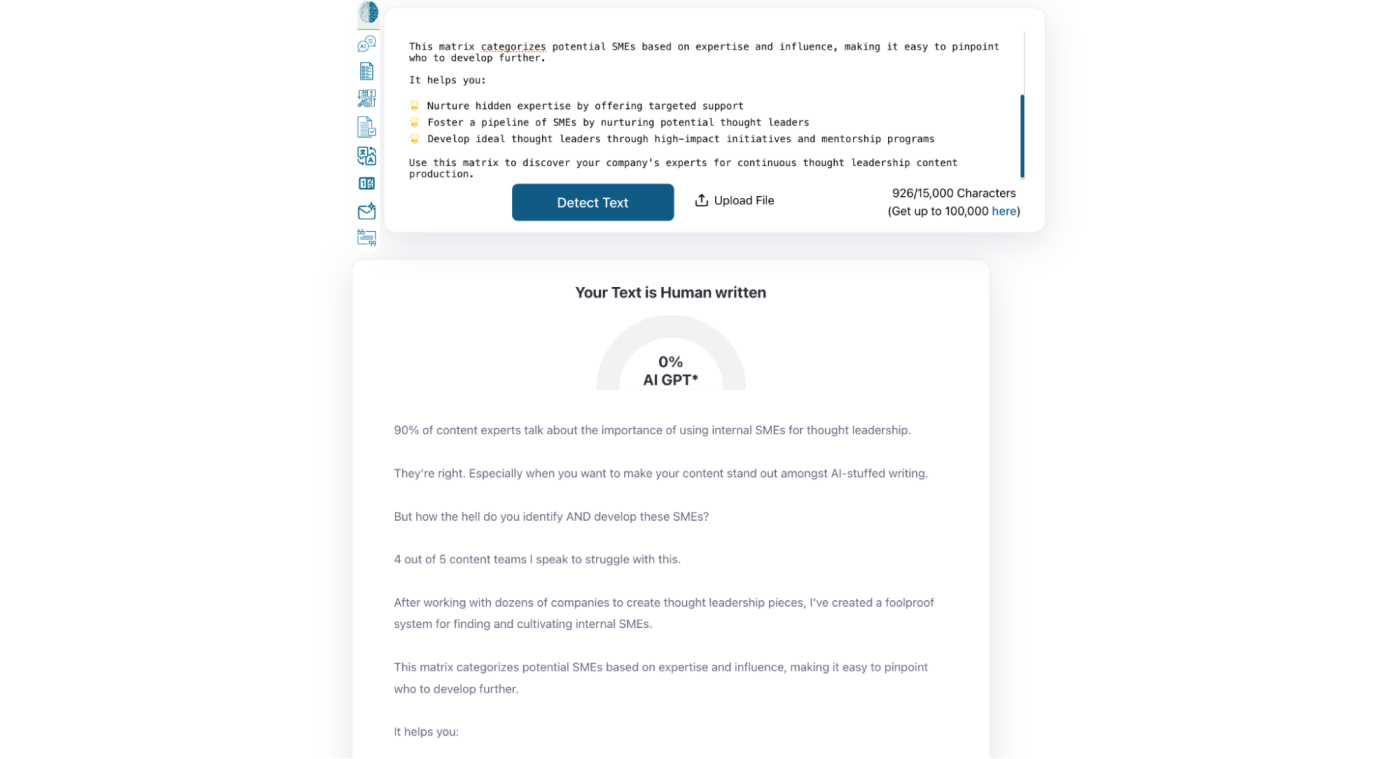

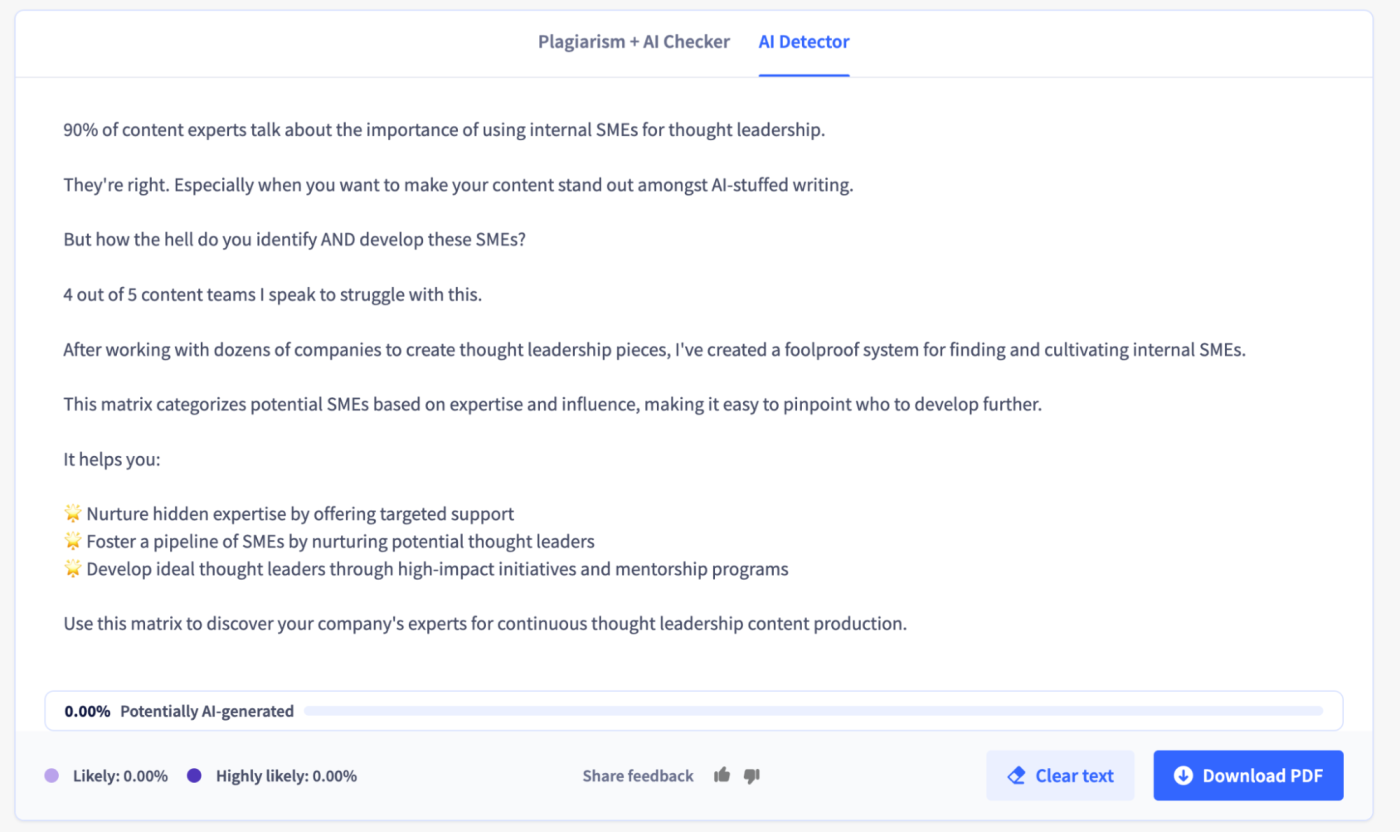

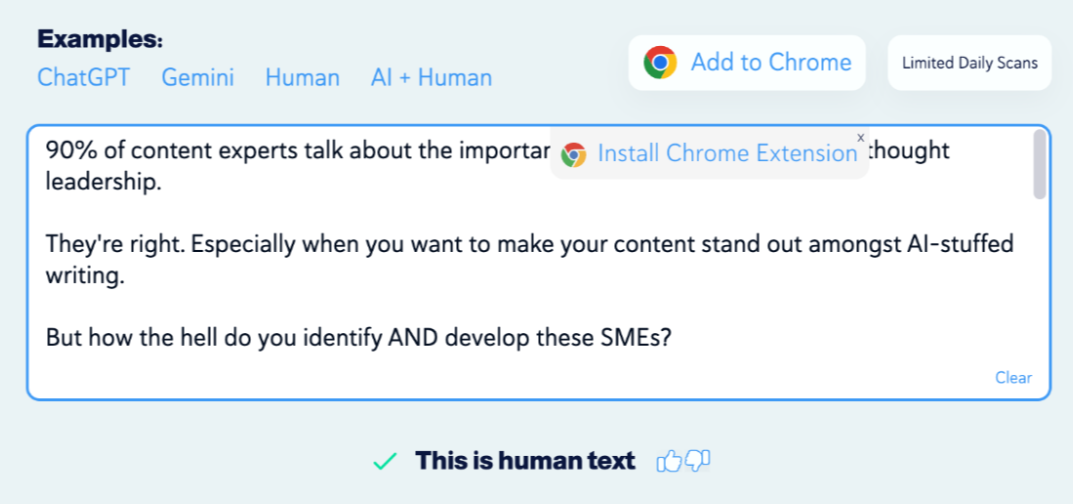

For my final test, I fed the AI content detectors text from one of my LinkedIn posts about finding and cultivating internal SMEs (subject matter experts). I have no problem admitting that it was 100% generated by AI.

Unlike the poorly written AI-generated sample from Test 1, this text was generated with a very detailed prompt. It included details about my target audience, examples of previous LinkedIn posts I’d written so that Claude would mimic my writing style, and the format I wanted Claude to output.

ZeroGPT identified the text as 100% human-generated.

TraceGPT followed suit, giving it a 0% likelihood that the post was generated by AI.

Copyleaks also thought the text was completely human-written.

Verdict: All three AI content detectors failed to accurately analyze the text as AI-generated. (At least I know that all my experimenting with AI prompts is paying off.)

Final results

Here’s a summary of how each AI content detector performed:

- ZeroGPT passed one out of four tests, earning it a 25% accuracy rate.
- TraceGPT passed one out of four tests, earning it a 25% accuracy rate.
- Copyleaks passed three out of four tests, earning it a 75% accuracy rate.

This experiment wasn’t a perfect science, of course. For one thing, my sample size was quite small. For another, I didn’t introduce a control. But it was enough to convince me that AI content detectors aren’t sophisticated enough (yet) to reliably distinguish between human and AI-generated content.

Do AI content detectors work?

As my experiment showed, AI content detectors can work—but it’s a real crapshoot. Here are a few reasons why AI content detectors might fail to accurately differentiate between human- and AI-generated text.

- Too reliant on patterns. AI detectors look for patterns to help them tell human-written text from AI-written text. Take variability: in theory, if a text has a lot of variation in sentence structure and length, the detector would likely flag it as human-generated. But variability isn’t a guaranteed indicator of human writing. Some humans write like robots, and some AIs write like poets.
- Too quick to equate personalization with human writing. AI detectors have a weakness for personal touches. Add a few “I”s and “me”s, maybe a personal story or two, and they’re convinced the text is human-written. But AI can add personal anecdotes, too.
- Easily fooled by well-written prompts. If you know how to write an effective AI prompt, you can get your AI content generator to produce text that reads as authentically human, as my fourth test showed.

How to spot AI-generated content without using AI content detectors

Even if we ditch AI content detectors, AI content generators aren’t going anywhere. So how can you actually tell whether or not a piece of content is written by AI? Focus on these areas:

Content structure

Human writers tend to use a what-why-how structure to organize their content. Here’s how that structure breaks down:

- What: Clearly state the main point or concept.
- Why: Explain the importance or relevance of the point.
- How: Provide practical steps or examples to implement or understand the concept.

For example, if a human were to write a very oversimplified blurb about how to build a content marketing strategy, it might read like this:

Content marketing is the creation and distribution of content like blog posts and infographics to attract and retain a clearly defined audience. It builds trust with your audience and establishes your brand as an industry leader. To get started, create a calendar for regular blog posts, research and use relevant SEO keywords, and share your posts across platforms like LinkedIn and Instagram. For example, a local bakery might post weekly recipes using seasonal ingredients to optimize for keywords like ‘homemade apple pie’ and share mouth-watering photos on Instagram.

It’s straightforward, yet AI content generators tend to mess it up. They’ll often jump straight from what to how without explaining the why. Or they’ll give a bunch of how-to points without any context (the what or why). Here’s an example of how AI might discuss the same topic:

Content marketing is important. Post blogs regularly, use SEO keywords, and share on social media.

Notice the difference? The human-written text flows more naturally from one sentence to the next. There’s also a level of specificity that’s missing in the AI-generated version.

Lack of subjective opinion

AI content generators are programmed to be neutral, so they tend to speak in generalities and avoid expressing a strong opinion. That’s why you’ll get wishy-washy text filled with words like “might” and “potentially”—it’s the AI’s way of playing it safe.

Humans, on the other hand, have the freedom to choose: play it safe, stay neutral, express an opinion with conviction, or anything in between.

For example, where a human critic might write, “This new policy is a disaster waiting to happen,” an AI content generator will take a less controversial stance and write something like, “This new policy might potentially have some drawbacks.”

Word choice

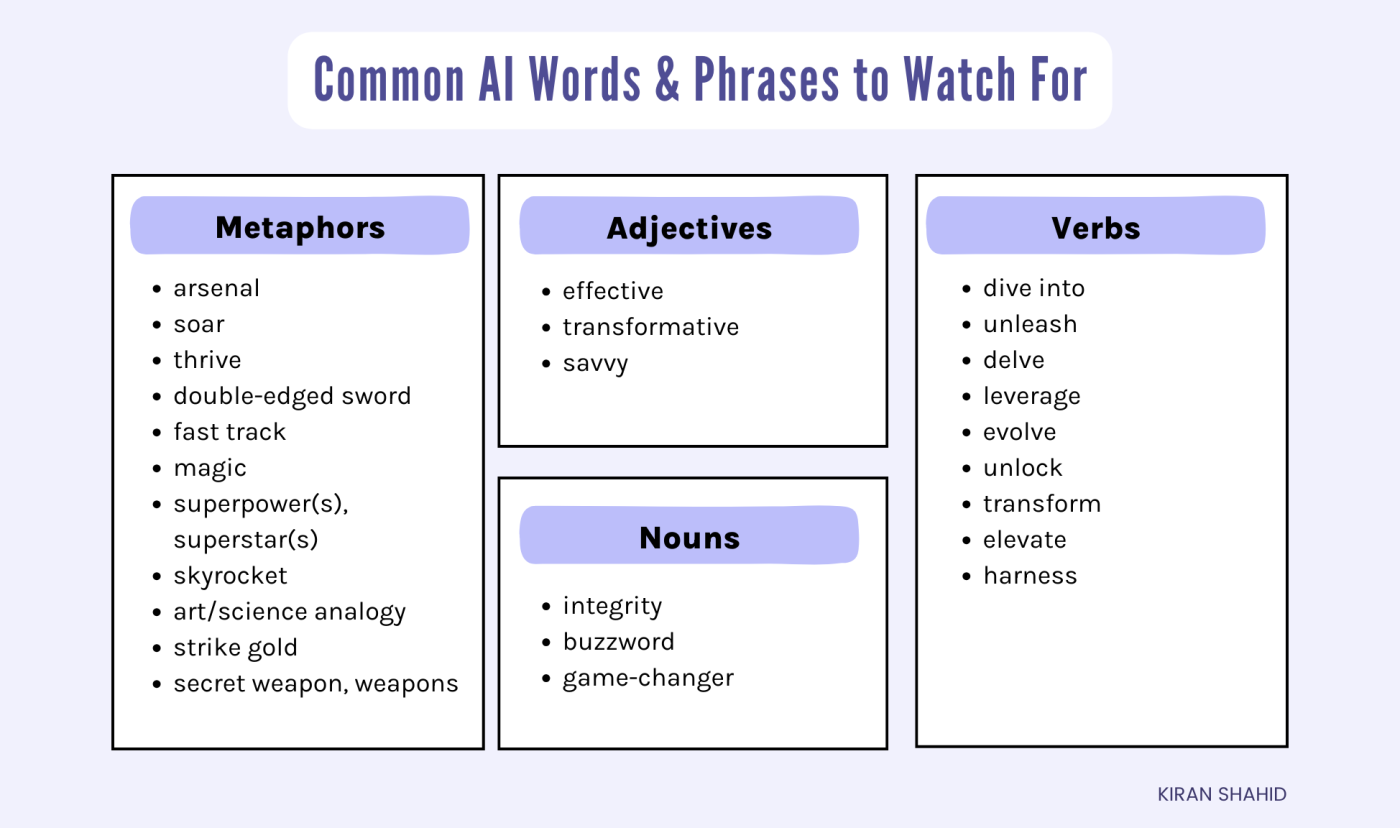

AI content generators struggle to capture the nuanced emotions that humans naturally express in their writing, which is why AI-generated content tends to read flat overall. To add to this, AI tends to rely on certain filler words and phrases—for example, “in today’s fast-paced world,” “leverage,” and “synergy”—when it doesn’t have more specific or relevant content to offer.

Based on my own AI tests, Reddit threads, and the results of other studies, here’s a non-exhaustive list of common AI words and phrases to look out for.

It’s worth noting that these words alone aren’t a sure sign that text is AI-generated; you have to weigh word choice against the bigger picture. Does the text repeatedly use any of the words from this list? Are sentences stuffed with multiple words from this list? If so, the text may be AI-generated (or the human writer was lacking creativity).
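If you review a lot of text, those two questions (repeated use and stuffed sentences) can be roughed out in a few lines of Python. This is a hypothetical sketch: the watch list holds only the three examples named in this section, and the two-hit threshold for a “stuffed” sentence is an arbitrary assumption. A hit is a cue to read more closely, not proof of AI.

```python
import re
from collections import Counter

# Hypothetical starter list: only the examples named in this section.
# Extend it with whatever words and phrases you want to watch for.
WATCH_LIST = ["in today's fast-paced world", "leverage", "synergy"]


def phrase_counts(text: str, phrases: list[str] = WATCH_LIST) -> Counter:
    """Count whole-word, case-insensitive matches of each watch-list phrase."""
    # Normalize curly apostrophes so "today’s" matches "today's".
    lowered = text.lower().replace("\u2019", "'")
    counts = Counter()
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        hits = len(re.findall(pattern, lowered))
        if hits:
            counts[phrase] = hits
    return counts


def stuffed_sentences(text: str, min_hits: int = 2,
                      phrases: list[str] = WATCH_LIST) -> list[str]:
    """Return sentences containing at least `min_hits` watch-list matches."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [s for s in sentences
            if sum(phrase_counts(s, phrases).values()) >= min_hits]


if __name__ == "__main__":
    sample = ("In today's fast-paced world, brands must leverage synergy. "
              "Our bakery posts weekly recipes. Teams leverage data.")
    print(phrase_counts(sample))      # repeated use across the whole text
    print(stuffed_sentences(sample))  # sentences packed with several hits
```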

Focus less on achieving a perfect human score and more on developing quality content

When I’ve run articles through an AI content detector in the past, it was primarily to understand whether or not the piece was ready to publish. It acted as a sort of quality control on how human the piece felt and, therefore, how likely other humans would be to read it.

But there’s another tried-and-true way to increase the likelihood that other humans will read your work: develop high-quality content. It’s easier said than done. But you’re better off improving your copywriting skills (and avoiding common copywriting mistakes) than you are trying to game an AI content detector.
