Understanding why massive AI models behave the way they do is as much an art as it is a science. Even the most accomplished technical experts can become perplexed by the unexpected abilities of large language models (LLMs), the fundamental building blocks of AI chatbots like ChatGPT.

It’s not surprising, then, that prompt engineering has emerged as a hot job in generative AI, with some organizations offering lucrative salaries of up to $335,000 to attract top-tier candidates.

But what even is this job? Here, I’ll cover everything you need to know about prompt engineering and how you can become a prompt engineer without a technical background.


What is prompt engineering?

Prompt engineering is the process of carefully crafting prompts (instructions) with precise verbs and vocabulary to improve machine-generated outputs in ways that are reproducible.

Professional prompt engineers spend their days trying to figure out what makes AI tick and how to align AI behavior with human intent. But prompt engineering isn’t limited to people who are paid to do it. If you’ve ever reworded a prompt to get better responses from ChatGPT, for example, you’ve done some prompt engineering.

Why is prompt engineering important?

In a way, a skilled prompt engineer compensates for an AI’s limitations: AI chatbots can be great at syntax and vocabulary, but have no first-hand experience of the world, making AI development a multidisciplinary endeavor.

Some experts question the value of the role longer term, however, as it becomes possible to get better outputs from clumsier prompts. But there are countless use cases for generative tech, and quality standards for AI outputs will keep going up. This suggests that prompt engineering as a job (or at least a function within a job) will continue to be valuable and won’t be going away any time soon.

Why prompt engineering isn’t strictly for technical people

Exceptional prompt engineers possess a rare combination of discipline and curiosity, but when developing good prompts, they also draw on universal skills that aren’t confined to the domain of computer science.

The rise of prompt engineering is opening up certain aspects of generative AI development to creative people with a more diverse skill set, and a lot of it has to do with no-code innovations. Posting on January 24, 2023, Andrej Karpathy (@karpathy), Tesla’s former director of AI, stated that the “hottest new programming language is English.”

While some organizations—like the Boston Children’s Hospital—have posted job ads seeking prompt engineers with several years of engineering, developer, or coding experience, a strong engineering background isn’t always a requirement for the role.

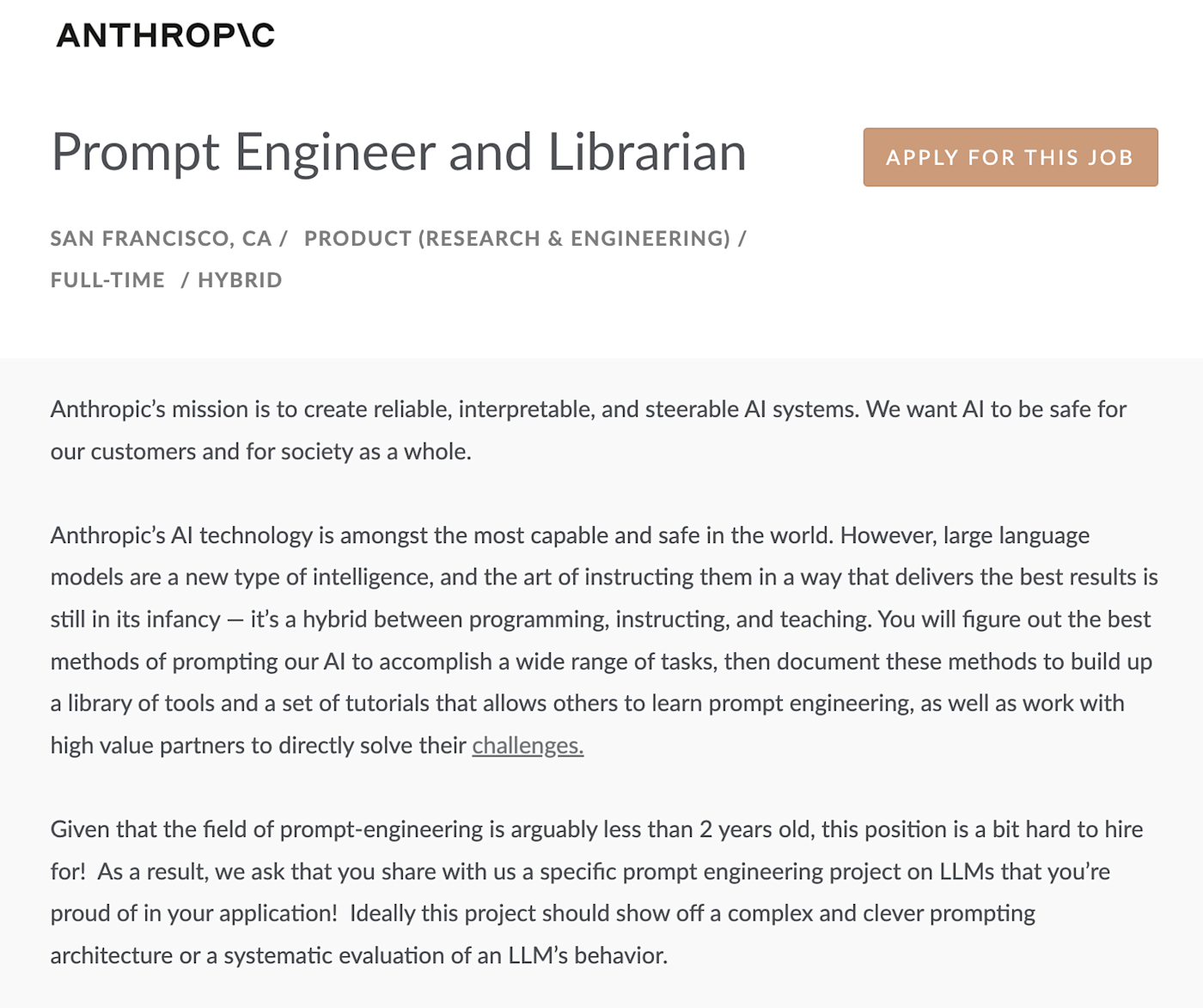

Anna Bernstein, for example, was a freelance writer and historical research assistant before she became a prompt engineer at Copy.ai. And in its job posting for a prompt engineer, Anthropic mentions that a “high level familiarity” with the operation of LLMs is desirable, but that they encourage candidates to apply “even if they do not meet all of the criteria.”

Prompt engineering techniques and examples

Prompt engineering is constantly evolving as researchers develop new techniques and strategies. While not all these techniques will work with every LLM—and some get pretty advanced—here are a few of the big methods that every aspiring prompt engineer should be familiar with.

Few-shot prompting

In machine learning, a “zero-shot” prompt is one where you give no examples whatsoever, while a “few-shot” prompt is one where you give the model a couple of examples of what you expect it to do. It can be an incredibly powerful way to steer an LLM, as well as to demonstrate how you want data formatted.
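To make the difference concrete, here’s a minimal Python sketch that assembles a few-shot prompt as plain text. The sentiment-labeling task, the `Review:`/`Sentiment:` format, and the `build_few_shot_prompt` helper are all hypothetical, chosen only for illustration. The code doesn’t call any model; the resulting string is what you’d paste into a chatbot or send to whatever model API you use.

```python
# Hypothetical helper: builds a few-shot prompt string. The task and the
# "Review:/Sentiment:" format are illustrative assumptions.

def build_few_shot_prompt(examples, query, instruction):
    """Join an instruction, worked examples, and a new query into one prompt.

    Each example is an (input_text, expected_output) pair. The last line is
    left open so the model continues in the format the examples establish.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [
    ("The battery lasts all day and the screen is gorgeous.", "positive"),
    ("It stopped working after a week.", "negative"),
]

prompt = build_few_shot_prompt(
    examples,
    query="Setup took five minutes and it just works.",
    instruction="Label the sentiment of each review as positive or negative.",
)
print(prompt)
```

Passing an empty `examples` list turns the same prompt into a zero-shot one. Leaving the final `Sentiment:` line open nudges the model to reply with a single label in exactly the format the examples demonstrate.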

Chain-of-thought (CoT) prompting

With chain-of-thought prompting, you ask the language model to explain its reasoning. Research has shown that in sufficiently large models, it can be very effective at getting the right answers to math, reasoning, and other logic problems.

Zero-shot chain-of-thought prompting is as simple as adding “explain your reasoning” to the end of any complex prompt.

Few-shot chain-of-thought prompting can be even more effective. It involves giving the model examples of the logical steps you expect it to make.
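Both chain-of-thought variants can be sketched the same way. In this hypothetical Python example, `zero_shot_cot` simply appends the “explain your reasoning” instruction, while `few_shot_cot` prepends worked examples that spell out each logical step before giving the answer. The cafeteria word problem is an illustrative assumption, and neither function calls a model; they only build the prompt text.

```python
# Hypothetical helpers that build chain-of-thought (CoT) prompts as text.

COT_SUFFIX = "Explain your reasoning."


def zero_shot_cot(question):
    """Zero-shot CoT: ask for the reasoning without showing any examples."""
    return f"{question}\n{COT_SUFFIX}"


def few_shot_cot(worked_examples, question):
    """Few-shot CoT: show questions answered step by step, then the new question."""
    parts = []
    for q, reasoning, answer in worked_examples:
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    # Leave the final answer open so the model reasons in the same style.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Illustrative worked example: each reasoning step is written out explicitly.
worked = [
    (
        "The cafeteria had 23 apples. It used 20 to make lunch and bought 6 more. "
        "How many apples does it have?",
        "It started with 23 apples. After using 20, it had 23 - 20 = 3. "
        "After buying 6 more, it had 3 + 6 = 9.",
        "9",
    ),
]

print(zero_shot_cot("What NFL team won the Super Bowl in the year Justin Bieber was born?"))
print()
print(few_shot_cot(worked, "Roger has 5 tennis balls. He buys 2 cans with 3 balls each. "
                           "How many tennis balls does he have now?"))
```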

Least-to-most prompting

Least-to-most prompting is similar to chain-of-thought prompting, but it involves breaking a problem down into smaller subproblems and prompting the AI to solve each one sequentially. It’s useful when you need an LLM to do something that takes multiple steps, where later steps depend on earlier answers. Asked to tackle such a problem all at once, an AI can easily get it wrong.

However, if you break the problem down into discrete steps and ask the model to solve each one separately, it can reach the right answer.
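Here’s a minimal Python sketch of the least-to-most loop, assuming you’ve already split the problem into subquestions yourself. `llm` stands in for any function that sends a prompt to a model and returns its reply. The `fake_llm` stub with canned answers and the slide word problem are hypothetical and exist only so the example runs without a real model.

```python
from typing import Callable, List, Tuple


def least_to_most(
    llm: Callable[[str], str],
    subquestions: List[str],
    context: str = "",
) -> List[Tuple[str, str]]:
    """Solve subquestions in order, feeding every earlier answer into the next prompt."""
    transcript = context.strip()
    solved = []
    for sub in subquestions:
        # Build the next prompt on top of everything solved so far.
        prompt = f"{transcript}\n\nQ: {sub}\nA:".strip()
        answer = llm(prompt).strip()
        solved.append((sub, answer))
        # Carry the answered step forward for the following subquestions.
        transcript = f"{prompt} {answer}"
    return solved


# Hypothetical stand-in for a real model call; it records prompts and
# returns canned replies.
prompts_seen: List[str] = []


def fake_llm(prompt: str) -> str:
    prompts_seen.append(prompt)
    if prompt.endswith("How long does each trip take?\nA:"):
        return "4 + 1 = 5 minutes."
    return "15 / 5 = 3, so she can slide 3 times."


problem = (
    "It takes Amy 4 minutes to climb to the top of a slide and 1 minute to slide down. "
    "The slide closes in 15 minutes. How many times can she slide before it closes?"
)
steps = least_to_most(
    fake_llm,
    ["How long does each trip take?", "How many times can she slide before it closes?"],
    context=problem,
)
for question, answer in steps:
    print(f"{question} -> {answer}")
```

The key design choice is that each prompt carries the earlier questions and answers forward, so the model builds on its own prior steps instead of solving everything in one leap.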

Self-consistency

Self-consistency is an advanced form of chain-of-thought prompting developed by Wang et al. (2022). It involves giving the AI several few-shot examples of step-by-step reasoning, having it generate multiple different reasoning paths for the same question, and then selecting the most consistent answer, that is, the one it arrives at most often.

For example, to answer the kinds of multi-hop questions in the HotpotQA dataset, the researchers put this at the start of the prompt:

Q: Which magazine was started first: Arthur’s Magazine or First for Women?

A: Arthur’s Magazine started in 1844. First for Women started in 1989. So Arthur’s Magazine was started first. The answer is Arthur’s Magazine.

Q: The Oberoi family is part of a hotel company that has a head office in what city?

A: The Oberoi family is part of the hotel company called The Oberoi Group. The Oberoi Group has its head office in Delhi. The answer is Delhi.

Q: What nationality was James Henry Miller’s wife?

A: James Henry Miller’s wife is June Miller. June Miller is an American. The answer is American.

Q: The Dutch-Belgian television series that “House of Anubis” was based on first aired in what year?

A: “House of Anubis” is based on the Dutch–Belgian television series Het Huis Anubis. Het Huis Anubis first aired in September 2006. The answer is 2006.

The researchers used similar prompts to improve performance on other logic, reasoning, and mathematical benchmarks.
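The “select the most consistent answer” step amounts to a majority vote. The Python sketch below assumes you’ve already sent a few-shot prompt like the one above to a model several times (with some sampling randomness, so the reasoning varies) and collected the completions. The `samples` list is made up for illustration, and the parsing assumes each completion ends with “The answer is X.”, as the examples do.

```python
import re
from collections import Counter
from typing import List, Optional

# Matches a final "The answer is X." at the end of a completion.
ANSWER_PATTERN = re.compile(r"The answer is\s+(.+?)\.?\s*$")


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final answer out of a completion, or return None if absent."""
    match = ANSWER_PATTERN.search(completion.strip())
    return match.group(1).strip() if match else None


def self_consistent_answer(completions: List[str]) -> Optional[str]:
    """Majority vote over the answers found in several sampled completions."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    # most_common orders equal counts by first occurrence, so ties go to
    # the answer that appeared first.
    return Counter(answers).most_common(1)[0][0]


# Made-up completions standing in for several samples from a model.
samples = [
    "Arthur's Magazine started in 1844. First for Women started in 1989. "
    "The answer is Arthur's Magazine.",
    "First for Women started in 1989, Arthur's Magazine in 1844. The answer is Arthur's Magazine.",
    "First for Women is older. The answer is First for Women.",
]
print(self_consistent_answer(samples))
```

Here two of the three reasoning paths agree, so the vote settles on Arthur’s Magazine even though one sample went astray. Completions without a parsable answer are simply skipped.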

Even more advanced prompting techniques

Once you get past things like self-consistency, you get into even more advanced prompt engineering techniques that require a much deeper understanding of how large language models work, as well as the ability to work with structured data and even prompt models directly using the command line.

A lot of these techniques are being developed by researchers to improve LLM performance on specific benchmarks and figure out new ways to develop, train, and work with AI models. While they may be important in the future, they won’t necessarily help you prompt ChatGPT right now.

Some of these techniques are:

If you want to dive deeper into the new frontiers of prompt engineering and model design, check out resources like DAIR.AI’s prompt engineering guide.

5 non-tech prompt engineering skills (that you probably already have)

The day-to-day activities of a prompt engineer should be of interest to anyone who interacts with generative AI for two very good reasons: (1) It illuminates the tech’s capabilities and limitations. (2) It gives people a good understanding of how they can use skills they already possess to have better conversations with AI.

Here’s a look at five non-tech skills contributing to the development of AI technology via the multidisciplinary field of prompt engineering.

1. Communication

Like project managers, teachers, or anybody who regularly briefs other people on how to successfully complete a task, prompt engineers need to be good at giving instructions. Most people need a lot of examples to fully understand instructions, and the same is true for AI.

Edward Tian, who built GPTZero, an AI detection tool that helps uncover whether a high school essay was written by AI, shows examples to large language models so they can write in different voices.

Of course, Tian is a machine learning engineer with deep technical skills, but this approach can be used by anyone who’s developing a prompt and wants a chatbot to write in a particular way, whether that’s in the voice of a seasoned professional or an elementary school student.

2. Subject matter expertise

Many prompt engineers are responsible for tuning a chatbot for a specific use case, such as healthcare research.

This is why prompt engineering job postings are cropping up requesting industry-specific expertise. For example, Mishcon de Reya LLP, a British law firm, had a job opening for a GPT Legal Prompt Engineer. They were seeking candidates who have “a deep understanding of legal practice.”

Subject matter expertise, whether it’s in healthcare, law, marketing, or carpentry, is useful for crafting powerful prompts. The devil’s in the details, and real-world experience counts for a lot when talking with AI.

3. Language

For an AI to succeed, it needs to be given clear intent. That’s why people who are adept at using verbs, vocabulary, and tenses to express an overarching goal are well equipped to improve AI performance.

When Anna Bernstein started her job at Copy.ai, she found it useful to see her prompts as a kind of magical spell: one wrong word produces a very different outcome than intended. “As a poet, the role […] feeds into my obsessive nature with approaching language. It’s a really strange intersection of my literary background and analytical thinking,” she said in this Business Insider interview.

Instead of using programming languages, AI prompting uses prose, which means that people should unleash their inner linguistics enthusiast when developing prompts.

4. Critical thinking

Generative AI is great at synthesizing vast amounts of information, but it can hallucinate (that’s a real technical term). AI hallucinations can occur, for example, when a chatbot has been trained or designed with poor-quality or insufficient data. When a chatbot hallucinates, it simply spews out false information (in a rather authoritative, convincing way).

Prompt engineers poke at this weakness and then work out how to steer the bot toward better answers. For example, Riley Goodside, a prompt engineer at the AI startup Scale AI, got the wrong answer when he asked a chatbot the following question: “What NFL team won the Super Bowl in the year Justin Bieber was born?” He then asked the chatbot to list a chain of step-by-step logical deductions for producing the answer. Eventually, it corrected its own error.

This underscores that having the right level of familiarity with the subject matter is key: it’s probably not a good idea for someone to have a chatbot produce something they can’t reliably fact-check.

5. Creativity

Trying new things is the very definition of creativity, and it’s also the essence of good prompt engineering. Anthropic’s job posting states that the company is looking for a prompt engineer who has “a creative hacker spirit,” among other qualifications.

Yes, being precise with language is important, but a little experimentation also needs to be thrown in. The larger the model, the greater the complexity and, in turn, the more room for unexpected and sometimes amazing results.

By trying out a variety of prompts and then refining those instructions based on the results, generative AI users can increase the probability of coming up with something truly unique.

Prompt engineering tips and best practices

Prompt engineering is all about taking a logical approach to creating prompts that guide an AI model toward the most correct response possible. Simply bearing that in mind, slowing down, and structuring your prompt logically is the most important bit of advice I can give you.

Otherwise, some things to consider when you’re creating prompts:

- The more barebones the LLM you use, the more effective advanced forms of prompt engineering are likely to be. ChatGPT (especially if you use GPT-4) does a lot to parse and understand your prompts and decide how to approach them, so your attempts to steer it may not work as well as they would with something like Llama 2 70B running locally on your own computer.

- A lot of advanced prompt engineering techniques involve doing more and pushing the LLM further, rather than selecting better words. You give the AI more examples, ask it to break things down into more steps, or average out the results of multiple attempts. If you find yourself getting stuck, consider adding another example or breaking the task down into even more discrete steps.

- A huge amount of advanced prompt engineering is about trying to overcome every LLM’s limited ability to actually reason through a complex problem. While you can explore CoT, ToT, or any other advanced technique, for most one-off situations, you’ll find it much faster to break the problem down into individual steps yourself rather than trying to find the prompt that will get the AI to do it on its own.

- As capable as AIs now seem, there are some logical reasoning tasks that no amount of careful prompt engineering will get them to manage. Part of prompt engineering is recognizing when an LLM is the wrong tool for the job and finding a way to integrate the right tool with the AI, perhaps using something like Zapier.

Bring your talents to your prompts

Knowing the techniques and strategies that prompt engineers use helps all types of generative AI users. It gives people a better understanding of how to structure their prompts by leveraging their own creativity, expertise, and critical thinking.

Understanding prompt engineering can also help people identify and troubleshoot issues that may arise in the prompt-response process—a valuable approach for anyone who’s looking to make the most out of generative AI.


This article was originally published in May 2023. The most recent update, with contributions from Harry Guinness, was in April 2024.