Decision trees are powerful, interpretable machine learning models that make predictions through a series of if-else conditions. To extract decision rules from a Scikit-Learn decision tree, you can use the export_text() function or traverse the tree_ attribute programmatically.

In this blog, we’ll look at how to extract and interpret decision rules from a Scikit-Learn Decision Tree. We’ll also walk through Python code that visualizes the tree and prints its decision rules in a human-readable form. So let’s get started!

Extraction of decision rules from decision trees is useful for:

- Model Explainability: Helps to understand how a model makes predictions.

- Debugging: Helps to identify the potential biases in the training data.

- Rule-based systems: Lets you reuse the decision rules for automated decision-making outside the machine learning model.

Follow the steps below to extract decision rules:

Step 1: Train a Decision Tree Model

For the first step, we are going to train a decision tree classifier on the Iris dataset.

Example:

Output:

Explanation:

This step loads the Iris dataset and splits it into training and testing sets. It then trains a Decision Tree Classifier with a maximum depth of 3 and prints the model’s training and testing accuracy.
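The training step described above can be sketched as follows. This is a minimal illustration, not necessarily the exact code used for this post: the 80/20 split, the stratified sampling, and random_state=42 are assumptions chosen for reproducibility.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load the Iris dataset (150 samples, 4 features, 3 classes).
iris = load_iris()
X, y = iris.data, iris.target

# Hold out 20% of the data for testing.
# test_size, stratify and random_state are assumed values.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Limit the depth to 3 so the extracted rules stay short and readable.
clf = DecisionTreeClassifier(max_depth=3, random_state=42)
clf.fit(X_train, y_train)

train_acc = clf.score(X_train, y_train)
test_acc = clf.score(X_test, y_test)
print(f"Training accuracy: {train_acc:.3f}")
print(f"Testing accuracy: {test_acc:.3f}")
```

A shallow tree is a deliberate choice here: every extra level can double the number of rules, which quickly makes them harder to read.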

Step 2: Visualizing the Decision Tree

Before we extract the decision rules, let’s visualize the tree structure:

Example:

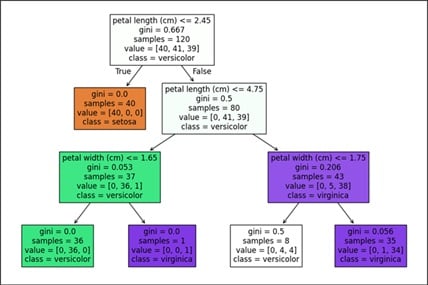

Output:

Explanation:

This step visualizes the trained Decision Tree Classifier using plot_tree(). The resulting matplotlib plot displays feature names, class names, and color-filled nodes.
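A plot like the one described above could be produced with the sketch below. It retrains the model so that it runs on its own; the split settings, the figure size, and the output filename iris_tree.png are illustrative assumptions.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, plot_tree

# Retrain the depth-3 tree (split settings are assumed values).
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42, stratify=iris.target
)
clf = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X_train, y_train)

fig, ax = plt.subplots(figsize=(12, 8))
# filled=True colors each node by its majority class.
annotations = plot_tree(
    clf,
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    filled=True,
    ax=ax,
)
# Save to a file (hypothetical name); use plt.show() in an interactive session.
fig.savefig("iris_tree.png", bbox_inches="tight")
```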

Step 3: Extracting Decision Rules as Text

Now, let’s extract the decision rules in a human-readable format.

Example:

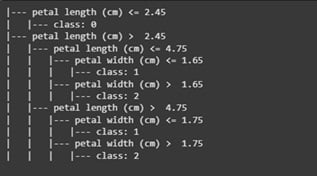

Output:

Explanation:

This step extracts and prints the human-readable decision rules of the trained Decision Tree Classifier. It uses export_text() and passes the feature names so that the rules show column names instead of feature indices.
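As a minimal sketch of this step, assuming the same depth-3 Iris model as before (the split settings are assumed values), export_text() can be called like this:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

# Retrain the depth-3 tree so the snippet runs on its own.
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42, stratify=iris.target
)
clf = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X_train, y_train)

# export_text returns the rules as one indented string.
rules = export_text(clf, feature_names=iris.feature_names)
print(rules)
```

Each indentation level in the printed text corresponds to one level of the tree, and every leaf ends in a "class:" line.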

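Step 4: Extracting Rules as Python if-else Code

Scikit-learn has no built-in function that exports a tree as Python code. However, the fitted model’s tree_ attribute exposes the node arrays (features, thresholds, and child indices), so we can build if-else rules by traversing the tree recursively.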

Example:

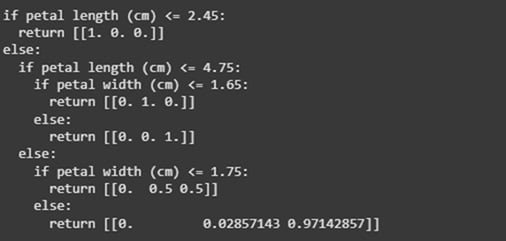

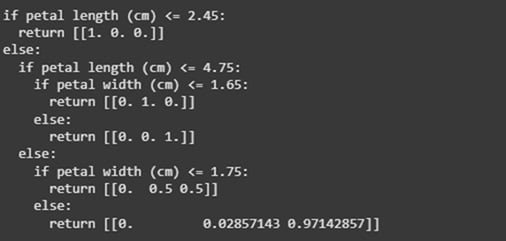

Output:

Explanation:

This step recursively extracts and prints the decision rules from the trained Decision Tree Classifier. Each rule shows the feature-threshold conditions along a path and the class predicted at the end of it.
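One way to implement the recursive traversal is sketched below. The helper tree_to_code and the generated function name predict_iris are hypothetical names introduced for illustration, and feature names are sanitized into valid Python identifiers so the generated source can actually run. A node is treated as a leaf when its left and right child indices are equal (both are -1 for leaves).

```python
import re
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Retrain the depth-3 tree (split settings are assumed values).
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42, stratify=iris.target
)
clf = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X_train, y_train)


def tree_to_code(clf, feature_names, class_names, func_name="predict_iris"):
    """Return Python source for a function that mirrors the fitted tree."""
    tree = clf.tree_
    # Turn names such as "petal width (cm)" into valid identifiers.
    args = [re.sub(r"\W+", "_", name).strip("_") for name in feature_names]
    lines = [f"def {func_name}({', '.join(args)}):"]

    def recurse(node, depth):
        indent = "    " * depth
        left, right = tree.children_left[node], tree.children_right[node]
        if left != right:  # internal node; leaves have both children == -1
            name = args[tree.feature[node]]
            threshold = float(tree.threshold[node])
            lines.append(f"{indent}if {name} <= {threshold!r}:")
            recurse(left, depth + 1)
            lines.append(f"{indent}else:  # {name} > {threshold!r}")
            recurse(right, depth + 1)
        else:
            # The leaf predicts the class with the largest share of samples.
            label = class_names[tree.value[node][0].argmax()]
            lines.append(f"{indent}return {str(label)!r}")

    recurse(0, 1)
    return "\n".join(lines)


source = tree_to_code(clf, iris.feature_names, iris.target_names)
print(source)
```

Because the output is plain Python, it can be pasted into a system that does not have Scikit-learn installed, which is the main appeal of this format.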

Step 5: Converting Rules into a Pandas DataFrame

For getting a more structured view, we’re going to extract the decision rules into a Pandas DataFrame.

Example:

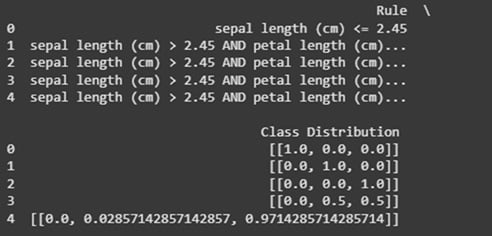

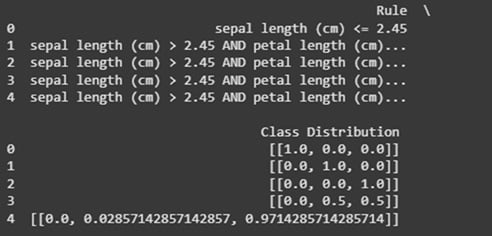

Output:

Explanation:

This step extracts the decision rules from the trained Decision Tree Classifier, formats each root-to-leaf path as a logical condition, and stores the results in a DataFrame. Each row shows a rule together with the class distribution at its leaf.
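The DataFrame described above could be built along the following lines. The helper name rules_to_frame and the column names are hypothetical. The leaf values are normalized to proportions, since tree_.value may hold either raw counts or fractions depending on the Scikit-learn version.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Retrain the depth-3 tree (split settings are assumed values).
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42, stratify=iris.target
)
clf = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X_train, y_train)


def rules_to_frame(clf, feature_names, class_names):
    """Collect one row per leaf: path conditions plus the leaf's class mix."""
    tree = clf.tree_
    rows = []

    def recurse(node, conditions):
        left, right = tree.children_left[node], tree.children_right[node]
        if left != right:  # internal node
            name = feature_names[tree.feature[node]]
            threshold = tree.threshold[node]
            recurse(left, conditions + [f"({name} <= {threshold:.2f})"])
            recurse(right, conditions + [f"({name} > {threshold:.2f})"])
        else:  # leaf: record the full path that leads here
            values = tree.value[node][0]
            proportions = values / values.sum()
            rows.append({
                "rule": " and ".join(conditions),
                "predicted_class": str(class_names[proportions.argmax()]),
                "samples": int(tree.n_node_samples[node]),
                "class_proportions": proportions.round(2).tolist(),
            })

    recurse(0, [])
    return pd.DataFrame(rows)


rules_df = rules_to_frame(clf, iris.feature_names, iris.target_names)
print(rules_df.to_string(index=False))
```

With the rules in a DataFrame, they can be sorted by sample count, filtered by predicted class, or written out with to_csv().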

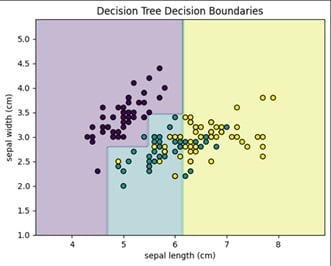

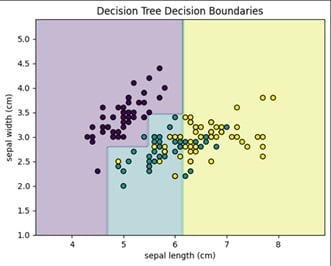

Visualizing Decision Boundaries of a Decision Tree

Understanding how a decision tree partitions the feature space is useful for interpreting the model’s behavior. Since a flat plot has only two axes, we visualize the decision boundaries using two features at a time.

Example:

Output:

Explanation:

This example trains a Decision Tree Classifier on two features of the Iris dataset and visualizes its decision boundaries using a contour plot.
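A contour plot of this kind can be sketched as follows. The choice of the first two features (sepal length and sepal width), the grid step of 0.02, the depth of 3, and the output filename are all assumptions made for illustration.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X = iris.data[:, :2]  # assumed choice: sepal length and sepal width
y = iris.target
clf2 = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X, y)

# Build a dense grid that covers the range of both features.
x_min, x_max = X[:, 0].min() - 0.5, X[:, 0].max() + 0.5
y_min, y_max = X[:, 1].min() - 0.5, X[:, 1].max() + 0.5
xx, yy = np.meshgrid(
    np.arange(x_min, x_max, 0.02), np.arange(y_min, y_max, 0.02)
)

# Predict a class for every grid point, then reshape back to the grid.
Z = clf2.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

fig, ax = plt.subplots(figsize=(8, 6))
ax.contourf(xx, yy, Z, alpha=0.3)                # shaded decision regions
ax.scatter(X[:, 0], X[:, 1], c=y, edgecolor="k")  # the actual samples
ax.set_xlabel(iris.feature_names[0])
ax.set_ylabel(iris.feature_names[1])
ax.set_title("Decision boundaries of a depth-3 tree")
# Hypothetical filename; use plt.show() in an interactive session.
fig.savefig("decision_boundaries.png", bbox_inches="tight")
```

The regions come out as axis-aligned rectangles because every split in a decision tree tests a single feature against a threshold.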

Feature Importance in Decision Trees

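Decision trees show which features are most influential in making predictions. A fitted Scikit-learn tree exposes these scores through its feature_importances_ attribute, which holds the normalized total impurity reduction contributed by each feature.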

Example:

Output:

Explanation:

This example extracts and prints the feature importance scores from the trained Decision Tree classifier. Because the classifier was trained on the first two features of the Iris dataset, only those two features receive a score.
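A short sketch of reading the importance scores, assuming a depth-3 tree trained on the first two Iris features (both of those settings are assumptions):

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X = iris.data[:, :2]  # assumed choice: the first two features only
y = iris.target
clf2 = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X, y)

# feature_importances_ holds one normalized score per input feature.
importances = clf2.feature_importances_
for name, score in zip(iris.feature_names[:2], importances):
    print(f"{name}: {score:.3f}")
```

The scores sum to 1, so each value can be read as that feature's share of the total impurity reduction in the tree.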

Hyperparameter Tuning for Decision Trees

Tuning tree parameters such as max_depth, min_samples_split, and min_samples_leaf controls how far the tree can grow, which helps reduce overfitting and improve performance on unseen data.

Example:

Output:

Explanation:

This example performs hyperparameter tuning on a Decision Tree classifier. It uses GridSearchCV with 5-fold cross-validation to test different values of max_depth, min_samples_split, and min_samples_leaf, and then prints the best combination of parameters.
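The grid search described above might look like the sketch below. The candidate values in param_grid, the accuracy scoring, and the split settings are illustrative assumptions rather than recommended defaults.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42, stratify=iris.target
)

# Candidate values are assumptions chosen to keep the search small.
param_grid = {
    "max_depth": [2, 3, 4, 5],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}

# cv=5 evaluates every combination with 5-fold cross-validation.
grid = GridSearchCV(
    DecisionTreeClassifier(random_state=42),
    param_grid,
    cv=5,
    scoring="accuracy",
)
grid.fit(X_train, y_train)

print("Best parameters:", grid.best_params_)
print(f"Best cross-validated accuracy: {grid.best_score_:.3f}")
```

After fitting, grid.best_estimator_ holds a tree refit on the full training set with the winning parameters, so the rule-extraction steps can be applied to it directly.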

Conclusion

In this blog, we have explored various techniques for working with decision trees in Scikit-Learn. This includes visualizing decision boundaries, understanding feature importance, hyperparameter tuning, extraction of decision rules, and saving them in structured formats. These techniques help to enhance interpretability and also allow seamless integration of decision trees into various machine learning pipelines.

FAQs

1. Why should I extract rules from a decision tree?

Extracting rules from a decision tree improves the transparency of the model and aids debugging. It also allows rule-based decision-making.

2. What function can I use to print decision rules as text?

You can use the function export_text() from Scikit-learn to print rules in a human-readable format.

3. How can I visualize a decision tree?

To visualize a decision tree, you can use plot_tree() from Scikit-learn’s sklearn.tree module, which draws the tree as a matplotlib figure.

4. How can I extract decision rules as Python code?

You can extract decision rules as Python code by accessing the tree_ attribute of a trained Decision Tree. It lets you walk through the tree’s nodes recursively and convert each split into an if-else condition in Python.

5. Can I store decision rules in a Pandas DataFrame?

Yes, you can extract the decision rules from the tree and store them in a Pandas DataFrame, which makes interpretation and analysis easier.