Weight initialization is important for training and improving the performance of the model. You must have heard about weight initialization while working with neural networks in PyTorch. Although weights can be manually overridden later, PyTorch helps to initialize them automatically by default while defining the layers. Inappropriate initialization of weights might hinder learning or possibly prevent the model from being converging. So, how can we do it correctly?

In this blog, we’ll teach you why Initializing Model weights in PyTorch is important and how you can do it successfully. So let’s get started!

Table of Contents

Why is Weight Initialization Important?

Let’s talk about why weight initialization is important. When you are training a neural network, the process of learning the model is determined by the weights. If weights are incorrectly initialized, then

- The model might get stuck in local minima.

- The gradients (vanishing gradients) might become too small or too large (exploding gradients), which makes the training process of the model unstable.

- The network might take too long to converge or fail completely.

A good initialization strategy is always necessary to stabilize the training and speed up convergence. Now, let’s talk about how we can initialize weights in PyTorch!

Methods to Initialize Weights in PyTorch

Given below are the most commonly used approaches for weight initialization:

Method 1: Default PyTorch Initialization

By default, PyTorch initializes weights automatically while it defines the layers, but it can be overridden manually. For example

Example:

Output:

Explanation:

From the output, we can observe that PyTorch initializes the weights of nn.Linear layers use the Kaiming Uniform Initialization by default.

Method 2: Xavier (Glorot) Initialization in PyTorch

This initialization technique works well for sigmoid and tanh activation functions. This helps to ensure that the variance remains stable across the layers.

Example:

Output:

Explanation:

The above code is used to initialize the weights of all nn.linear layers in a PyTorch model. It uses a uniform distribution (-0.1 to 0.1) and sets the biases using a normal distribution. It has a mean of 0 and a standard deviation of 0.01.

Method 5: Custom Initialization in Python

Now, if you want to have full control over the initialization process, you can define custom functions.

Example:

Output:

Explanation:

The above code is used to initialize all nn.linear layer weights to 0.5 and biases to 0 in a PyTorch model.

Comparison of several modes of weight initialization using the same Neural Network(NN) architecture.

To compare different weight initialization methods by using the same Neural Network(NN) architecture in PyTorch, you can follow the steps given below:

Step 1: Define your Neural Network

You have to create a simple neural network that will be used for initialization methods.

Example:

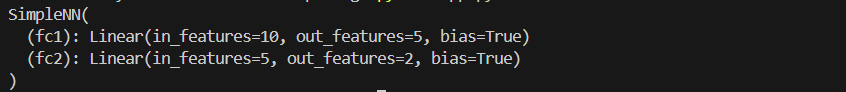

Explanation:

The above code snippet is used to define only the structure of the neural network. It doesn’t include any code that can illustrate the network, provide input data, or perform any operations that could give an output.

Step 2: Define different initialization Methods

You have to create functions to initialize weights using Xavier, Kaiming, and Normal distributions.

Example:

Explanation:

The above code is used to define three functions: init_xavier, init_kaiming, and init_normal. These 3 functions are designed to initialize the weights and biases of a neural network layer, especially nn.linear layer. However, as these functions are called, they don’t produce any direct output. They only help to modify the weights and biases of the input layer (m).

Step 3: Generate dummy data

You can use random data for training to keep things simple.

Example:

Explanation:

The above code doesn’t produce any output. Instead, it helps create and store data in the X, y, dataset, and dataloader variables. A dataloader can be used for iterating through batches of data while training a machine-learning model.

Step 4: Train the model with a variety of initializations

You have to train the same network multiple times, each with a different weight initialization method.

Example:

Output:

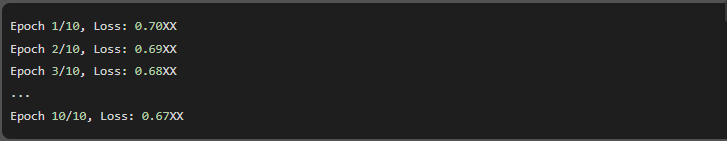

Explanation

The above code is used to train a simple SimpleNN model where a weight initialization is given. It uses CrossEntropyLoss and Adam optimizer while tracking and printing the average loss per epoch.

Step 5: Compare the results

You have to train the model using various initializations and compare the loss curves.

Example:

Output:

Explanation:

The above code is used to train a model. It uses 3 different weight initialization methods (Xavier, Kaiming, and Normal). It then plots and compares their loss curves over epochs.

Observations and Conclusion

By analyzing the loss curves, you can observe that

- Xavier Initialization is best for sigmoid/tanh activations.

- For ReLU-based networks, Kaiming Initialization works well.

- For deeper networks, Normal Initialization may not be optional.

Hence, this method will help you to compare various initialization techniques and choose the best technique for your neural network.

Comparison of Different Weight Initialization Strategies(Beyond Loss Curves)

To compare Different Weight Initialization strategies, you can follow the steps given below:

- Gradient Distribution

- Weight histograms

- Convergence speed

Below is an example of how to visualize weight distributions:

Example:

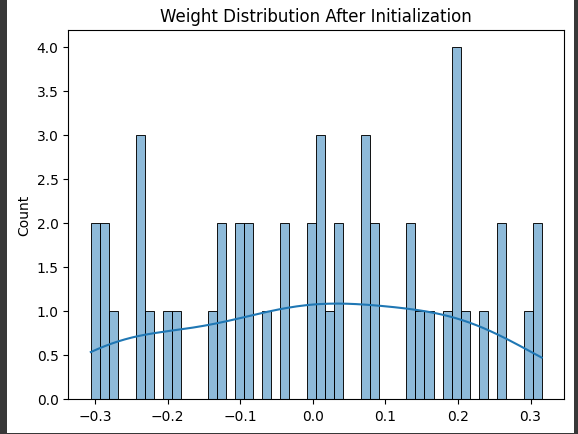

Output:

Explanation:

The above code is used to extract the weights of the first layer of the model. It then uses Seaborn to plot a histogram with a Kernel Density Estimate (KDE) which helps to visualize their distribution after initialization.

Best Practices for Weight Initialization

Here are some important steps you should follow during the time of initializing weights in PyTorch:

- You have to choose the right button on activation functions(e.g., Xavier for sigmoid/tanh, Kaiming for ReLU).

- You have to initialize the biases properly.

- You have to monitor gradients during training to ensure they’re not too large or too small.

- You have to experiment with different methods to find what works best for your model.

Conclusion

Weight initialization is a minor but crucial aspect of deep learning that can affect how quickly and efficiently your model learns. PyTorch has a variety of techniques for initializing weights, including custom methods and built-in functions. You can increase training stability and accelerate convergence by comprehending and utilizing the appropriate initialization strategies.

FAQs

1. Why is weight Initialization important in PyTorch?

PyTorch requires weight initialization to control activation and gradient scales because this practice avoids vanishing or exploding gradients which cause learning performance issues.

2. What are some common weight initialization techniques in PyTorch?

Some of the common weight initialization techniques in PyTorch include Xavier (Glorot) initialization, Kaiming (He) Initialization, and Unifrom/Normal random initialization, each of which is suitable for different activation functions.

3. How can I apply custom weight initialization in PyTorch?

To apply custom weight initialization in PyTorch, you can use model.apply(init_function), where init_function specifies the desired initialization method.

4. When should I use Xavier vs. Kaiming Initialization?

You can use Xavier Initialization for activation functions like sigmoid and tanh, and Kaiming initialization for ReLU-based functions, as it accounts for their systematic behavior.

5. How do I check if my weight initialization is working correctly?

To check if your weight initialization is running correctly you can use seaborn.histplot(weights.flatten()) or check for abnormal gradients using hooks or torch.nn.utils.clip_grad_norm_().