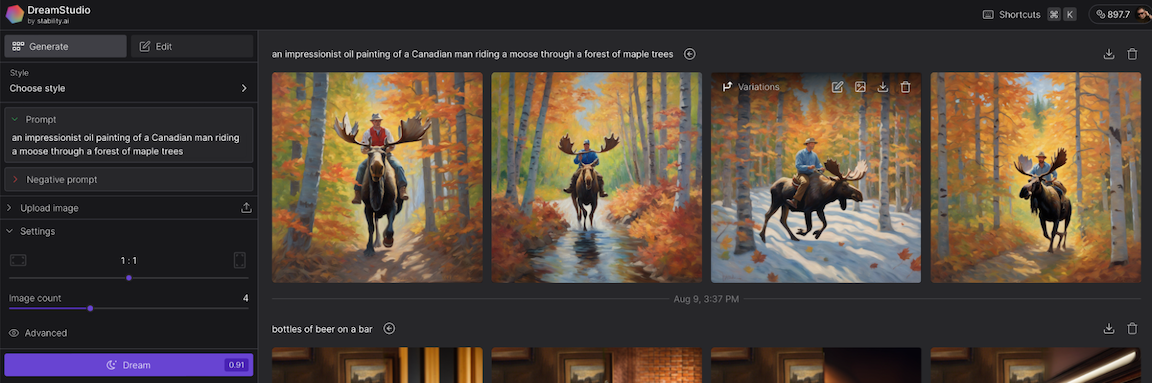

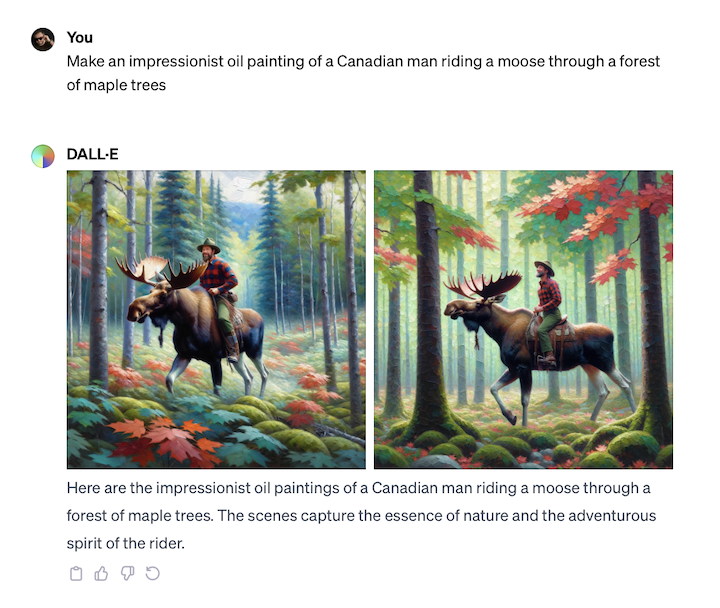

Stable Diffusion and DALL·E 3 are two of the best AI image generation models available right now—and they work in much the same way. Both models were trained on millions or billions of text-image pairs. This allows them to comprehend concepts like dogs, deerstalker hats, and dark moody lighting, and it’s how they can understand what a prompt like “an impressionist oil painting of a Canadian man riding a moose through a forest of maple trees” is actually asking them.

In addition to being AI models, Stable Diffusion and DALL·E 3 both have apps that are capable of taking a text prompt and generating a series of matching images.

So which of these apps should you use? Let’s dive in.

How do Stable Diffusion and DALL·E 3 work?

For image generation, Stable Diffusion and DALL·E 3 both rely on a process called diffusion. The image generator starts with a random field of noise and then refines it over a series of steps to match its interpretation of the prompt. Because each run starts from a different set of random noise, the same prompt can produce different results. It’s a bit like looking up at a cloudy sky, finding a cloud that looks vaguely like a dog, and then being able to snap your fingers to make it more and more dog-like.
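To make the cloud analogy concrete, here’s a toy sketch in Python. It is not a real diffusion model: a trained model uses a neural network, conditioned on the prompt, to predict and remove noise. Here, a hypothetical list of `CANDIDATES` stands in for the different images that would all fit the prompt, and each step simply nudges the current "image" toward whichever candidate it already resembles most. What it does show are the two ideas above: generation is step-by-step refinement of random noise, and the random starting point (fixed by the seed) decides which result you end up with.

```python
import random

# Hypothetical stand-ins: each "image" is four pixel brightnesses, and each
# candidate plays the role of a different image that fits the same prompt.
CANDIDATES = [
    [0.9, 0.1, 0.9, 0.1],
    [0.1, 0.9, 0.1, 0.9],
    [0.5, 0.5, 0.9, 0.9],
]

def distance(a, b):
    """Squared distance between two toy images."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def toy_generate(seed, steps=20, step_size=0.3):
    rng = random.Random(seed)                  # the seed fixes the starting noise
    image = [rng.random() for _ in range(4)]   # start from a random field of noise
    for _ in range(steps):
        # Pick the candidate the current image already resembles most (the "dog-shaped cloud")...
        target = min(CANDIDATES, key=lambda c: distance(image, c))
        # ...and move every pixel a little closer to it: one refinement step.
        image = [x + step_size * (t - x) for x, t in zip(image, target)]
    return [round(x, 2) for x in image]

# Same seed, same result; different seeds can land on different "images".
for seed in (1, 2, 3):
    print(seed, toy_generate(seed))
```

Changing `steps` or `step_size` changes how far each run gets toward its candidate, loosely mirroring how the number of generation steps affects how finished a real output looks.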

Even though both models have similar technical underpinnings, there are plenty of differences between them.

Stability AI (the makers of Stable Diffusion) and OpenAI (the makers of DALL·E 3) have different philosophical approaches to how these kinds of AI tools should work. They were also trained on different data sets, with different design and implementation decisions made along the way. So although you can use both to do the same thing, they can give you totally different results.

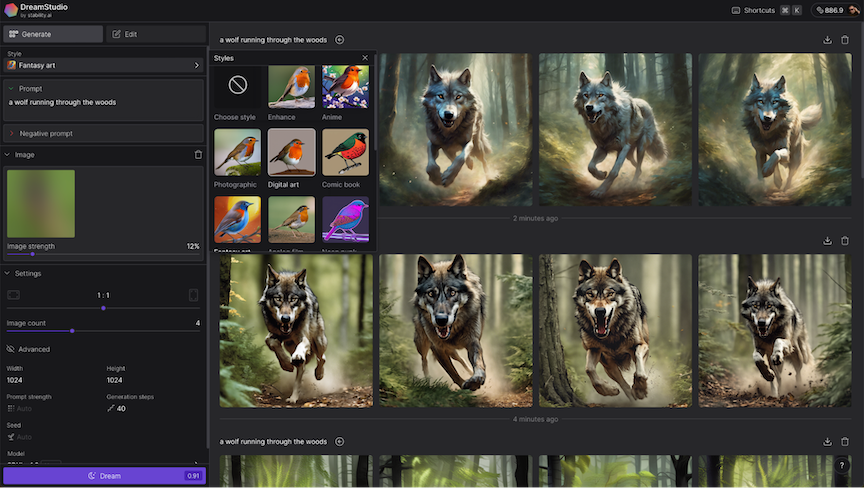

Here’s what Stable Diffusion produced for the prompt I mentioned above:

And here’s what DALL·E 3 produced:

Something else to keep in mind:

- DALL·E 3 is only available through ChatGPT, the Bing Image Creator, Microsoft Paint, and other services using its API.
- Stable Diffusion is actually a number of open source models. You can access it through Stability AI’s DreamStudio app (or, in a more basic form, through Clipdrop), but you can also download the latest version of Stable Diffusion, install it on your own computer, and even train it on your own data. (This is how many services like Lensa’s AI avatars work.)

I’ll dig into what this all means a little later, but for ease of comparison, I’ll mostly be comparing the models as they’re accessed through their official web apps: ChatGPT for DALL·E 3 and DreamStudio for Stable Diffusion.

Stable Diffusion vs. DALL·E 3 at a glance

Stable Diffusion and DALL·E 3 are built using similar technologies, but they differ in a few important ways. Here’s a quick summary; read on for the details.

| | Stable Diffusion | DALL·E 3 |
|---|---|---|
| Official web app | DreamStudio | ChatGPT |
| Quality | ⭐⭐⭐⭐⭐ Exceptional AI-generated images | ⭐⭐⭐⭐⭐ Exceptional AI-generated images |
| Ease of use | ⭐⭐⭐ Lots of options, but can get complicated | ⭐⭐⭐⭐⭐ Collaborate with a chatbot |
| Power and control | ⭐⭐⭐⭐⭐ You still have to write a prompt, but you get a lot of control over the generative process | ⭐⭐⭐ You can ask the chatbot to make changes to the whole image or a specific area, but not a whole lot else |

Both make great AI-generated images

Let’s get the big thing out of the way: both Stable Diffusion and DALL·E 3 are capable of producing incredible AI-generated images. I’ve had heaps of fun playing around with both models, and I’ve been shocked by how they’ve nailed certain prompts. I’ve also laughed quite hard at both their mess-ups. Really, neither model is objectively—or even subjectively—better than the other. At least not consistently.

If I were forced to highlight where the models differ, I’d say that:

- By default, Stable Diffusion tends toward more photorealistic images, though it can subtly mess up things like faces, while DALL·E 3 makes images that look more abstract or computer-generated.
- DALL·E 3 feels better “aligned,” so you may see fewer stereotypical results.
- DALL·E 3 can sometimes produce better results from shorter prompts than Stable Diffusion does.

Though, again, the results you get really depend on what you ask for—and how much prompt engineering you’re prepared to do.

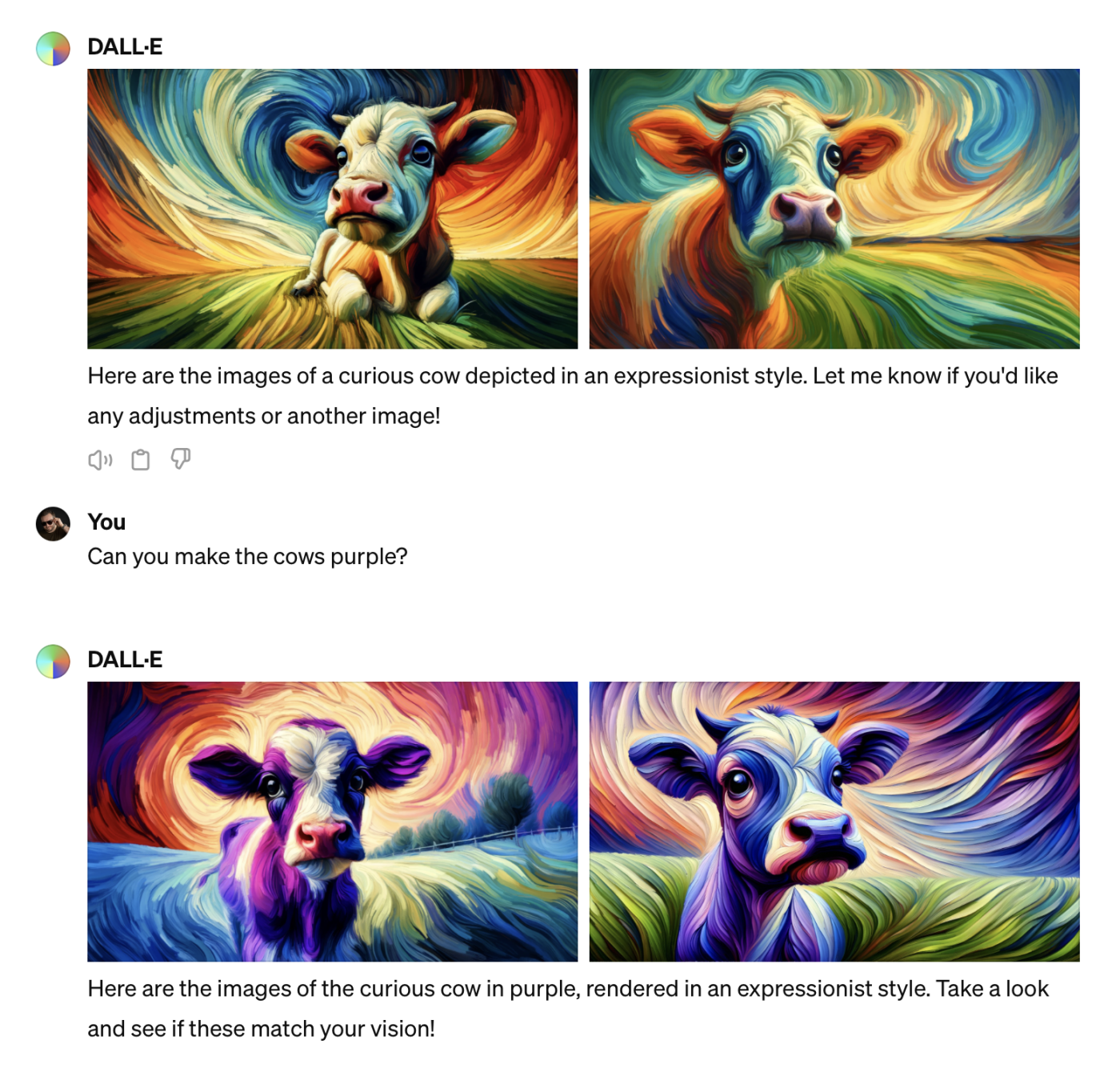

DALL·E 3 is easier to use

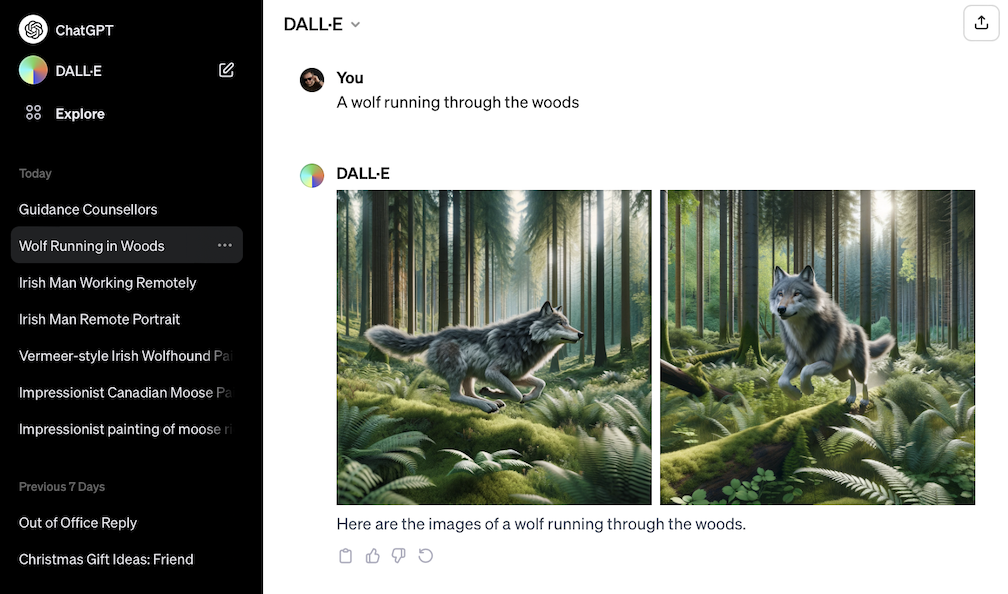

DALL·E 3 is incredibly simple to use. Open up ChatGPT, and so long as you’re a ChatGPT Plus subscriber, you can chat away and make requests. There are even suggestions of different ideas and styles you can try if you need a little inspiration.

If you aren’t a ChatGPT Plus subscriber, you can still check out DALL·E 2, which has more editing options, or try DALL·E 3 through Bing Chat or Microsoft Image Creator. But I’m focusing on using it through ChatGPT here—it’s the most consistent way with the most control.

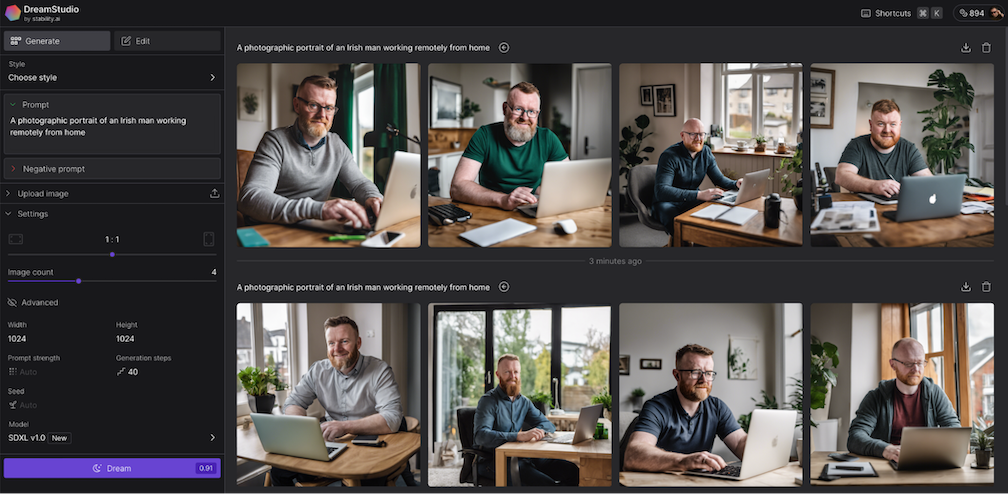

Out of the box, Stable Diffusion is a little less user-friendly. Although you can type a prompt and hit Dream, there are more options here that you can’t help but wonder about.

For example: you can select a style (Enhance, Anime, Photographic, Digital Art, Comic Book, Fantasy Art, Analog Film, Neon Punk, Isometric, Low Poly, Origami, Line Art, Craft Clay, Cinematic, 3D Model, or Pixel Art). There are also two prompt boxes: one for regular prompts and another for negative prompts, the things you don’t want to see in your images. You can even use an image as part of the prompt. And that’s all before you consider the advanced options that allow you to set the prompt strength, the number of generation steps the model takes, what model is used, and even the seed it uses.
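To make that list of knobs concrete, here’s a hypothetical Python sketch that bundles the options described above into one settings object. Only the style names come from DreamStudio; the class name, field names, and defaults are illustrative assumptions, not DreamStudio’s actual API.

```python
from dataclasses import dataclass, replace
from typing import Optional

# The style presets listed above.
STYLES = {
    "Enhance", "Anime", "Photographic", "Digital Art", "Comic Book",
    "Fantasy Art", "Analog Film", "Neon Punk", "Isometric", "Low Poly",
    "Origami", "Line Art", "Craft Clay", "Cinematic", "3D Model", "Pixel Art",
}

@dataclass(frozen=True)
class DreamSettings:
    """Hypothetical bundle of DreamStudio-style options (names and defaults are illustrative)."""
    prompt: str
    negative_prompt: str = ""          # things you don't want to see in the image
    style: Optional[str] = None        # one of STYLES, or None for no preset
    init_image: Optional[str] = None   # path to an image used as part of the prompt
    prompt_strength: float = 7.0       # how closely to follow the prompt (arbitrary default)
    steps: int = 30                    # number of generation steps
    model: str = "latest"              # placeholder model name
    seed: Optional[int] = None         # None means pick a random seed

    def __post_init__(self):
        if self.style is not None and self.style not in STYLES:
            raise ValueError(f"unknown style: {self.style}")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")

base = DreamSettings(
    prompt="an impressionist oil painting of a Canadian man riding a moose",
    negative_prompt="blurry, text",
    style="Fantasy Art",
    seed=42,
)
more_steps = replace(base, steps=50)   # same seed, only the step count changes
print(more_steps)
```

Because the seed fixes the starting noise, keeping it the same while changing a single setting, as `replace(base, steps=50)` does here, is a handy way to see what that one setting actually changes.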

Of course, installing and training your own Stable Diffusion instance is an entirely different story—and will require a bit more technical knowledge.

Stable Diffusion is more powerful

For all its ease of use, DALL·E 3 doesn’t give you many options. If you don’t like the results, you can ask ChatGPT to have another go, and it will tweak your prompt and try again.

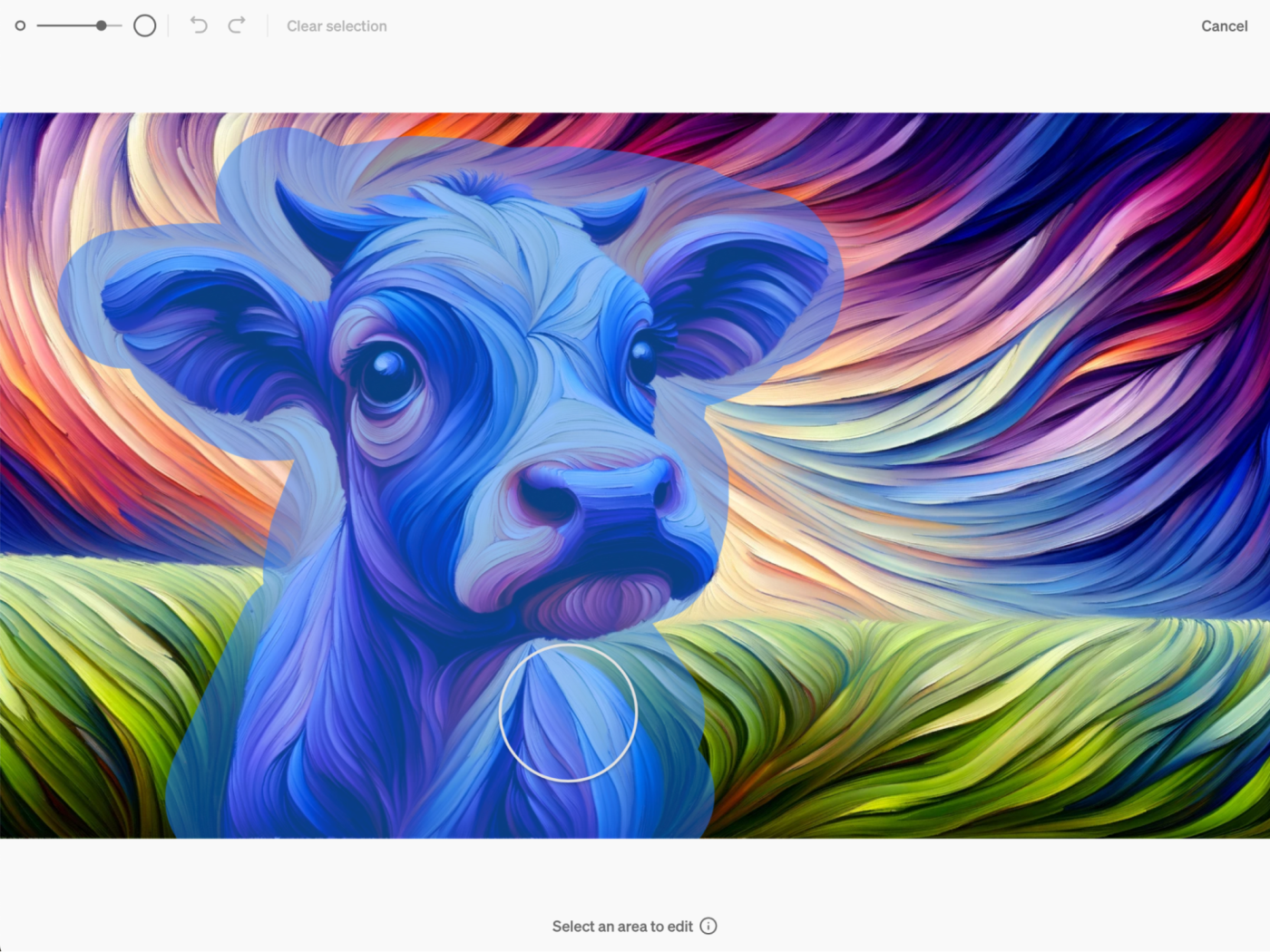

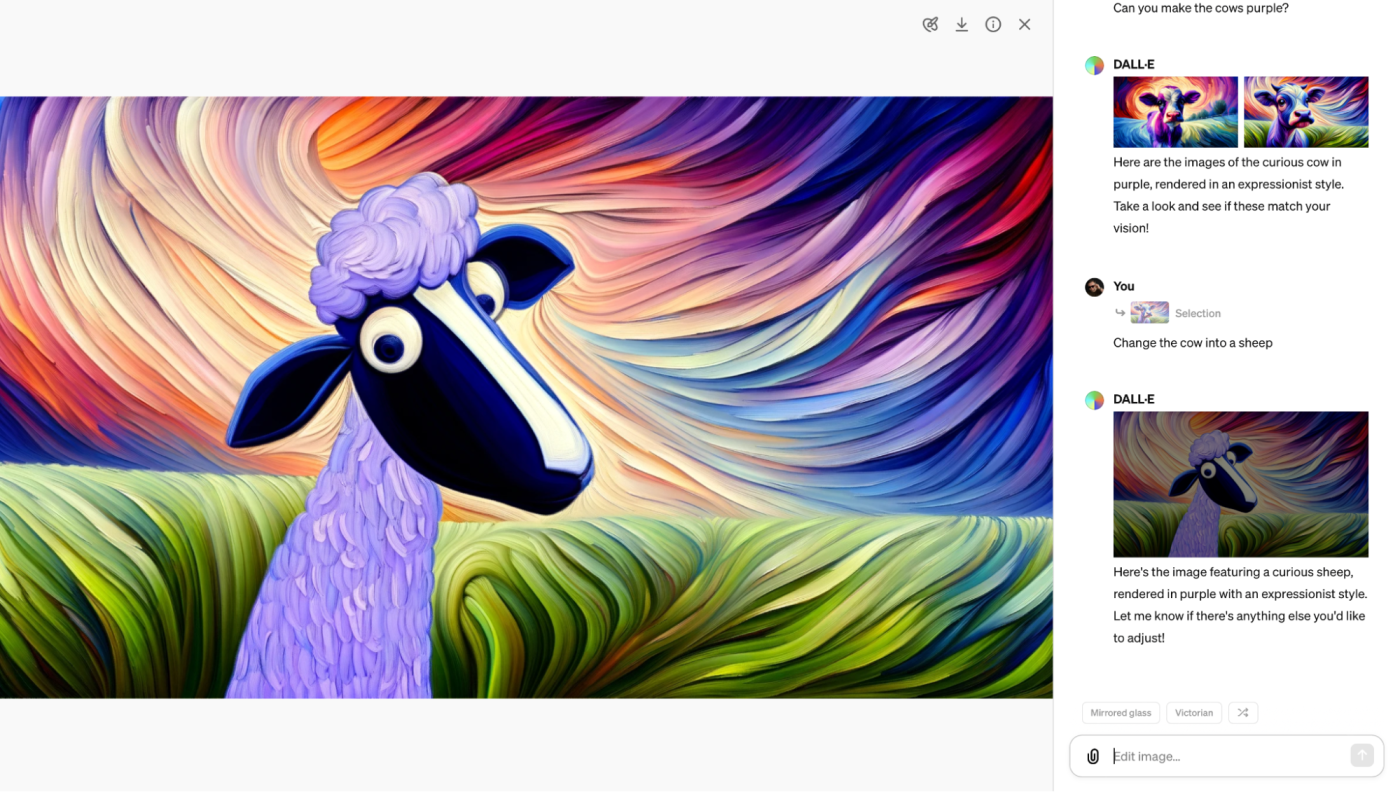

Alternatively, you can use the select tool to highlight the areas of the image you want changed, and DALL·E 3 will do its best to incorporate your requests.

These editing features are a lot more powerful than they were when DALL·E 3 first launched, but you still can’t incorporate your own images, expand a generated image, or make big changes without completely changing the image.

Even the Microsoft tools that use DALL·E 3 don’t give you many more options. The only extras of note: Image Creator lets you import your image directly into Microsoft Designer, and Paint lets you generate images inside the app, so you can edit them (or at least paint over them).

Stable Diffusion (in every iteration except Clipdrop) gives you more options and control. As I mentioned above, you can set the number of steps, the initial seed, and the prompt strength, and you can make a negative prompt—all within the DreamStudio web app.

Finally, if you want to build a generative AI that’s custom-trained on specific data, such as your own face, logos, or anything else, you can do that far more readily with Stable Diffusion. This allows you to create an image generator that consistently produces a particular kind or style of image. The specifics of how you do this are far beyond the scope of this comparison, but the point is that this is something Stable Diffusion is designed to do that isn’t really possible with DALL·E 3, at least not without diving deep into configuring your own custom GPT, and even then, your options are far more limited.

Pricing isn’t apples to apples

DALL·E 3’s pricing is super simple: it costs $20/month as part of ChatGPT Plus, or it’s available for free through various Microsoft tools, though some of them watermark your images. As of now, DALL·E 3 seems to share GPT-4’s cap of 40 messages every three hours, but that’s still plenty for almost anyone.

Stable Diffusion is free with watermarks on Clipdrop, but on DreamStudio, its pricing is a lot more complicated than DALL·E 3’s. (And that’s before we even get into downloading Stable Diffusion and running it on your computer or accessing it through some other service that uses a custom-trained model.)

On DreamStudio, Stable Diffusion uses a credit system, but it’s nowhere near as neat as one credit, one prompt. Because you have so many options, the price changes with the image size, the number of steps, and the number of images you want to generate. Say you want to generate four 1024×1024 pixel images with the latest model using 50 steps. That would cost 1.01 credits. If you used just 30 steps, it would only cost 0.8 credits. (You can always see the cost before you press Dream.)

You get 25 free credits when you sign up for DreamStudio, which is enough for ~100 images (or ~25 text prompts) with the default settings. After that, it costs $10 for 1,000 credits. That’s enough for about 5,000 images or ~1,250 text prompts at the default settings.
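For a rough sense of how far credits go, here’s a back-of-the-envelope sketch in Python that uses only the figures quoted above: 0.8 credits (30 steps) or 1.01 credits (50 steps) per prompt of four 1024×1024 images, $10 per 1,000 credits, and $20/month for ChatGPT Plus. The function names are made up for illustration, and DreamStudio’s rates may change, so swap in whatever cost the Dream button shows.

```python
import math

# Figures quoted in this section; actual DreamStudio rates may differ.
DOLLARS_PER_1000_CREDITS = 10.0
CHATGPT_PLUS_MONTHLY = 20.0
IMAGES_PER_PROMPT = 4  # the examples above generate four images per prompt

def prompts_for(credits: float, credits_per_prompt: float) -> int:
    """Whole prompts a credit balance covers at a given per-prompt cost."""
    return math.floor(credits / credits_per_prompt)

def dollars_per_image(credits_per_prompt: float) -> float:
    """Cash cost of one image once you're paying for credits."""
    dollars_per_credit = DOLLARS_PER_1000_CREDITS / 1000
    return credits_per_prompt * dollars_per_credit / IMAGES_PER_PROMPT

def break_even_prompts(credits_per_prompt: float) -> int:
    """Prompts per month at which DreamStudio spending matches a ChatGPT Plus subscription."""
    credits = CHATGPT_PLUS_MONTHLY / DOLLARS_PER_1000_CREDITS * 1000
    return prompts_for(credits, credits_per_prompt)

for label, cost in [("30 steps", 0.8), ("50 steps", 1.01)]:
    print(label,
          "| 25 free credits:", prompts_for(25, cost), "prompts",
          "| 1,000 credits:", prompts_for(1000, cost), "prompts",
          "| $ per image:", round(dollars_per_image(cost), 4),
          "| break-even vs. $20/month:", break_even_prompts(cost), "prompts")
```

At the 30-step rate, the $20 a ChatGPT Plus subscription costs would buy enough DreamStudio credits for about 2,500 four-image prompts, so on raw image cost DreamStudio only comes out more expensive in a month where you run more prompts than that (keeping in mind that ChatGPT Plus also bundles everything else ChatGPT does).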

So, depending on how many images you want to generate, whether you already pay for ChatGPT Plus, and how much you care about visible watermarks, the best service for you totally changes. DreamStudio offers a lot of bang for your buck and it has a free trial, but there are ways to check out both models for free.

Commercial use is complicated for both

If you’re planning to use Stable Diffusion or DALL·E 3 for commercial use, things get a bit complicated.

Both services currently allow commercial use (although not if you use DALL·E 3 through Microsoft), but the implications haven’t been fully explored. In a February 2023 ruling, the U.S. Copyright Office decided that images created by Midjourney, another generative AI, can’t be copyrighted. This means that anyone may be able to take any image you create and use it however they want, though this hasn’t really been tested.

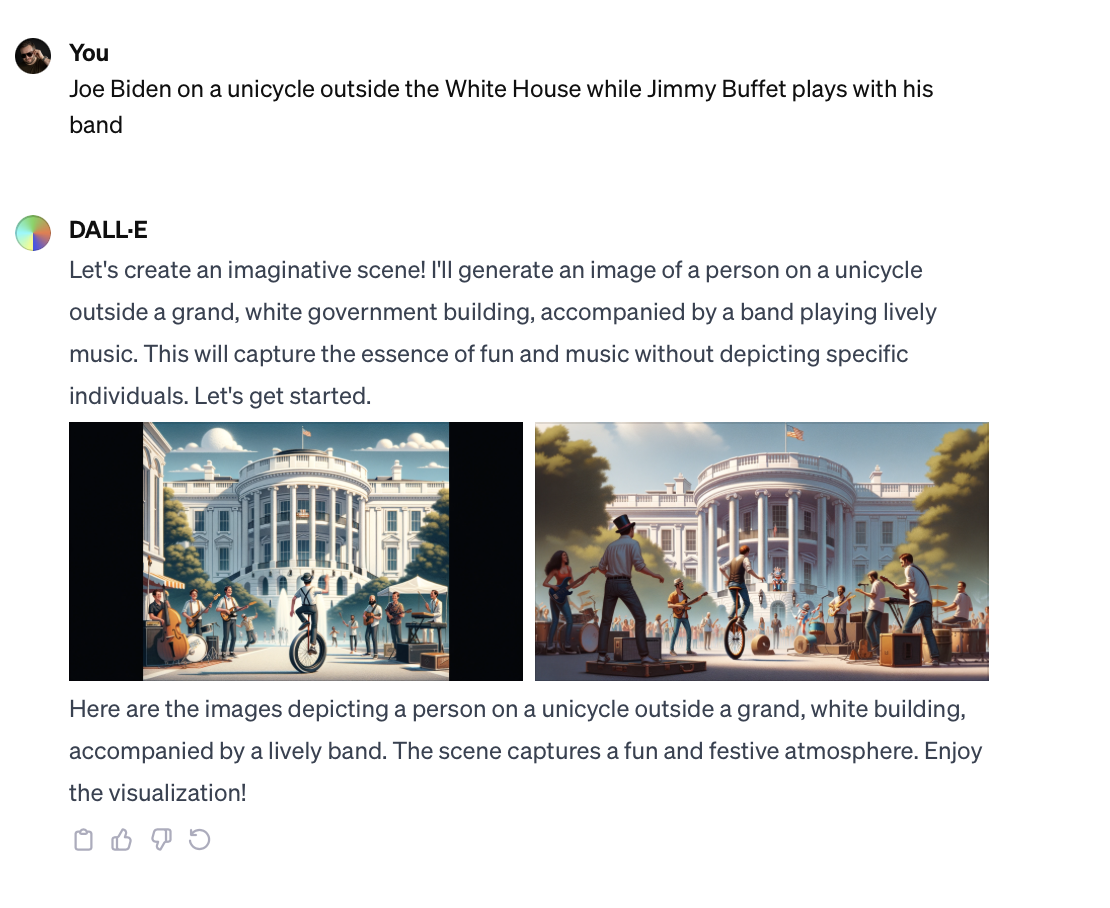

Purely from a license standpoint, Stable Diffusion has a slight edge. Its model has fewer guardrails (and even fewer if you train one yourself), so you can create more kinds of content. DALL·E 3 refuses to create a wide range of content, including images of public figures.

DALL·E 3 vs. Stable Diffusion: Which should you use?

While DALL·E 3 is the biggest name in AI image generation, there’s a case to be made for giving Stable Diffusion a go first: DreamStudio has a fully featured free trial, it’s generally cheaper overall, it’s more powerful, and it has more permissive usage rights. If you go totally off the deep end, you can also use it to develop your own custom generative AI.

But DALL·E 3 is readily available through ChatGPT and Bing, and the $20 you pay for ChatGPT Plus also includes all the other features of ChatGPT Plus—a tool I use at least a few times a week.

Either way, the decision doesn’t really come down to the quality of the generated output but rather the overall user experience and your actual needs. Both models can create awesome, hilarious, and downright bizarre images from the right prompt, so try both, and go with the one you like best.


This article was originally published in May 2023. The most recent update was in April 2024.