My experience working with generative AI image tools has been mixed. Stable Diffusion, Midjourney, and DALL·E are incredible tools for design and rapid ideation. But each one has drawbacks: DALL·E’s output can struggle with photorealism, Midjourney is hampered by its clunky Discord-based user interface, and Stable Diffusion has a bit of a learning curve.

Adobe Firefly—Adobe’s entry into the generative AI space—offers enterprise-friendly features and an impressive level of polish. It has a unique set of advantages: an intuitive user interface, a designer-focused set of features, and a massive, built-in audience. While Adobe’s decision to launch Firefly was unquestionably a mainstream moment for generative AI, one question remains: is it any good?

I took it for a spin, and in this article, I’ll walk you through what makes Adobe Firefly different from other AI tools, highlight its capabilities, and help you decide if you should consider using Firefly over the slew of other art generators out there.

What makes Adobe Firefly different?

The leading generative AI tools aren’t keen to reveal precisely how they train their models. But it’s an open secret that, as Midjourney founder David Holz said in a September 2022 interview, most of them built their datasets with “a big scrape of the internet” without seeking consent from copyright holders. The result? Artists are suing over alleged copyright infringement.

Adobe hopes to avoid that fate. Unlike most AI tools, Firefly was trained using only licensed images and public domain content. This makes it a brand-safe option for corporations and commercial design work. Adobe also tags the images it generates with Content Authenticity Initiative metadata, which lets people know the image was generated with AI and provides attribution for content used in the image’s creation. To further boost its appeal to big brands, Adobe’s Firefly Services allows enterprises to train custom AI models based on their intellectual property, making it easier to scale campaigns without sacrificing brand consistency. IBM’s consulting division now uses Firefly’s API to automate workflows for its 1,600 designers.

Apart from its copyright-friendly (and arguably more ethical) approach, Adobe’s key advantage is its massive existing foothold in the design industry. Over 90% of creative professionals use Adobe Photoshop. And indeed, in the first seven months after Firefly’s release in March 2023, users generated more than three billion images.

Adobe has already rolled out Firefly to millions of Photoshop, Illustrator, InDesign, and Adobe Express users and will eventually offer generative audio and video AI features in popular products like Premiere Pro. With features like generative recolor, text effects, and sketch to image, Adobe is tailoring Firefly to the needs of professional designers while keeping it accessible to everyone.

What can Adobe Firefly do?

Text-to-image

Text-to-image is the bread and butter of any generative AI image tool—and Firefly nails it. It’s also got advantages over other tools, particularly when it comes to the user interface. (You can access text-to-image via Adobe Firefly’s web app and within products like Adobe Express and Adobe Stock; in the following examples, I’m using the web app.)

Here’s an example of what Adobe Firefly can do with the prompt “a woman staring through a cafe window with her reflection in it, close-up, looking off into the distance.”

This composition style is popular in the world of premium stock photos. Adobe Firefly, which pulled much of its training data from Adobe’s stock library, executes it at a level that’s at least as polished as Midjourney, Stable Diffusion, and DALL·E.

This is particularly true since the release of Firefly’s upgraded Image 2 model in October 2023. The first version of Firefly’s text-to-image model—like other early AI art tools—generated odd image artifacts. In particular, it struggled with creating realistic human hands. Firefly’s Image 2 model rarely suffers from those issues, and it takes an impressive leap forward in output quality.

Even more than its output quality, though, Firefly’s advantage is its user interface. It has the best UI I’ve seen in this space—hands down.

When you first click the text-to-image button, you’re presented with a “wall of inspiration” that shows you what’s possible with different prompts and styles, from claymation-style dinosaurs to photorealistic landscapes.

After clicking on an image, you can tweak the prompt and settings until you get what you want. If you’re struggling to get an output you like, just go back to the wall of examples for visual inspiration. Or, if you already know what you want, you can type in the prompt directly.

My problem with the existing crop of generative AI image tools is that they tend to be overly technical. You have to start from scratch with a prompt that describes not only the content of your image, but also the technical parameters of your output: elements like style, aspect ratio, content type, lighting, composition, and color and tone.

Refreshingly, Firefly has built-in settings for all of this. You can use your prompt to describe the content of your image and refine your settings later. This workflow is more efficient, and has a gentler learning curve than anything else I’ve seen.
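To make the difference concrete, here is a minimal Python sketch of what a prompt-only tool effectively asks you to do by hand: fold every technical parameter into one string alongside the content. The `ImageSettings` class, its field names and defaults, and `build_flat_prompt` are all hypothetical and exist only for illustration; they are not part of Firefly or any other tool’s API.

```python
from dataclasses import dataclass


@dataclass
class ImageSettings:
    """Hypothetical stand-in for options a UI might expose as separate controls."""
    style: str = "photo"
    aspect_ratio: str = "4:3"
    lighting: str = "golden hour"
    composition: str = "close-up"
    color_and_tone: str = "warm tone"


def build_flat_prompt(content: str, settings: ImageSettings) -> str:
    """Fold the content and every setting into a single comma-separated prompt."""
    parts = [
        content,
        f"{settings.style} style",
        f"{settings.aspect_ratio} aspect ratio",
        f"{settings.lighting} lighting",
        f"{settings.composition} composition",
        settings.color_and_tone,
    ]
    return ", ".join(parts)


# The content description stays the same; only the settings object changes.
content = "a woman staring through a cafe window"
print(build_flat_prompt(content, ImageSettings()))
print(build_flat_prompt(content, ImageSettings(style="art", lighting="studio")))
```

Keeping the content and the settings in separate places, as in this sketch, means you can change one setting without rewriting the description, which is roughly the workflow Firefly’s built-in controls give you.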

You can also drag and drop a reference image into Firefly’s interface to get the precise look you want. This is perfect for keeping images consistent with your brand—and much more efficient than trying to use text to describe an image’s style.

Structure reference, one of Firefly’s newer text-to-image features, lets you experiment with a range of styles while keeping the “blueprint” of the scene intact. After uploading a photo of my living room, I added instructions via Firefly’s text prompt to reimagine it in the style of the 1970s. (Groovy!)

Generative fill

Generative fill is available in the web version of Firefly and is also integrated into Adobe Photoshop and Adobe Express. As a 20-year veteran user of Photoshop, I see it as the kind of feature I always wished I’d had: a way to highlight something I don’t want and cleanly replace it with something I do want.

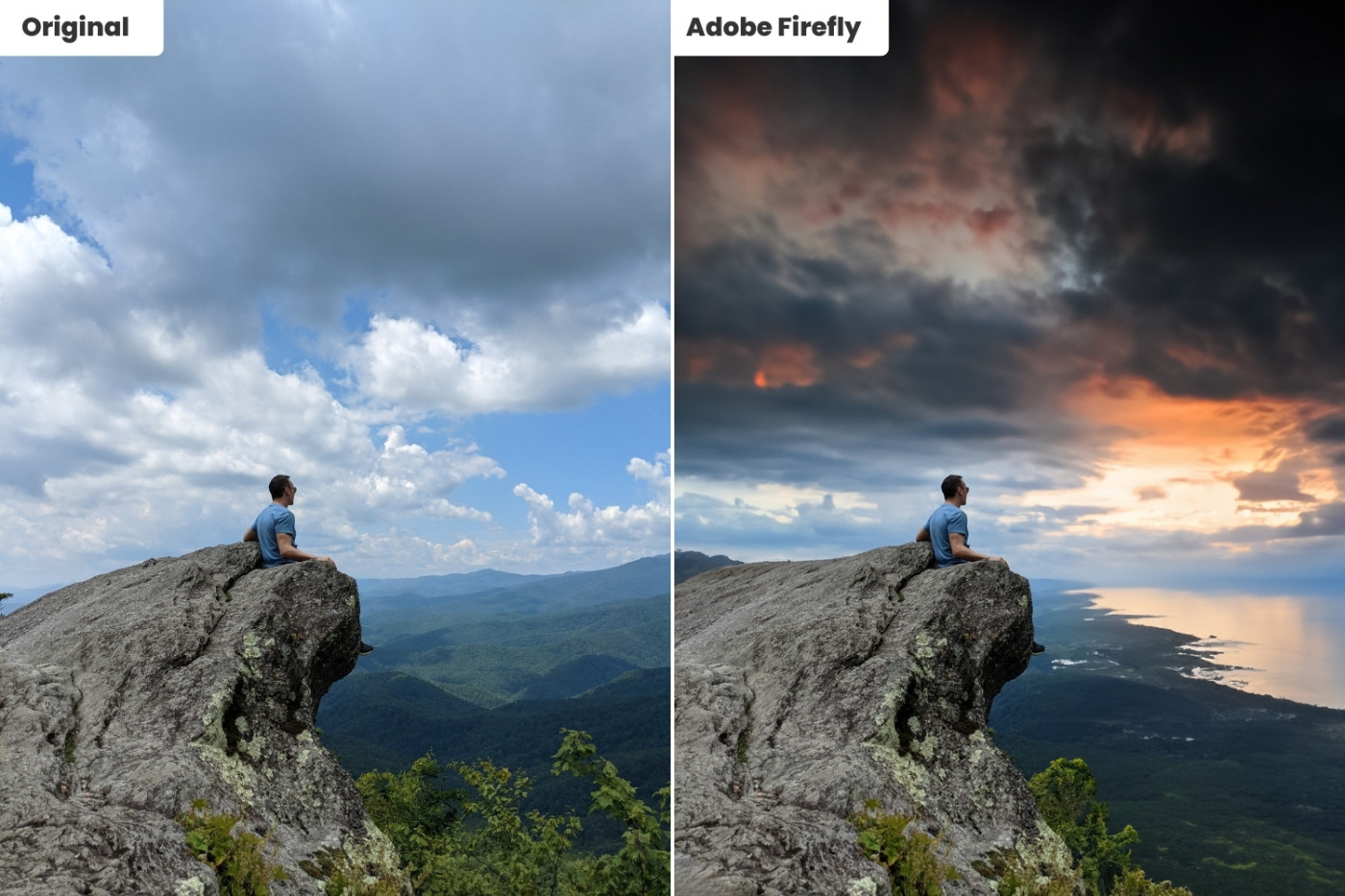

To test this feature, I uploaded a photo of myself looking out over the Blue Ridge Mountains in North Carolina. Then, I selected the entire background and instructed Adobe Firefly to change it to “watching the sunset over the ocean.”

Cool, right? But if your experience is like mine, you won’t get results like this on your first try. Here’s the secret to using this tool: highlight broad swaths of your image, then give Firefly as much creative liberty as you can.

Theoretically, you should be able to use generative fill to superimpose new objects onto your photo. Unfortunately, it doesn’t seem like the benefits of Firefly’s Image 2 model have made their way to the generative fill feature yet: when I asked Firefly to generate objects like eagles, blimps, or airplanes in the sky, it created comically fake-looking outputs. Generative fill also struggles mightily with anything related to humans—except removing them from your photo. That said, when you let it do what it’s best at, the results are impressive, and it’s a blast to play around with.

Text effects

Many of the features in Adobe Firefly’s arsenal are highly specific to the needs of designers rather than casual users. Text effects—which is now integrated directly into Adobe Express—is one of them. This typography tool lets you turn text into stunning artwork using prompts.

I entered the word “jungle” and, for my prompt, instructed Firefly to fill the text with “jungle vine and animals.”

You can also use text effects to add textures. Here’s what the prompt “dripping honey” looks like.

For designers, this is an immense time-saver. It also opens the door to a new world of creative branding possibilities. The only drawback: as of now, there are only a handful of fonts available.

Generative recolor

Generative recolor is another Adobe Firefly feature tailor-made for designers. It works on vector files, which can be scaled up without losing quality, so designers often use them for commercial print work. They are especially critical for large assets like billboards, signs, and vehicle wraps.

Now integrated into Adobe Illustrator, vector recoloring allows you to experiment with different color combinations quickly. Using one of Firefly’s stock vector images, I used the recoloring prompt “80s style bold” and got a suitably Fresh Prince of Bel-Air-esque output.

Text to template

Firefly’s Text to template feature, available within Adobe Express, uses AI to generate unique templates for print and social media assets, like posters, flyers, and Instagram and Facebook posts. It draws on Adobe’s AI engine as well as its vast library of stock images.

To test this feature, I generated templates for an imaginary National Taco Day campaign. Text to template is a good starting point for the ideation phase of this sort of campaign: it gives you a wide range of designs to break through designer’s block. But in my experience, the AI-generated templates require quite a bit of customization. The main issue isn’t the text or images but the color palettes and designs, which are often low-contrast and hard to read. (Since this feature is still in beta, I expect to see it improve over time.)

Firefly vs. DALL·E 3, Midjourney, and Stable Diffusion

I ran a head-to-head comparison of Adobe Firefly’s Image 2 model against three other popular generative AI tools: DALL·E 3, Midjourney v5.2, and Stable Diffusion XL v1.0.

For each tool, I used the same prompt: “A close-up portrait of an elderly man outside in the evening, realistic, photographic look.”

Adobe Firefly’s new Image 2 model has successfully overcome the “posed stock photo” quality that was common in the earlier version of Adobe’s model. Whereas Stable Diffusion and Midjourney produced more polished outputs than Adobe Firefly in prior versions, Adobe’s new model has closed the gap—as has DALL·E, with the release of DALL·E 3.

I’ve seen each of these leading apps outdo one another in certain instances. But with sophisticated prompting, it’s clear that Adobe Firefly can run with the big kids.

Firefly’s roadmap for generative audio and video

Adobe has rolled out significant updates to Firefly since its inception, but one major category of AI features has yet to be launched: audio and video.

Premiere Pro, Adobe’s popular video editing tool, was used to edit nearly two-thirds of the films shown at the 2023 Sundance Film Festival. Along with other Adobe multimedia tools like Adobe Audition and Adobe After Effects, Premiere Pro is next in line for the Firefly generative AI treatment.

While the final set of features has yet to be announced, Adobe has hinted that users will be able to use text-based editing to:

- Generate and overlay subtitles
- Create music and sound effects
- Adjust video coloring and brightness
- Add animated text effects
- Adjust videos using generative fill
- Source and sequence relevant B-roll footage
- Generate storyboards and shot details

Video editing is notoriously tedious. According to one editor, a five-minute video requires at least 10 hours of editing time; Ri-Karlo Handy, an L.A.-based film editor and producer, says he once edited the same scene over 40 different ways. The upshot? Generative AI for video, audio, and animation has substantial time-saving potential.

Ready to experiment with Adobe Firefly?

Mark Zuckerberg once described his early approach at Facebook as “move fast and break things.” That’s the approach most generative AI tools are taking today—they’d rather ask for forgiveness than permission.

Adobe Firefly is taking the opposite approach. Rather than ignoring artists’ worries about attribution and brands’ concerns about copyright and liability, Adobe has designed a tool tailored to the needs of both. Given the quality of Firefly’s output, its ease of use, its ethical design, and the fact that its features are rolling out to Adobe’s built-in audience of millions of users, it’s a worthy addition to the generative AI space.

If you’ve already got an Adobe subscription, you can access Firefly’s features today in products like Photoshop, Illustrator, and Adobe Express. Otherwise, sign up for the web version of Adobe Firefly to try it for yourself.

This article was originally published in June 2023. The most recent update was in April 2024.