Like many people early in their careers, I spent one of my first office jobs pulling together company data for a lead database. It was painfully manual, involving lots of scrolling, copying, and pasting (plus a fair dose of distraction: I remember sneaking peeks at a PDF of Harry Potter on another browser tab). But today’s interns can rest a little easier: there’s now a slew of tools that can automate this process.

Browse AI, a no-code data scraping tool, is one of them. Whether you’re building a lead database, scraping data for a software product, or hacking together a time-saving solution for internal use, Browse AI lets you deploy robots to extract data on your behalf.

To test out the latest in no-code data scraping technology, I used Browse AI to create my own data scraping robot. Here’s what I found.

How Browse AI works

Before we go too far, here’s a quick primer on scraping data from the web, why you might want to do that, and how Browse AI streamlines the process.

You might be familiar with APIs (application programming interfaces), which allow software applications to talk to each other and exchange data behind the scenes. APIs are the backbone of many of your favorite online products and services, from weather forecasting sites to travel booking engines.

But APIs don’t include every piece of data you might want—and some websites don’t offer APIs at all. That’s where so-called “scrapers” come in, which periodically scan websites and extract information. People use data scraping tools for things like:

- Monitoring price changes and sales on sites like Amazon and Best Buy
- Pulling real estate listings data from sites like Zillow and Redfin
- Importing the latest reviews from sites like Yelp and Booking.com

Browse AI offers something it calls “prebuilt robots” for all of the tasks above, allowing you to set them up with just a couple of clicks. But you can also train a custom robot to scrape data from any website, or even create a chain of robots working together to complete data collection tasks on your behalf.
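
For a sense of what any scraper, prebuilt or custom, is doing under the hood, here’s a minimal Python sketch. It isn’t Browse AI’s code: the HTML snippet, the class names, and the `ProductParser` helper are all made up for illustration, and the page is inlined so the example runs without touching a real website.

```python
from html.parser import HTMLParser

# Hypothetical product listing markup, inlined so the example runs offline.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk lamp</span><span class="price">$24.99</span></li>
  <li class="product"><span class="name">Monitor stand</span><span class="price">$39.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text inside <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.rows = []       # one dict per product
        self._field = None   # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.rows.append({})          # start a new row
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class       # the next text belongs to this field

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape(html):
    """Parse the HTML and return the extracted rows."""
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


rows = scrape(SAMPLE_HTML)
for row in rows:
    print(row["name"], row["price"])
```

A hand-written scraper like this breaks as soon as the page’s markup changes, which is a big part of the appeal of a no-code tool that lets you point and click instead.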

Browse AI pricing: Browse AI’s plans start at $48.75/month for 2,000 credits (equivalent to extracting around 20,000 rows of data). To see how automated data scraping can boost your workflow, sign up for a free Browse AI account and get 50 credits/month.
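
Here’s a quick back-of-the-envelope check on those numbers. It assumes credits convert to rows at a constant rate, which is a simplification (the “around 20,000 rows” figure suggests the real rate depends on the task).

```python
# Figures quoted above; the constant credits-to-rows rate is an assumption.
PLAN_PRICE_USD = 48.75
PLAN_CREDITS = 2_000
PLAN_ROWS = 20_000
FREE_CREDITS = 50

rows_per_credit = PLAN_ROWS / PLAN_CREDITS
cost_per_1000_rows = PLAN_PRICE_USD / PLAN_ROWS * 1000
free_rows = FREE_CREDITS * rows_per_credit

print(f"Rows per credit: {rows_per_credit:.0f}")
print(f"Cost per 1,000 rows: ${cost_per_1000_rows:.2f}")
print(f"Free plan, rows per month: {free_rows:.0f}")
```

Under that assumption, the free plan covers roughly 500 rows a month, and the starter plan works out to about $2.44 per 1,000 rows.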

Putting Browse AI to the test

I’ve never built a web scraping robot before, so I’ll be walking you through Browse AI’s capabilities as a relative beginner. These are the Browse AI features we’ll be looking at:

- Basic scraping
- Bulk scraping
- Monitoring
- Workflows

Basic scraping

Let’s start with Browse AI’s most straightforward capability: scraping data from a single page. To do this, you can use a prebuilt robot or train a custom robot.

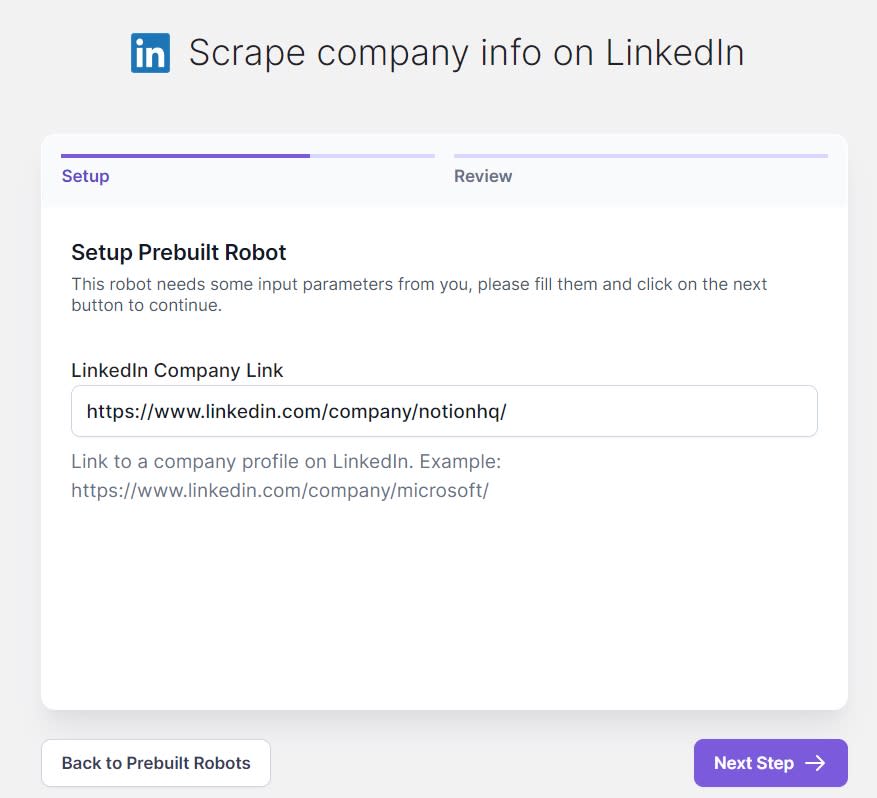

To demonstrate how this works, let’s imagine I’m building a lead prospecting list and want to pull company information from LinkedIn. Browse AI offers quite a few prebuilt robots for this purpose that scrape popular websites like LinkedIn, Clutch, and ZoomInfo. I tested out a prebuilt robot titled Scrape company info on LinkedIn.

To get started, you need a LinkedIn URL for your target company.

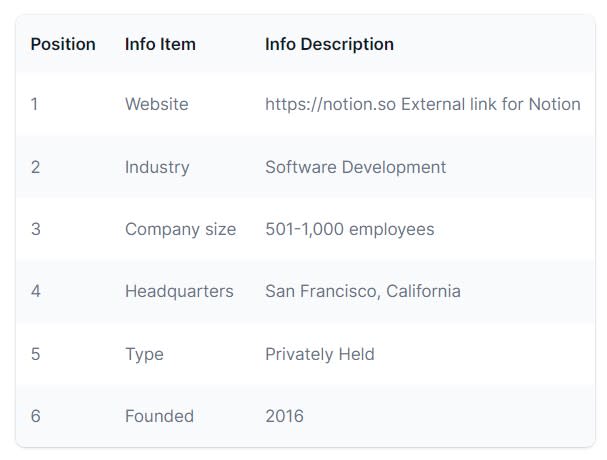

After Browse AI works its magic, it’ll pull the relevant data into its system.

Scraping data from a single web page is the simplest way to use Browse AI, but it’s not much more productive than going to that page and copying and pasting the information yourself. For the real efficiency gains, you’ll want to use the bulk scraping feature.

Bulk scraping

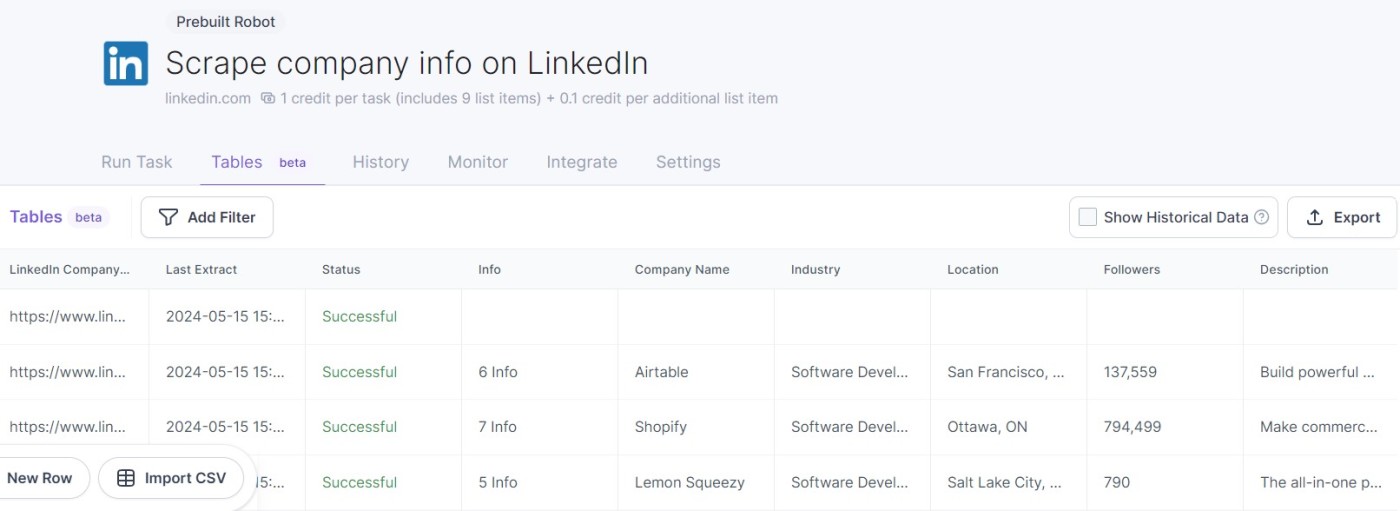

You can use Browse AI to automate web scraping for as many as 50,000 items. It’s nearly as easy as scraping a single item, except that instead of using Browse AI’s web interface to start the process, you’ll need to upload a CSV.
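
If your list of targets lives somewhere other than a spreadsheet, a few lines of Python can produce the CSV. This is only a sketch: the URLs are placeholders, and the `company_url` column name is an assumption, so use whatever header your robot actually asks for.

```python
import csv
import io

# Hypothetical company pages; replace with your own targets.
company_urls = [
    "https://www.linkedin.com/company/example-one/",
    "https://www.linkedin.com/company/example-two/",
    "https://www.linkedin.com/company/example-one/",  # duplicate on purpose
]


def build_bulk_csv(urls, column="company_url"):
    """Return CSV text with a header row and one de-duplicated URL per row.

    The column name is a placeholder, not a documented Browse AI field.
    """
    unique_urls = list(dict.fromkeys(urls))  # drops repeats, keeps order
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([column])
    writer.writerows([url] for url in unique_urls)
    return buffer.getvalue()


csv_text = build_bulk_csv(company_urls)
print(csv_text)
```

De-duplicating first is a small design choice that keeps you from running (and presumably paying for) the same task twice.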

Note that scraping doesn’t happen at lightning-quick speeds. Pulling data from LinkedIn took me about a minute and a half per task. You may also find that some tasks time out, which happened to me. (You’ll just need to rerun the tasks that failed when this happens.) Still, depending on the complexity of the task, Browse AI can bulk scrape hundreds or thousands of items of data per day while running in the background.
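
To put that pace in perspective, here’s a rough estimate in Python. It assumes tasks run strictly one at a time around the clock, which may not match how Browse AI actually schedules work, and it ignores reruns for tasks that time out.

```python
import math

MINUTES_PER_TASK = 1.5      # the pace I saw with LinkedIn
MINUTES_PER_DAY = 24 * 60


def tasks_per_day(minutes_per_task):
    """Tasks completed in 24 hours if they run one after another."""
    return math.floor(MINUTES_PER_DAY / minutes_per_task)


def days_needed(total_tasks, minutes_per_task):
    """Whole days required to finish a batch at that pace."""
    return math.ceil(total_tasks / tasks_per_day(minutes_per_task))


print(tasks_per_day(MINUTES_PER_TASK))        # 960
print(days_needed(50_000, MINUTES_PER_TASK))  # 53
```

At a minute and a half per task, that’s just under a thousand items a day, so a full 50,000-item batch would take weeks rather than hours under these assumptions.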

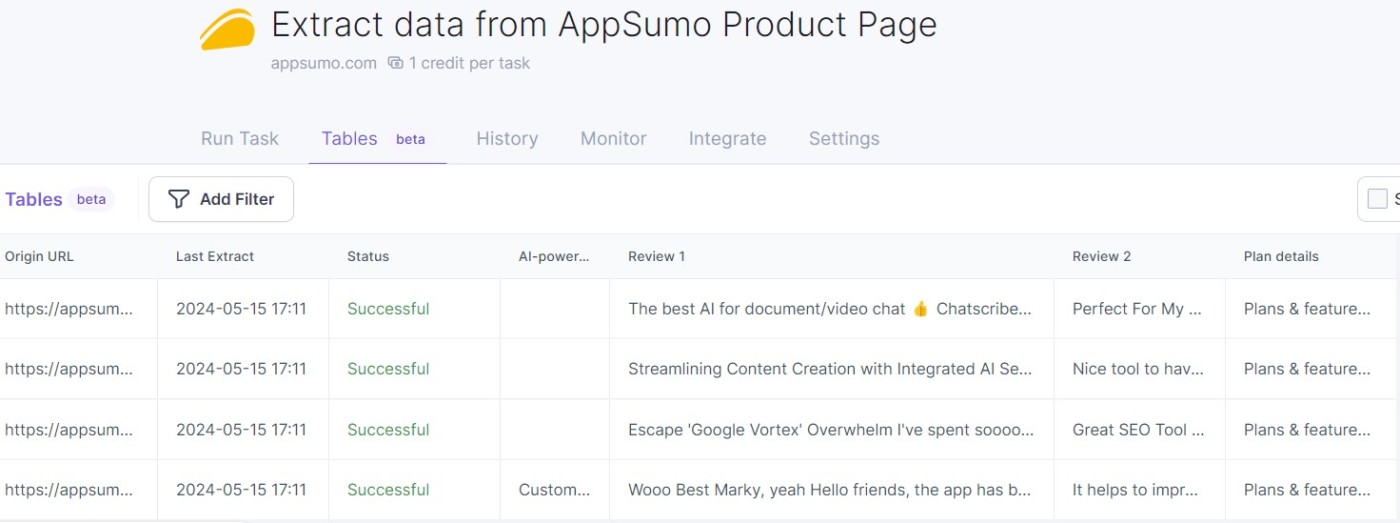

You can see your results in Browse AI’s Tables tab, or click Export to send the information to a CSV.

Monitoring

Snooping around on your competitors’ websites is a smart way to stay competitive. This is one of many use cases for Browse AI’s monitoring robots: you can set them up to regularly scan data on other websites and notify you of changes.

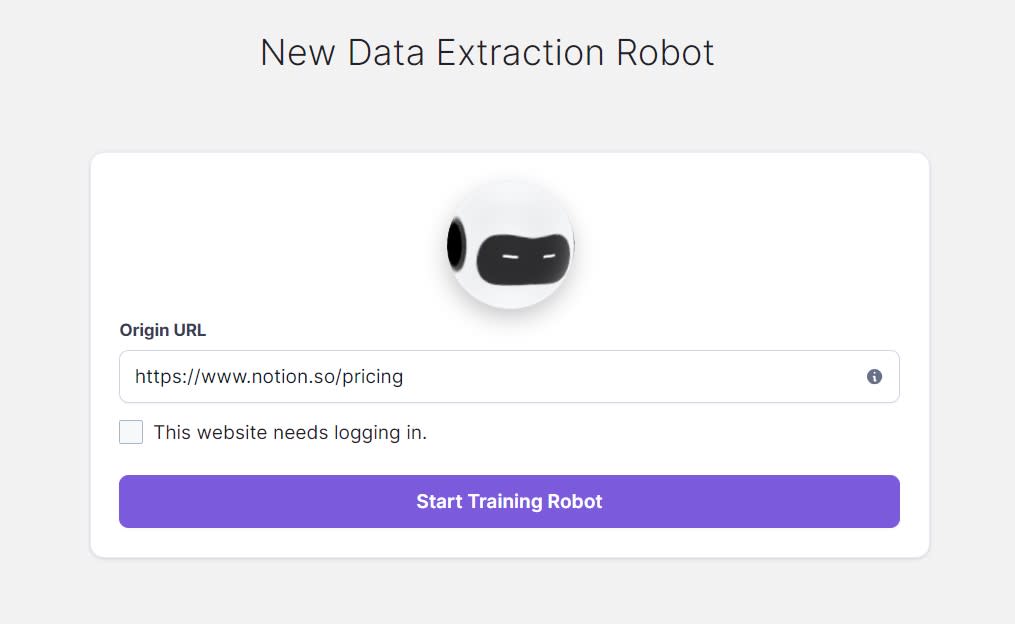

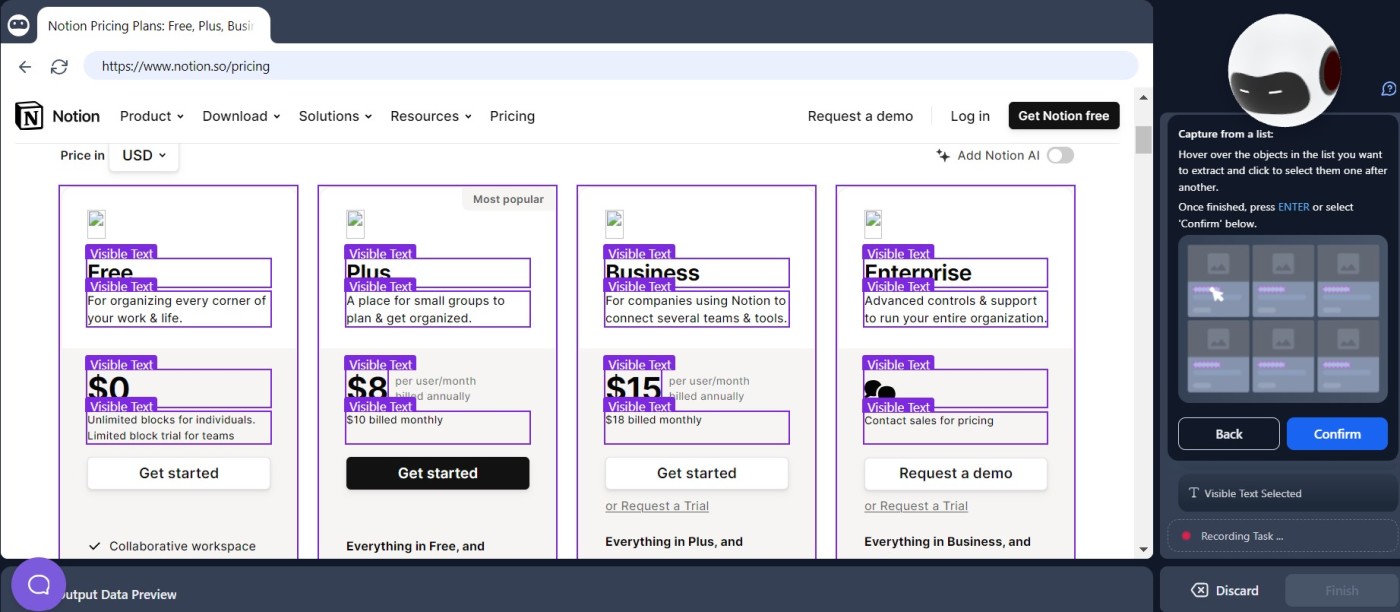

Browse AI offers prebuilt robots to help you monitor pricing and product details on retail sites like Amazon. For the sake of this example, though, I’m interested in grabbing pricing from SaaS websites. That requires training a data extraction robot using Browse AI’s “custom robot” feature.

Fortunately, training a robot is easier than it sounds. To start, you specify the URL you want to monitor.

Then, you need to show the robot precisely which data you want to extract. You can use a Chrome extension or Browse AI’s virtual browser—called Robot Studio—which allows you to interact with a webpage, select the information you want to extract, and import it into a spreadsheet.

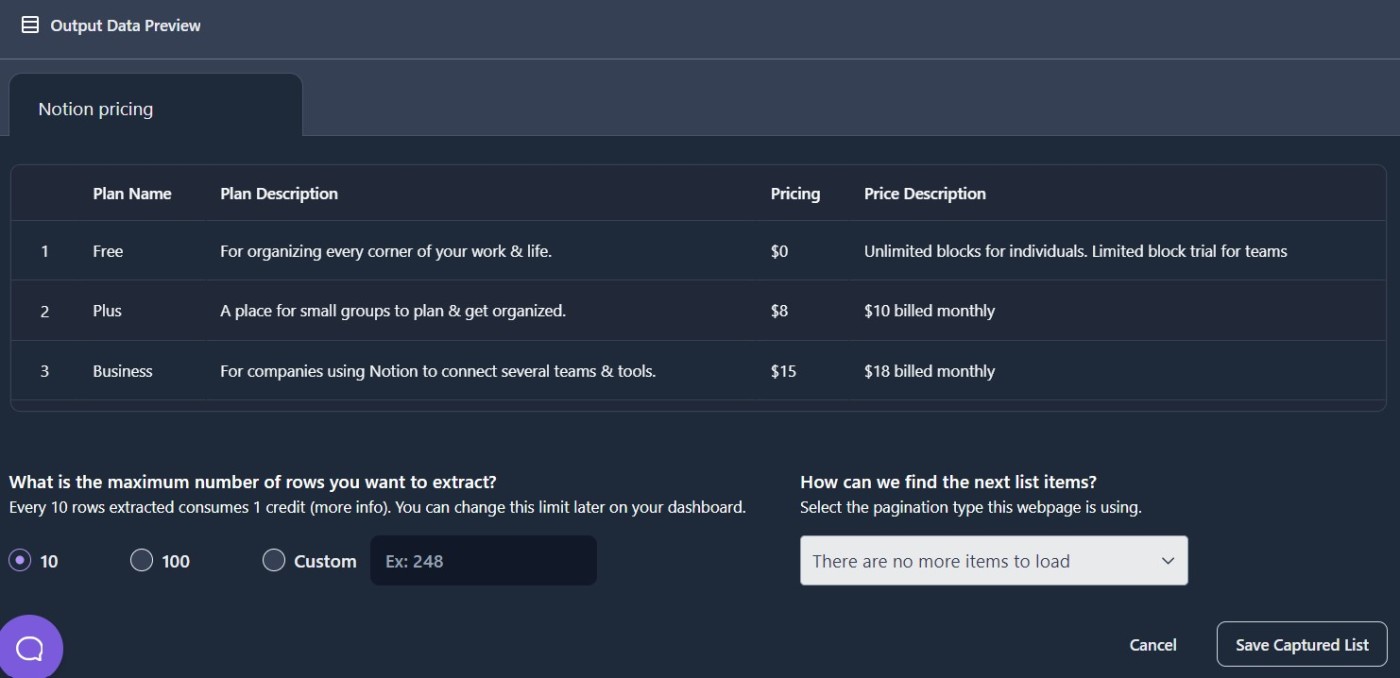

Each of your actions is recorded, so the robot knows when to scroll, when to click, and what data to extract. After selecting your target data, Browse AI will ask you to name each field, so the information can be organized in a table.

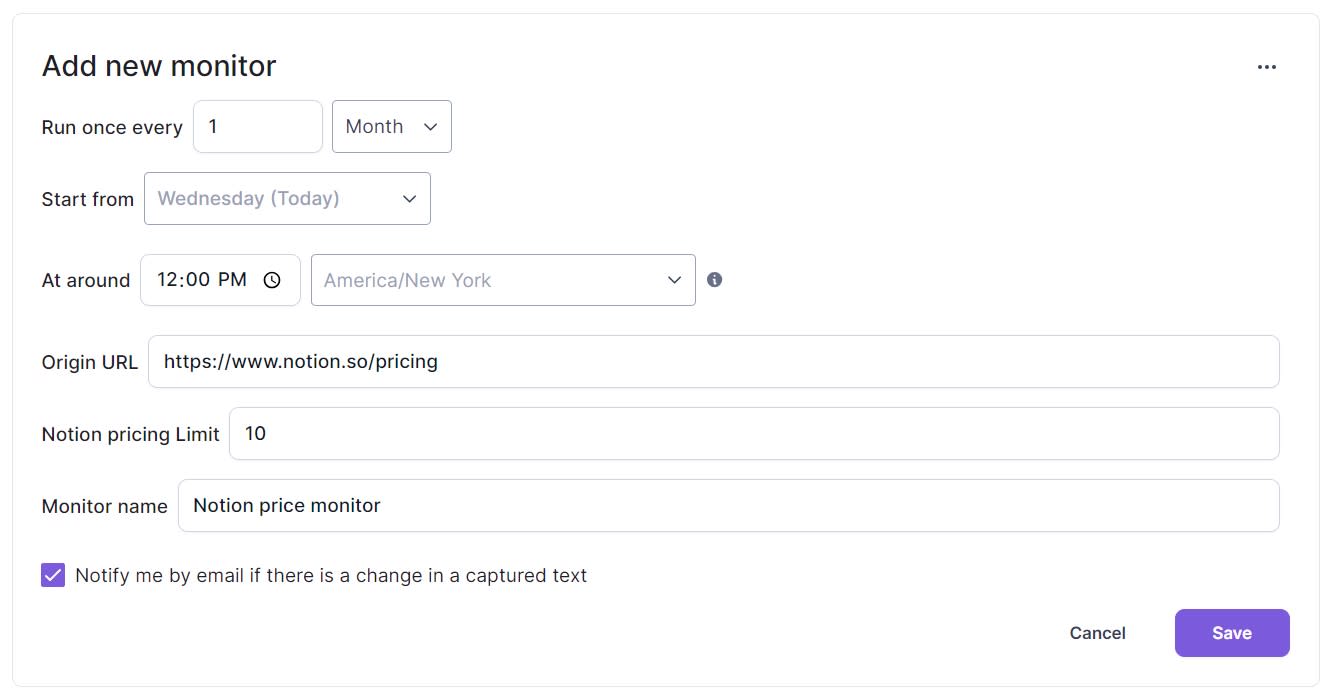

All that’s left is to set up the frequency of monitoring. In this example, I’m asking the robot to run once per month to check for any pricing changes.
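
Conceptually, monitoring boils down to comparing the latest snapshot against the previous one and flagging what changed. The Python sketch below shows that idea only. It is not how Browse AI implements it, and the plan names and prices are invented.

```python
def diff_snapshots(previous, current):
    """Return (field, old_value, new_value) for every field that changed.

    A field missing from one snapshot shows up with None on that side.
    """
    changes = []
    for field in sorted(set(previous) | set(current)):
        old, new = previous.get(field), current.get(field)
        if old != new:
            changes.append((field, old, new))
    return changes


# Hypothetical pricing captured on two monthly runs.
last_month = {"Starter": "$19/month", "Pro": "$49/month"}
this_month = {
    "Starter": "$19/month",
    "Pro": "$59/month",
    "Enterprise": "Contact sales",
}

changes = diff_snapshots(last_month, this_month)
for field, old, new in changes:
    print(f"{field}: {old} -> {new}")
```

Here the unchanged Starter plan is ignored, while the Pro price bump and the new Enterprise tier are both reported.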

Workflows

Here’s where it gets really interesting: using Browse AI’s workflows, you can string multiple robots together, with the second robot taking action on data that the first robot uncovers. For example, your first robot might pull a list of company names and URLs, and your second robot can travel to those URLs to gather even more details. (This process is also known as “deep scraping.”)

Since Browse AI’s robots need to be trained to know what data to extract, running deep scraping in bulk only works if each of the pages you’re scraping is formatted the same way. (Typically, that means you’ll need to stick to scanning subpages of the same website.) That limits the use cases somewhat, but the feature still opens the door to some real time savings.

For example, let’s say I want to maintain a list of the new marketing and sales SaaS products added to AppSumo, along with details from each product page. This requires two robots:

- Robot A: Scans a category-level page to extract the latest products and product URLs.
- Robot B: Follows the URLs uncovered by Robot A to extract more detailed information from product-level pages.

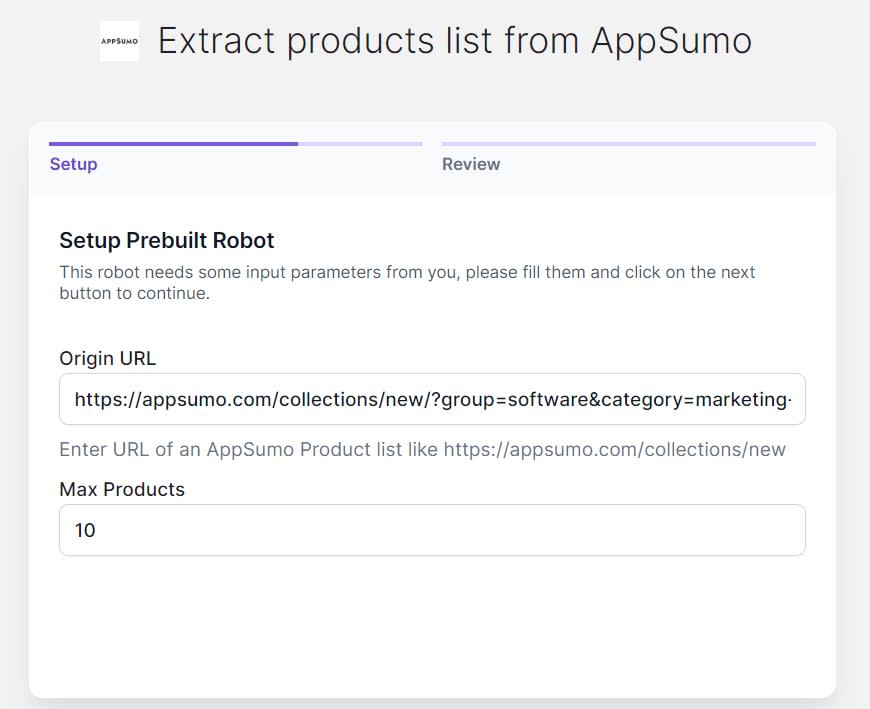

Browse AI actually has a prebuilt AppSumo robot that I can use for Robot A. All I need to do is figure out the new products category I want to scan, copy the URL, and paste it in.

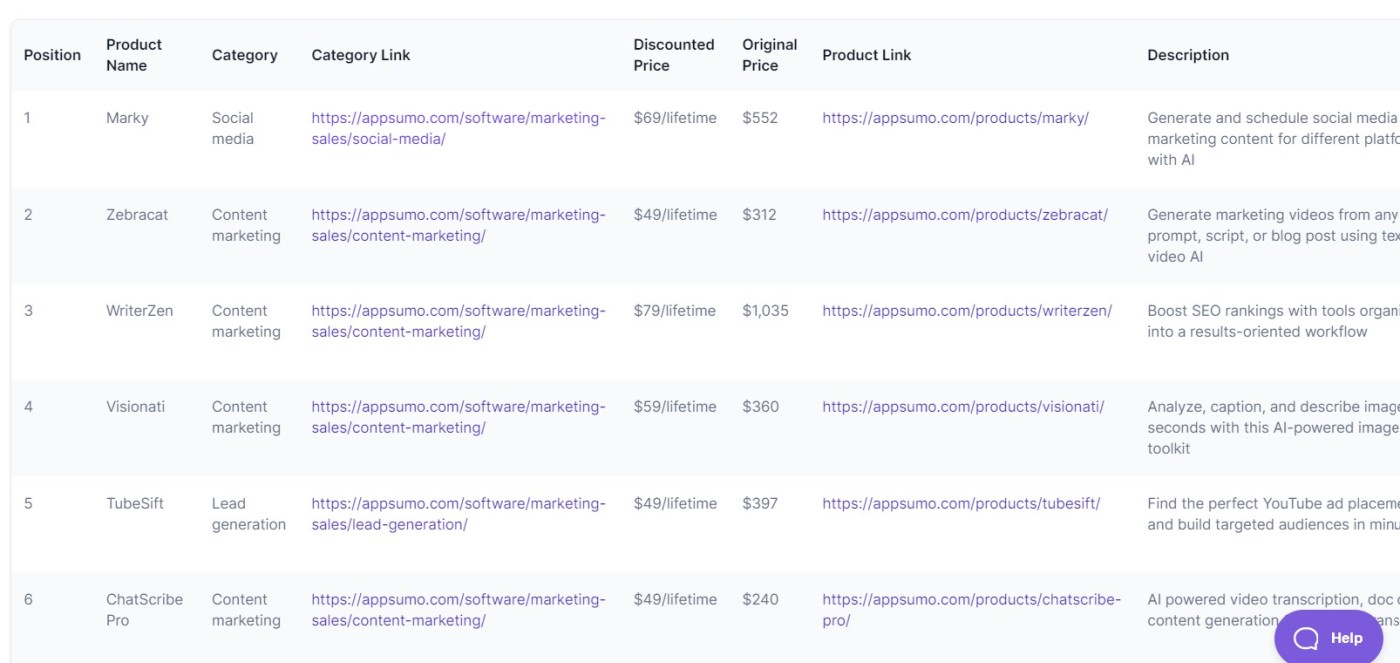

Boom! Now—courtesy of Robot A’s scraping—I have a list of the latest marketing- and sales-related SaaS products from AppSumo, along with high-level details and links to the product page for each.

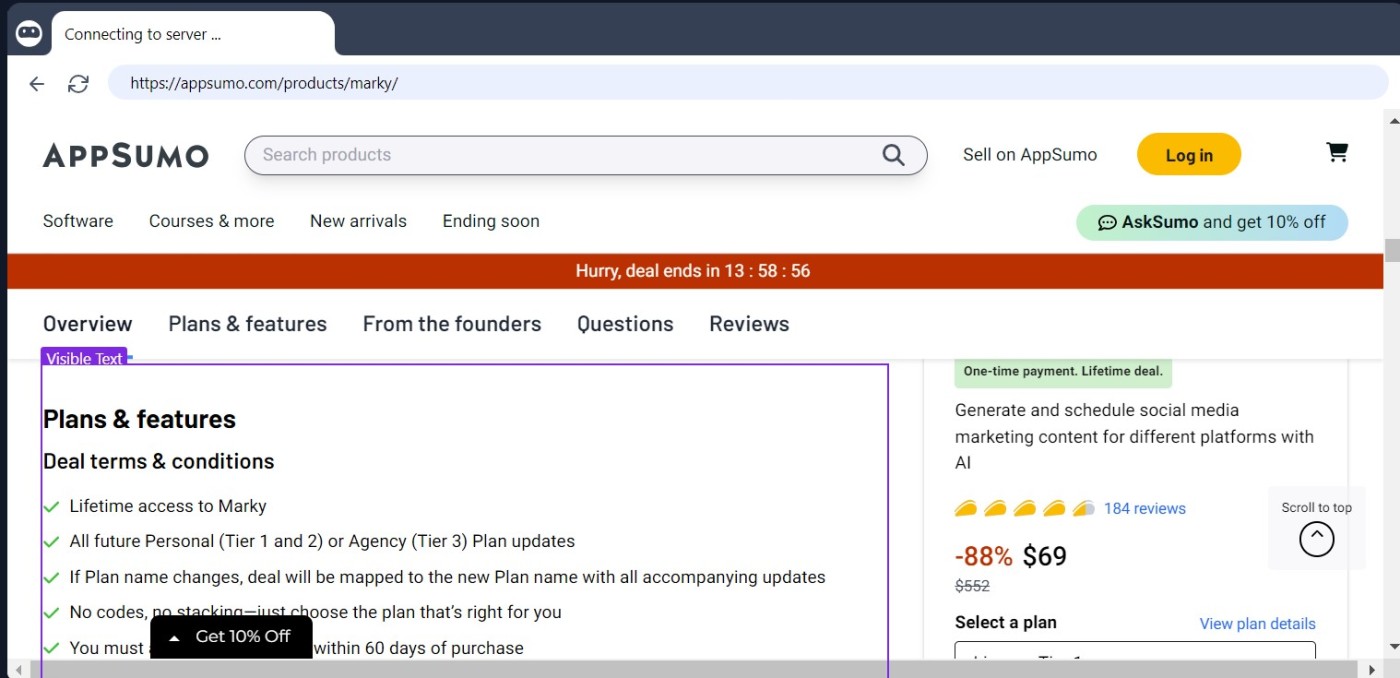

This includes most of the essential data I’d want. But when picking software, I usually look through the reviews, too, along with detailed information about the product’s features. So for a more complete picture, I can use Robot Studio to train Robot B to navigate to each product page and extract a few reviews along with more product detail.

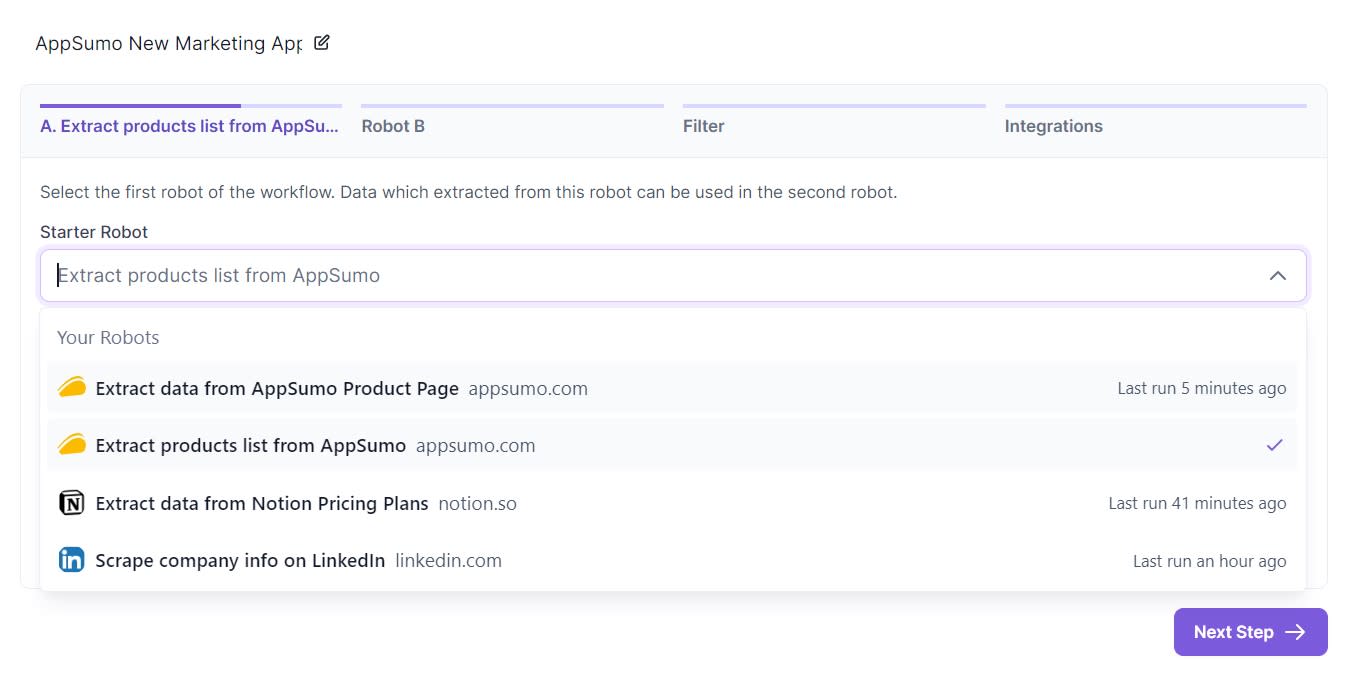

Then, I can use the Workflows feature to string these two robots together and save time by running them in bulk.

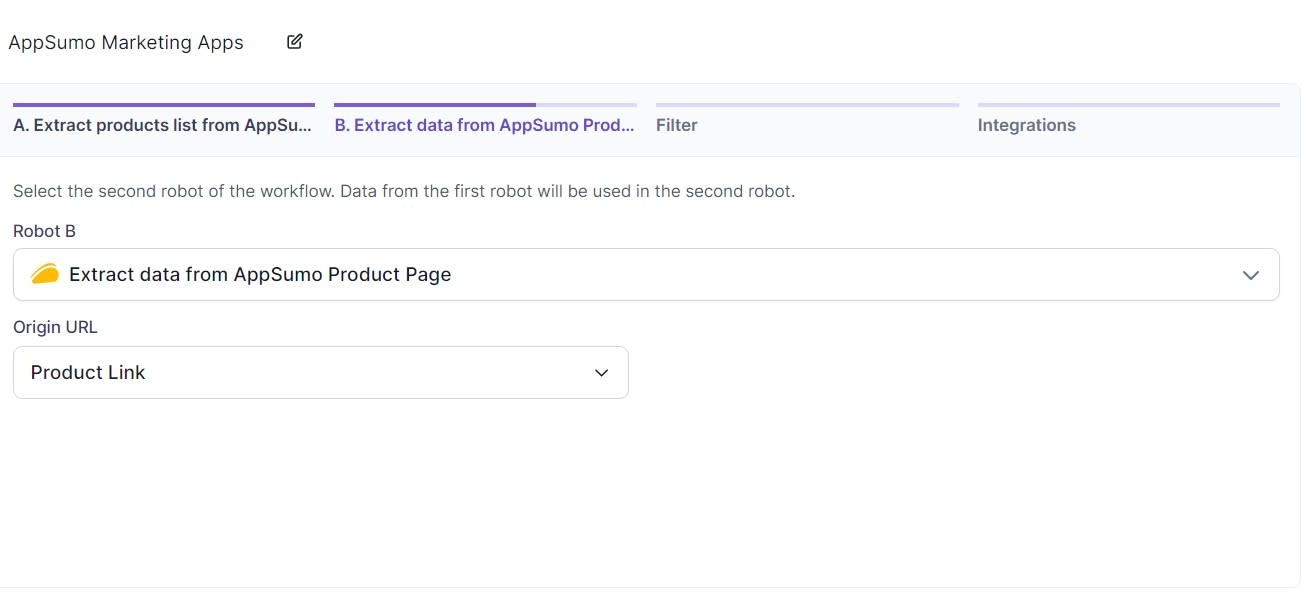

From there, it’s just a matter of telling Robot B to take action based on the URLs uncovered by Robot A.

Now, every time Robot A runs, Robot B runs after it to collect more data.
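
If it helps to see the hand-off spelled out, here’s a minimal Python sketch of the two-robot pattern. Everything in it is a stand-in: the `PAGES` dictionary fakes the website, and `robot_a`, `robot_b`, and `run_workflow` are hypothetical names rather than anything from Browse AI.

```python
# Hypothetical stand-ins for web pages, keyed by URL, so the example runs offline.
PAGES = {
    "https://example.com/category": [
        {"name": "Tool One", "url": "https://example.com/tool-one"},
        {"name": "Tool Two", "url": "https://example.com/tool-two"},
    ],
    "https://example.com/tool-one": {
        "features": "Email sequences",
        "top_review": "Easy to set up",
    },
    "https://example.com/tool-two": {
        "features": "Lead scoring",
        "top_review": "Great support",
    },
}


def robot_a(category_url):
    """Extract product names and URLs from a category-level page."""
    return list(PAGES[category_url])


def robot_b(product_url):
    """Extract details from a single product-level page."""
    return dict(PAGES[product_url])


def run_workflow(category_url):
    """Run Robot A, then run Robot B once for each URL Robot A found.

    Each robot's output is kept in its own table.
    """
    table_a = robot_a(category_url)
    table_b = [{"url": row["url"], **robot_b(row["url"])} for row in table_a]
    return table_a, table_b


table_a, table_b = run_workflow("https://example.com/category")
for row in table_b:
    print(row["url"], "|", row["features"], "|", row["top_review"])
```

Note that `robot_b` only works because both product pages share the same shape, which mirrors the same-format requirement described earlier.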

Browse AI keeps all of this scraped data within its app, but the data for each robot is stored separately, so things can get a little unwieldy when you string multiple robots together. To work around that, you can use Browse AI’s Google Sheets or Airtable integration to map data from each robot into a single sheet.
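
The underlying idea is a simple join on a shared key. The Python sketch below shows it with made-up rows and the product URL as the key. It is a local illustration, not the Google Sheets or Airtable integration itself, and `merge_on_url` is a hypothetical helper.

```python
def merge_on_url(table_a, table_b):
    """Attach Robot B's details to Robot A's rows using the shared URL.

    Rows from table_a with no match in table_b are kept as-is.
    """
    details_by_url = {row["url"]: row for row in table_b}
    merged = []
    for row in table_a:
        merged.append({**row, **details_by_url.get(row["url"], {})})
    return merged


# Hypothetical output from the two robots.
table_a = [
    {"name": "Tool One", "url": "https://example.com/tool-one"},
    {"name": "Tool Two", "url": "https://example.com/tool-two"},
]
table_b = [
    {"url": "https://example.com/tool-one", "top_review": "Easy to set up"},
]

merged = merge_on_url(table_a, table_b)
for row in merged:
    print(row)
```

Keeping unmatched rows means a product still appears in the combined sheet even if the second robot’s run for it timed out.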

Create your own data scraping robot

Data scraping isn’t a job for interns anymore. Today, robots can handle more than you might think, and you don’t have to be a coding whiz to set them up. Outsourcing to one of Browse AI’s robots means more reliable data collection, freeing you up for other activities.

Once you’ve collected your scraped data, you can use Zapier to automate Browse AI even further by connecting it with thousands of other apps. For example, you can use Browse AI to gather data, then use Zapier to automatically update that information in all the other apps you use.