When working with categorical data in machine learning, dummy variables (also called one-hot encoding) are often used to convert categorical values into numerical form. A common issue arises when not all categories appear in every dataset: the encoded test data ends up with different columns than the training data, which leads to errors at prediction time.

In this blog, we will explore dummy variables and the challenges that occur when some categories are absent from a dataset. We will discuss why missing categories cause problems, how to handle those cases effectively, and the best practices that keep machine learning models consistent. So let’s get started!


What are Dummy Variables?

Machine learning models work with numerical data. However, real-world datasets usually contain categorical data (e.g., “Blue”, “Red”, and “Green” for colors, or “Male” and “Female” for gender). Dummy variables are a way to represent categorical values as numbers: each category gets its own binary column (0 or 1). This makes the data usable by the model without assigning arbitrary numerical values that might mislead it, such as an implied ordering between colors.

For example, let’s consider a “Color” column with three categories.

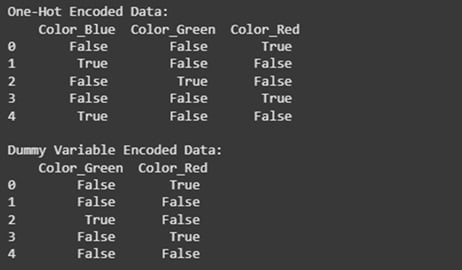

Now, we will use one-hot encoding to create dummy variables.

Now, these numerical values can be used in machine learning models.
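As a minimal sketch of this step, assuming pandas is available and using a small made-up “Color” column (the sample values are illustrative, not taken from the figures), `pd.get_dummies()` produces one binary column per category:

```python
import pandas as pd

# Illustrative data: a single categorical column with three categories.
df = pd.DataFrame({"Color": ["Red", "Blue", "Green", "Red"]})

# One binary column per category; dtype=int gives 0/1 instead of booleans.
dummies = pd.get_dummies(df, columns=["Color"], dtype=int)

print(dummies)
```

Each row contains exactly one 1, in the column that matches its original category.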

Problem: Missing Categories in Data

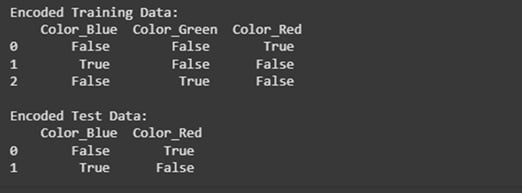

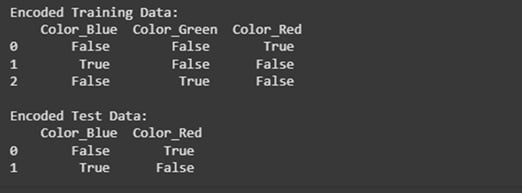

Imagine you are training a model using this training dataset.

But in the test dataset, there are only two categories.

Now, if one-hot encoding is applied, the “Blue” column will be missing from the test dataset.

Training Data (One-Hot Encoded)

Test Data (One-Hot Encoded)

As you can see in the test data, the “Blue” column is missing. To fix this issue, ensure that both the train and test data have the same dummy variables.
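The mismatch can be reproduced with a short sketch. The two small DataFrames below are hypothetical stand-ins for the training and test sets described here; encoding them separately yields different column sets:

```python
import pandas as pd

# Hypothetical train/test data: "Blue" never appears in the test set.
train = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})
test = pd.DataFrame({"Color": ["Red", "Green"]})

train_dummies = pd.get_dummies(train, columns=["Color"], dtype=int)
test_dummies = pd.get_dummies(test, columns=["Color"], dtype=int)

# Columns created for the training data but absent from the test data.
missing = sorted(set(train_dummies.columns) - set(test_dummies.columns))

print(list(train_dummies.columns))
print(list(test_dummies.columns))
print(missing)
```

Comparing the column sets like this is a cheap check to run before passing test data to a model.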

How to Handle Missing Dummy Variables?

The methods below keep dummy variables consistent between the training and test data.

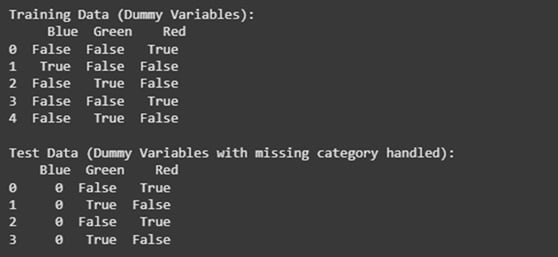

Method 1: Using get_dummies() with reindex()

The get_dummies() function in pandas creates dummy variables. Because it only creates columns for the categories it actually sees, you can follow it with reindex() to ensure that all the expected columns are present.

Example:

Output:

Explanation:

- pd.get_dummies() creates the dummy variables for the training and test data.

- .reindex(), called with train_dummies.columns and fill_value=0, adds any columns that are missing from the test data and fills them with 0.
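The two steps can be sketched as follows. This is an illustrative reconstruction under assumed sample data, not the original example; the name `train_dummies` follows the explanation, while `test_aligned` is a name chosen here:

```python
import pandas as pd

# Assumed sample data: "Blue" is absent from the test set.
train = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})
test = pd.DataFrame({"Color": ["Red", "Green"]})

train_dummies = pd.get_dummies(train, columns=["Color"], dtype=int)
test_dummies = pd.get_dummies(test, columns=["Color"], dtype=int)

# Align the test columns to the training columns; missing ones are filled with 0.
test_aligned = test_dummies.reindex(columns=train_dummies.columns, fill_value=0)

print(test_aligned)
```

Note that reindex() also drops any test column that does not exist in the training columns, so a category seen only in the test set is silently discarded.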

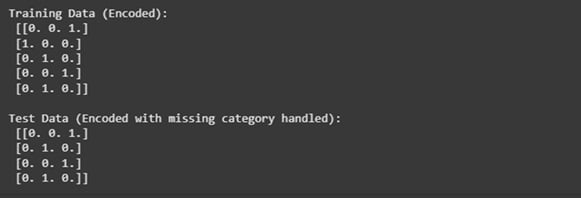

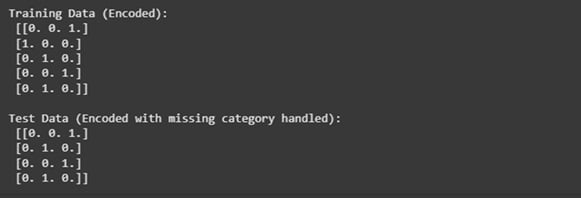

Method 2: Using OneHotEncoder from Scikit-Learn

When categories may differ between datasets, you can use OneHotEncoder from Scikit-Learn. The encoder learns the categories from the training data once and then applies the same transformation to every dataset.

Example:

Output:

Explanation:

- OneHotEncoder is created with handle_unknown='ignore', so a category that was not seen during fitting is encoded as all zeros instead of triggering an error.

- Because the output columns are fixed when the encoder is fitted, every dataset is encoded with the same columns, regardless of which categories appear in the test set.
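A minimal sketch of this approach, assuming scikit-learn is installed and using made-up sample data, might look like this:

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

# Assumed sample data: "Blue" is absent from the test set.
train = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})
test = pd.DataFrame({"Color": ["Red", "Green"]})

# Fit on the training data only, so the output columns are fixed by its categories.
encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(train[["Color"]])

# transform() returns a sparse matrix by default; toarray() makes it dense for display.
train_encoded = encoder.transform(train[["Color"]]).toarray()
test_encoded = encoder.transform(test[["Color"]]).toarray()

print(encoder.categories_[0])
print(test_encoded)
```

The test output still has three columns even though “Blue” never appears in it, because the column layout comes from the fitted encoder rather than from the data being transformed.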

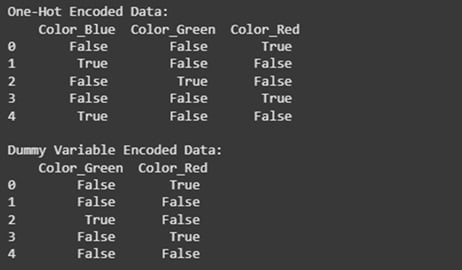

One-Hot Encoding vs. Dummy Variables

The terms One-Hot Encoding and Dummy Variables are often used interchangeably, but there is a difference between them. One-Hot Encoding creates a separate binary column for each category in a feature. Dummy variable encoding drops one of those columns, which helps to avoid the dummy variable trap.

For example, suppose we have a dataset that contains a “Color” column that contains values: “Red”, “Blue”, and “Green”.

After using One-Hot Encoding we get,

| Color | Red | Blue | Green |
|-------|-----|------|-------|
| Red | 1 | 0 | 0 |
| Blue | 0 | 1 | 0 |
| Green | 0 | 0 | 1 |

With dummy variable encoding, the “Green” column is dropped.

| Color | Red | Blue |
|-------|-----|------|
| Red | 1 | 0 |
| Blue | 0 | 1 |
| Green | 0 | 0 |

Why should we drop a column?

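We drop a column because the full set of one-hot columns is redundant: the columns always sum to 1, so any one of them can be derived from the others. This redundancy causes multicollinearity in linear regression models. Dropping one category prevents the issue while still preserving all the necessary information, since a row of all zeros identifies the dropped category.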

Example: Implementation in Python

Output:

Explanation:

The code creates a DataFrame with a categorical “Color” column. It then applies one-hot encoding and dummy variable encoding (which drops one column to avoid data redundancy), and prints both encoded versions.
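A comparison along these lines can be sketched with pandas. The data is assumed for illustration, and `drop_first=True` is the pandas option used here to obtain the dummy variable form:

```python
import pandas as pd

df = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})

# One-hot encoding: one column per category.
one_hot = pd.get_dummies(df, columns=["Color"], dtype=int)

# Dummy variable encoding: drop_first=True removes the first category in sorted order.
dummy = pd.get_dummies(df, columns=["Color"], drop_first=True, dtype=int)

print(one_hot)
print(dummy)
```

Because pandas sorts the categories, this sketch drops “Blue” rather than “Green” as in the table. Which category is dropped does not affect the information content; it only changes which category serves as the all-zeros baseline.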

Impact of Missing Categories on Model Training

While training machine learning models, if some categories from the training data are missing from the test set or vice versa, this can lead to issues.

For example, if we train a model on the color categories [Red, Green, Blue], but the test set contains only [Red, Blue], then the encoded test data has fewer columns than the model expects and prediction fails.

Example:

Output:

Explanation:

The code applies one-hot encoding separately to the training and test datasets. Since the test dataset lacks the “Green” category, the encoded feature columns of the two datasets don’t match.
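To illustrate the effect on a model, here is a hedged sketch with made-up data and labels. `LogisticRegression` is only a stand-in for any scikit-learn estimator; the point is that the estimator rejects input whose columns differ from those seen during fitting:

```python
import pandas as pd
from sklearn.linear_model import LogisticRegression

# Made-up training data with labels; the test set lacks "Green".
train = pd.DataFrame({"Color": ["Red", "Green", "Blue", "Red"], "label": [1, 0, 0, 1]})
test = pd.DataFrame({"Color": ["Red", "Blue"]})

# Encoding each dataset separately gives 3 training columns but only 2 test columns.
X_train = pd.get_dummies(train[["Color"]], columns=["Color"], dtype=int)
X_test = pd.get_dummies(test[["Color"]], columns=["Color"], dtype=int)

model = LogisticRegression().fit(X_train, train["label"])

try:
    model.predict(X_test)
    prediction_failed = False
except ValueError as err:
    # scikit-learn rejects input whose features differ from those seen in fit().
    prediction_failed = True
    print(f"Prediction failed: {err}")
```

The exact error message depends on the scikit-learn version, so the sketch only checks that a ValueError is raised.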

Best Practices for Handling Dummy Variables

Some of the best practices for handling dummy variables are given below.

- You should always use reindex() to ensure that both the training set and the test set have the same dummy variables.

- You can use OneHotEncoder(handle_unknown='ignore'), which helps avoid errors when unknown categories appear in test data.

- If it is possible, try to ensure that the training dataset contains all categories before encoding.

- You should also keep track of the feature names so that all the transformations across training and test data are consistent.
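One possible way to keep track of categories, offered here as an alternative to the two methods covered earlier rather than as part of them, is to declare the full category list up front with a pandas Categorical. The list `COLOR_CATEGORIES` and the helper `encode_colors` are hypothetical names used only for this sketch:

```python
import pandas as pd

# Assumed to be the full, known list of categories for this feature.
COLOR_CATEGORIES = ["Blue", "Green", "Red"]

def encode_colors(df: pd.DataFrame) -> pd.DataFrame:
    """One-hot encode 'Color' using a fixed category list (hypothetical helper)."""
    out = df.copy()
    out["Color"] = pd.Categorical(out["Color"], categories=COLOR_CATEGORIES)
    # get_dummies creates a column for every declared category, observed or not.
    return pd.get_dummies(out, columns=["Color"], dtype=int)

train = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})
test = pd.DataFrame({"Color": ["Red", "Green"]})

train_encoded = encode_colors(train)
test_encoded = encode_colors(test)

print(list(test_encoded.columns))
```

With this design the column layout is defined in one place and does not depend on which values happen to appear in a given dataset. A value outside the declared list becomes missing and is encoded as a row of zeros.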

Conclusion

Machine learning practitioners need reliable methods for processing categorical data with dummy variables. Prediction errors occur when the encoded test dataset is missing columns that the model saw during training. Using reindex() in Pandas or OneHotEncoder(handle_unknown='ignore') in Scikit-learn keeps the columns consistent. Understanding the difference between dummy variables and one-hot encoding also helps you avoid multicollinearity. Tracking categories consistently and applying a robust encoding method will give you machine learning models that stay stable even when categories are missing from the data.

FAQs

1. Why do missing dummy variables cause errors?

If a category that is present in the training data is missing from the test data, the encoded feature columns won’t match. This causes errors during prediction.

2. How can I prevent missing categories in my test data?

You cannot always control which categories appear in the test data, but you can keep the encoded columns consistent by using reindex() in Pandas or OneHotEncoder(handle_unknown='ignore') in Scikit-learn.

3. Is it necessary to one-hot encode categorical data?

Most machine learning models require numerical input, so categorical data must be converted into numerical form before training. One-hot encoding is a common choice for categories that have no natural order.

4. What happens if a new category appears in the test set but was not in the training set?

If a new category appears in the test set but not in the training set, OneHotEncoder(handle_unknown='ignore') encodes it as all zeros instead of raising an error. The model still cannot make use of that category, because it never saw it during training.
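The behavior can be checked with a small sketch. The data is made up, and “Yellow” is a hypothetical category that appears only in the test set:

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

train = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})
test = pd.DataFrame({"Color": ["Red", "Yellow"]})  # "Yellow" was never seen in training

# handle_unknown="ignore": the unseen category becomes a row of zeros.
lenient = OneHotEncoder(handle_unknown="ignore").fit(train[["Color"]])
encoded = lenient.transform(test[["Color"]]).toarray()
print(encoded)

# Default handle_unknown="error": the same input raises a ValueError.
strict = OneHotEncoder().fit(train[["Color"]])
try:
    strict.transform(test[["Color"]])
    strict_failed = False
except ValueError:
    strict_failed = True
print(strict_failed)
```

The all-zeros row is indistinguishable from "none of the known categories", so it is worth monitoring how often unseen categories occur in practice.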

5. Can I use Label Encoding instead of Dummy Variables?

You can use Label Encoding for ordinal data (e.g., “Low”, “Medium”, “High”), where the order of the numbers carries meaning. For non-ordinal data (e.g., “Red”, “Blue”, “Green”), use dummy variables.
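For the ordinal case, one way to sketch the idea is with an ordered pandas Categorical, which lets you state the ranking explicitly. The “Size” column and its values are assumptions made for illustration:

```python
import pandas as pd

df = pd.DataFrame({"Size": ["Low", "High", "Medium", "Low"]})

# An ordered categorical keeps the intended ranking Low < Medium < High.
size_order = ["Low", "Medium", "High"]
df["Size_encoded"] = pd.Categorical(df["Size"], categories=size_order, ordered=True).codes

print(df)
```

An explicit order is used here instead of Scikit-learn's LabelEncoder because LabelEncoder assigns codes in sorted (alphabetical) order, which would rank “High” below “Low”.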