If you’re an AI leader, you might feel like you’re stuck between a rock and a hard place lately.

You have to deliver value from generative AI (GenAI) to keep the board happy and stay ahead of the competition. But you also have to stay on top of the growing chaos, as new tools and ecosystems arrive on the market.

You also have to juggle new GenAI projects, use cases, and enthusiastic users across the organization. Oh, and data security. Your leadership doesn’t want to be the next cautionary tale of good AI gone bad.

If you’re being asked to prove ROI for GenAI but it feels more like you’re playing Whack-a-Mole, you’re not alone.

According to Deloitte, proving AI’s business value is the top challenge for AI leaders. Companies across the globe are struggling to move past prototyping to production. So, here’s how to get it done — and what you need to watch out for.

6 Roadblocks (and Solutions) to Realizing Business Value from GenAI

Roadblock #1. You Set Yourself Up For Vendor Lock-In

GenAI is moving crazy fast. New innovations — LLMs, vector databases, embedding models — are being created daily. So getting locked into a specific vendor right now doesn’t just risk your ROI a year from now. It could literally hold you back next week.

Let’s say you’re all in on one LLM provider right now. What if costs rise and you want to switch to a new provider or use different LLMs depending on your specific use cases? If you’re locked in, getting out could eat any cost savings that you’ve generated with your AI initiatives — and then some.

Solution: Choose a Versatile, Flexible Platform

Prevention is the best cure. To maximize your freedom and adaptability, choose solutions that make it easy for you to move your entire AI lifecycle, pipeline, data, vector databases, embedding models, and more – from one provider to another.

For instance, DataRobot gives you full control over your AI strategy — now, and in the future. Our open AI platform lets you maintain total flexibility, so you can use any LLM, vector database, or embedding model – and swap out underlying components as your needs change or the market evolves, without breaking production. We even give our customers the access to experiment with common LLMs, too.

Roadblock #2. Off-the-Grid Generative AI Creates Chaos

If you thought predictive AI was challenging to control, try GenAI on for size. Your data science team likely acts as a gatekeeper for predictive AI, but anyone can dabble with GenAI — and they will. Where your company might have 15 to 50 predictive models, at scale, you could well have 200+ generative AI models all over the organization at any given time.

Worse, you might not even know about some of them. “Off-the-grid” GenAI projects tend to escape leadership purview and expose your organization to significant risk.

While this enthusiastic use of AI can be a recipe for greater business value, in fact, the opposite is often true. Without a unifying strategy, GenAI can create soaring costs without delivering meaningful results.

Solution: Manage All of Your AI Assets in a Unified Platform

Fight back against this AI sprawl by getting all your AI artifacts housed in a single, easy-to-manage platform, regardless of who made them or where they were built. Create a single source of truth and system of record for your AI assets — the way you do, for instance, for your customer data.

Once you have your AI assets in the same place, then you’ll need to apply an LLMOps mentality:

- Create standardized governance and security policies that will apply to every GenAI model.

- Establish a process for monitoring key metrics about models and intervening when necessary.

- Build feedback loops to harness user feedback and continuously improve your GenAI applications.

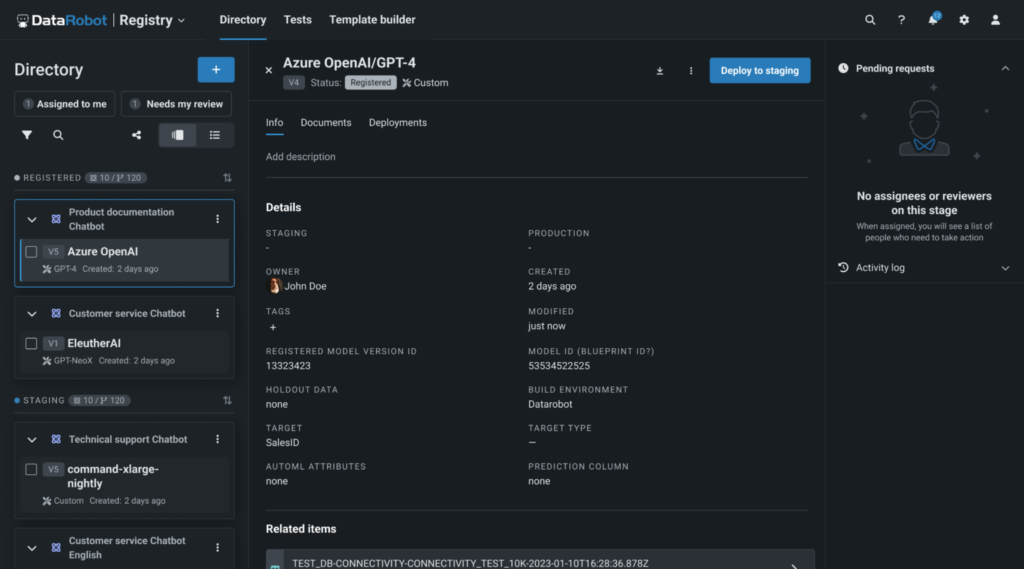

DataRobot does this all for you. With our AI Registry, you can organize, deploy, and manage all of your AI assets in the same location – generative and predictive, regardless of where they were built. Think of it as a single source of record for your entire AI landscape – what Salesforce did for your customer interactions, but for AI.

Roadblock #3. GenAI and Predictive AI Initiatives Aren’t Under the Same Roof

If you’re not integrating your generative and predictive AI models, you’re missing out. The power of these two technologies put together is a massive value driver, and businesses that successfully unite them will be able to realize and prove ROI more efficiently.

Here are just a few examples of what you could be doing if you combined your AI artifacts in a single unified system:

- Create a GenAI-based chatbot in Slack so that anyone in the organization can query predictive analytics models with natural language (Think, “Can you tell me how likely this customer is to churn?”). By combining the two types of AI technology, you surface your predictive analytics, bring them into the daily workflow, and make them far more valuable and accessible to the business.

- Use predictive models to control the way users interact with generative AI applications and reduce risk exposure. For instance, a predictive model could stop your GenAI tool from responding if a user gives it a prompt that has a high probability of returning an error or it could catch if someone’s using the application in a way it wasn’t intended.

- Set up a predictive AI model to inform your GenAI responses, and create powerful predictive apps that anyone can use. For example, your non-tech employees could ask natural language queries about sales forecasts for next year’s housing prices, and have a predictive analytics model feeding in accurate data.

- Trigger GenAI actions from predictive model results. For instance, if your predictive model predicts a customer is likely to churn, you could set it up to trigger your GenAI tool to draft an email that will go to that customer, or a call script for your sales rep to follow during their next outreach to save the account.

However, for many companies, this level of business value from AI is impossible because they have predictive and generative AI models siloed in different platforms.

Solution: Combine your GenAI and Predictive Models

With a system like DataRobot, you can bring all your GenAI and predictive AI models into one central location, so you can create unique AI applications that combine both technologies.

Not only that, but from inside the platform, you can set and track your business-critical metrics and monitor the ROI of each deployment to ensure their value, even for models running outside of the DataRobot AI Platform.

Roadblock #4. You Unknowingly Compromise on Governance

For many businesses, the primary purpose of GenAI is to save time — whether that’s reducing the hours spent on customer queries with a chatbot or creating automated summaries of team meetings.

However, this emphasis on speed often leads to corner-cutting on governance and monitoring. That doesn’t just set you up for reputational risk or future costs (when your brand takes a major hit as the result of a data leak, for instance.) It also means that you can’t measure the cost of or optimize the value you’re getting from your AI models right now.

Solution: Adopt a Solution to Protect Your Data and Uphold a Robust Governance Framework

To solve this issue, you’ll need to implement a proven AI governance tool ASAP to monitor and control your generative and predictive AI assets.

A solid AI governance solution and framework should include:

- Clear roles, so every team member involved in AI production knows who is responsible for what

- Access control, to limit data access and permissions for changes to models in production at the individual or role level and protect your company’s data

- Change and audit logs, to ensure legal and regulatory compliance and avoid fines

- Model documentation, so you can show that your models work and are fit for purpose

- A model inventory to govern, manage, and monitor your AI assets, irrespective of deployment or origin

Current best practice: Find an AI governance solution that can prevent data and information leaks by extending LLMs with company data.

The DataRobot platform includes these safeguards built-in, and the vector database builder lets you create specific vector databases for different use cases to better control employee access and make sure the responses are super relevant for each use case, all without leaking confidential information.

Roadblock #5. It’s Tough To Maintain AI Models Over Time

Lack of maintenance is one of the biggest impediments to seeing business results from GenAI, according to the same Deloitte report mentioned earlier. Without excellent upkeep, there’s no way to be confident that your models are performing as intended or delivering accurate responses that’ll help users make sound data-backed business decisions.

In short, building cool generative applications is a great starting point — but if you don’t have a centralized workflow for tracking metrics or continuously improving based on usage data or vector database quality, you’ll do one of two things:

- Spend a ton of time managing that infrastructure.

- Let your GenAI models decay over time.

Neither of those options is sustainable (or secure) long-term. Failing to guard against malicious activity or misuse of GenAI solutions will limit the future value of your AI investments almost instantaneously.

Solution: Make It Easy To Monitor Your AI Models

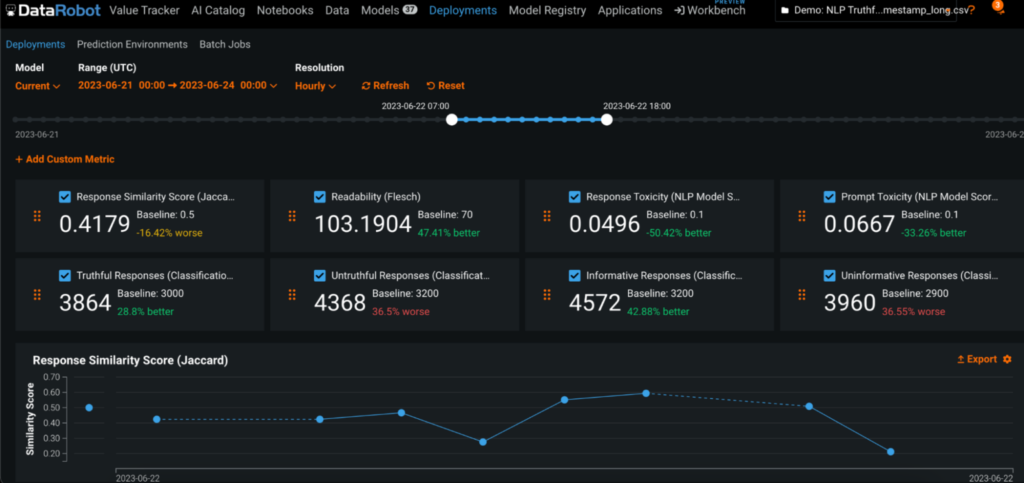

To be valuable, GenAI needs guardrails and steady monitoring. You need the AI tools available so that you can track:

- Employee and customer-generated prompts and queries over time to ensure your vector database is complete and up to date

- Whether your current LLM is (still) the best solution for your AI applications

- Your GenAI costs to make sure you’re still seeing a positive ROI

- When your models need retraining to stay relevant

DataRobot can give you that level of control. It brings all your generative and predictive AI applications and models into the same secure registry, and lets you:

- Set up custom performance metrics relevant to specific use cases

- Understand standard metrics like service health, data drift, and accuracy statistics

- Schedule monitoring jobs

- Set custom rules, notifications, and retraining settings. If you make it easy for your team to maintain your AI, you won’t start neglecting maintenance over time.

Roadblock #6. The Costs are Too High – or Too Hard to Track

Generative AI can come with some serious sticker shock. Naturally, business leaders feel reluctant to roll it out at a sufficient scale to see meaningful results or to spend heavily without recouping much in terms of business value.

Keeping GenAI costs under control is a huge challenge, especially if you don’t have real oversight over who is using your AI applications and why they’re using them.

Solution: Track Your GenAI Costs and Optimize for ROI

You need technology that lets you monitor costs and usage for each AI deployment. With DataRobot, you can track everything from the cost of an error to toxicity scores for your LLMs to your overall LLM costs. You can choose between LLMs depending on your application and optimize for cost-effectiveness.

That way, you’re never left wondering if you’re wasting money with GenAI — you can prove exactly what you’re using AI for and the business value you’re getting from each application.

Deliver Measurable AI Value with DataRobot

Proving business value from GenAI is not an impossible task with the right technology in place. A recent economic analysis by the Enterprise Strategy Group found that DataRobot can provide cost savings of 75% to 80% compared to using existing resources, giving you a 3.5x to 4.6x expected return on investment and accelerating time to initial value from AI by up to 83%.

DataRobot can help you maximize the ROI from your GenAI assets and:

- Mitigate the risk of GenAI data leaks and security breaches

- Keep costs under control

- Bring every single AI project across the organization into the same place

- Empower you to stay flexible and avoid vendor lock-in

- Make it easy to manage and maintain your AI models, regardless of origin or deployment

If you’re ready for GenAI that’s all value, not all talk, start your free trial today.

About the author

Joined DataRobot through the acquisition of Nutonian in 2017, where she works on DataRobot Time Series for accounts across all industries, including retail, finance, and biotech. Jessica studied Economics and Computer Science at Smith College.